By Jim Shimabukuro (assisted by Grok)

Editor

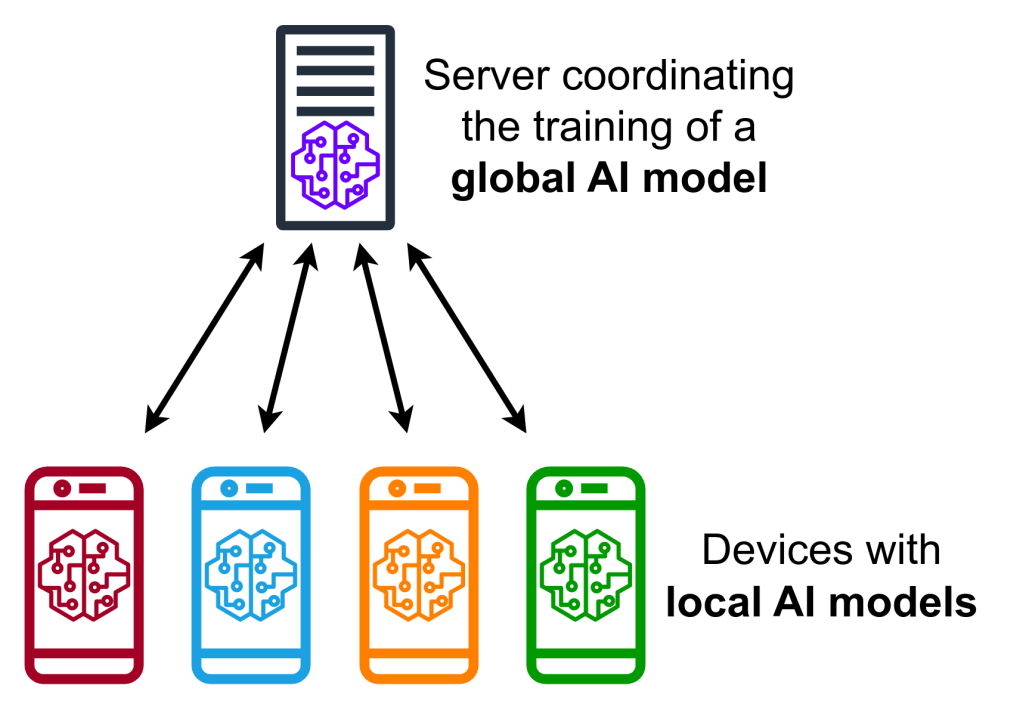

1. Federated Learning: The Quiet Revolution in Privacy-Preserving AI

Federated learning is a machine learning paradigm that allows multiple devices or organizations to collaboratively train AI models without sharing raw data. Instead of centralizing sensitive information on a single server, participants train models locally on edge devices, such as smartphones or hospital servers, and exchange only model updates or gradients. Because raw data never leaves its source, privacy risks are reduced, and techniques like secure aggregation and differential privacy further obscure individual contributions. The process begins with a global model distributed to participants; each participant trains it locally, and the server aggregates the resulting updates to refine the global model, round after round.
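The round structure described above can be sketched in a few lines. This is a minimal illustration of federated averaging on a toy linear model, not any vendor's production system; the helper names (`local_update`, `federated_average`, `make_client_data`) and the synthetic data are assumptions made for the example, and secure aggregation and differential privacy are left out.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    The model is y = w * x + b; only the updated weights leave this function.
    """
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Weighted mean of client weights, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return tuple(
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    )

def make_client_data(n, rng):
    # Hypothetical private data drawn from y = 3x + 1 plus small noise.
    return [(x, 3 * x + 1 + rng.gauss(0, 0.01))
            for x in (rng.uniform(-1, 1) for _ in range(n))]

rng = random.Random(0)
clients = [make_client_data(n, rng) for n in (20, 50, 30)]

global_weights = (0.0, 0.0)
for round_num in range(50):
    # Each client trains locally, starting from the current global model.
    updates = [local_update(global_weights, data) for data in clients]
    # The server sees only weights, never the (x, y) pairs.
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)  # should end up close to (3, 1)
```

Weighting each client by its sample count is one common choice; a real deployment would also have to handle clients that drop out mid-round and data that differs widely between participants.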

Leading this charge are tech giants and research institutions. Google pioneered federated learning in its Gboard keyboard app, improving next-word predictions on Android devices without uploading what users type. Apple employs it in iOS for features like QuickType suggestions and health data analysis. In healthcare, IBM and NVIDIA are advancing applications through partnerships with hospitals, while privacy rules such as the European Union’s GDPR have spurred adoption in finance, with companies like JPMorgan Chase exploring it for fraud detection. Development is concentrated in Silicon Valley, USA, with significant contributions from Europe’s Max Planck Institute and China’s Tencent, where privacy laws drive innovation.

This trend is gaining traction primarily in regulated industries like healthcare, finance, and automotive, where data silos are common. For instance, in the EU and US, hospitals collaborate on disease models without breaching patient confidentiality, accelerating drug discovery. In Asia, telecom firms like Huawei use it for network optimization. Its popularity stems from escalating data privacy concerns (global breaches rose 20% in 2024) and the explosion of IoT devices, projected to reach 75 billion by 2025. Unlike flashy generative AI, federated learning operates behind the scenes, enabling scalable, ethical AI without fanfare. As security threats evolve, its decentralized nature offers resilience, since no single server holds all the raw data, and it is quietly reshaping collaborative AI. Challenges like communication overhead persist, but with open-source frameworks like TensorFlow Federated, adoption is surging among enterprises seeking compliant, efficient training. This under-the-radar gem promises a future where AI learns from the world without invading it, fostering trust in an increasingly data-driven era.

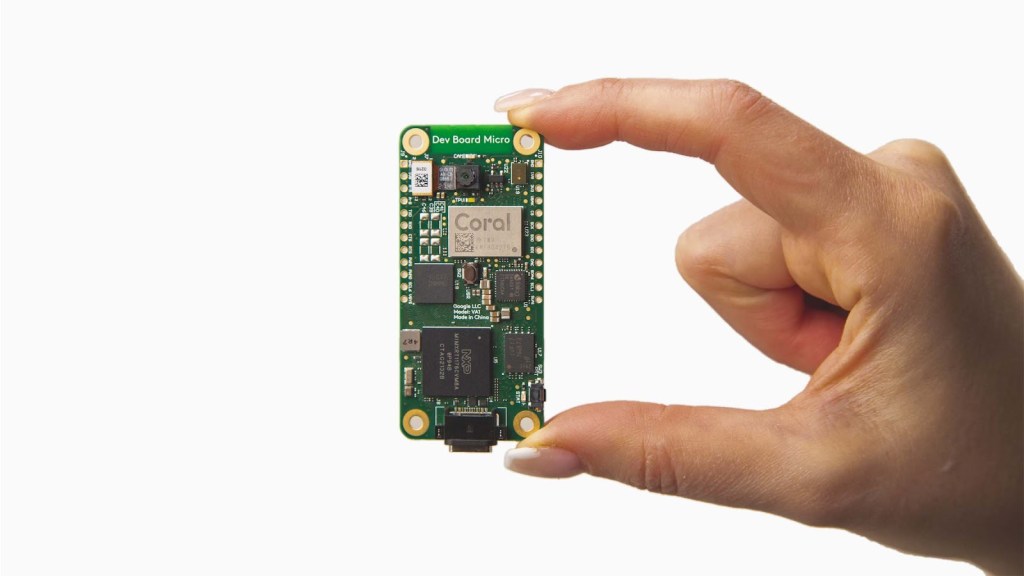

2. TinyML: Empowering AI at the Edge of Everyday Devices

TinyML, or Tiny Machine Learning, refers to the deployment of lightweight AI models on resource-constrained microcontrollers and embedded systems, enabling real-time inference without relying on cloud connectivity. These models, often under 1MB in size, use techniques like quantization, pruning, and knowledge distillation to compress complex algorithms, allowing them to run on devices with mere kilobytes of RAM and milliwatts of power. Applications range from predictive maintenance in sensors to voice recognition in wearables, processing data locally to reduce latency and enhance privacy.
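To make the compression step concrete, here is a small sketch of two of the techniques named above, magnitude pruning followed by symmetric int8 quantization. The function names and the sample weights are made up for illustration, and real toolchains typically quantize per layer or per channel with calibration data rather than using one global scale.

```python
def quantize_int8(values):
    """Map floats to int8 codes with one shared scale (symmetric quantization)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]

def prune_by_magnitude(values, fraction):
    """Zero out the smallest-magnitude weights (unstructured pruning)."""
    k = int(len(values) * fraction)
    smallest = sorted(range(len(values)), key=lambda i: abs(values[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else v for i, v in enumerate(values)]

# Hypothetical weights from one small layer.
weights = [0.82, -0.03, 0.41, -1.27, 0.002, 0.65, -0.09, 0.30]

pruned = prune_by_magnitude(weights, 0.25)   # drop the 2 smallest weights
codes, scale = quantize_int8(pruned)         # 1 byte per weight instead of 4
restored = dequantize(codes, scale)

max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(codes)
print(scale, max_error)
```

Storing each weight as an 8-bit code instead of a 32-bit float cuts weight storage by about four times, and the rounding error per weight is bounded by half the scale.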

Key players include Google with TensorFlow Lite for Microcontrollers (often called TensorFlow Lite Micro), a widely deployed TinyML runtime, and Arm, whose Cortex-M processors form the hardware backbone. Startups like Edge Impulse, headquartered in San Jose, California, provide low-code platforms for model deployment, while academic hubs like Harvard’s TinyMLx initiative drive research. In industry, Bosch and STMicroelectronics integrate it into automotive and industrial sensors. Development hotspots include Silicon Valley, where Google’s teams iterate on frameworks; Europe, particularly Germany and the UK for IoT applications; and Asia, with China’s Huawei embedding TinyML in smart home ecosystems.

TinyML is proliferating in IoT-heavy sectors like agriculture, where soil sensors predict crop yields, and healthcare, via wearables monitoring vital signs offline. In manufacturing, it’s used for anomaly detection in machinery, preventing downtime. Its quiet rise is fueled by the IoT boom (expected to generate $1.6 trillion by 2025) and energy efficiency demands amid climate concerns. Unlike cloud AI, it thrives in remote or bandwidth-limited environments, such as African wildlife trackers or Arctic weather stations. Popularity grows due to cost savings (no constant data transmission) and security, as data stays on-device amid rising cyber threats. Open-source communities on GitHub accelerate innovation, with over 10,000 contributors. Hardware limitations still constrain accuracy, but advancements in neuromorphic chips are bridging gaps. TinyML democratizes AI, embedding intelligence into the fabric of daily life without the spotlight, poised to transform edge computing into a trillion-dollar market by enabling ubiquitous, sustainable smart systems.

3. Self-Supervised Learning: Unlocking AI from Unlabeled Data Oceans

Self-supervised learning (SSL) is a machine learning technique where models generate their own supervisory signals from unlabeled data, eliminating the need for costly human annotations. By creating “pretext tasks”—like predicting masked parts of an image or sentence—the model learns rich representations, which can then be fine-tuned for specific downstream tasks. This contrasts with supervised learning’s reliance on labeled datasets, making SSL scalable for vast, raw data sources.
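As a rough illustration of a pretext task, the sketch below turns a raw sentence into a training pair by hiding some tokens and keeping the originals as prediction targets. It is a simplified take on masked-token prediction, not the exact recipe of any named model; `make_masked_example`, the `[MASK]` string, and the sample sentence are assumptions for the example.

```python
import random

MASK = "[MASK]"

def make_masked_example(tokens, mask_prob=0.15, rng=random):
    """Turn an unlabeled token list into an (inputs, targets) training pair.

    targets maps each masked position to the original token, so the labels
    come from the data itself rather than from a human annotator.
    """
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok
        else:
            inputs.append(tok)
    if not targets:  # guarantee at least one prediction target
        i = rng.randrange(len(tokens))
        targets[i] = tokens[i]
        inputs[i] = MASK
    return inputs, targets

rng = random.Random(42)
sentence = "models can learn structure from raw unlabeled text".split()
inputs, targets = make_masked_example(sentence, mask_prob=0.3, rng=rng)

print(inputs)   # the sentence with some tokens replaced by [MASK]
print(targets)  # position -> original token the model must predict
```

A model trained to fill in the masked positions never sees a human-written label, which is what lets this approach scale to very large unlabeled corpora.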

Pioneers include Meta (formerly Facebook), with models like DINO for vision, and Google, whose BERT pre-trains on massive text corpora for language. OpenAI relies on self-supervised pre-training in its GPT models, while academic leaders like Yann LeCun at NYU advance theoretical foundations. Companies such as Hugging Face host SSL models on their platform, democratizing access. In healthcare, PathAI uses it for pathology image analysis. Development is centered in the US (Silicon Valley and New York), Canada (Vector Institute in Toronto), and France (INRIA), where collaborative research flourishes.

SSL is applied in natural language processing for sentiment analysis without labeled reviews, computer vision for object detection in autonomous driving, and biology for protein folding predictions. In retail, Amazon employs it for recommendation systems sifting through user behavior data. Its surge in popularity arises from data abundance (internet content doubles every two years) coupled with labeling costs exceeding $1 billion annually for large datasets. Privacy regulations like CCPA push for less human involvement. Quietly gaining ground in enterprises, SSL reduces training time by 90% in some cases, enabling AI in data-scarce domains like rare disease research. Open-source, PyTorch-based libraries such as VISSL and Lightly fuel adoption among startups. Challenges include the complexity of designing pretext tasks, but hybrid approaches with weak supervision are evolving. Under the public radar due to its backend nature, SSL is revolutionizing AI efficiency, powering breakthroughs in multimodal models and fostering sustainable, autonomous learning systems that mimic human intuition from unstructured worlds.

4. Agentic AI: Autonomous Systems Redefining Human-Machine Collaboration

Agentic AI encompasses intelligent software agents that act autonomously to achieve goals, reasoning through complex tasks with minimal human oversight. These agents break down objectives into subtasks, interact with tools or environments, and adapt via feedback loops, often powered by large language models (LLMs) enhanced with reasoning and planning techniques like chain-of-thought prompting. Unlike passive chatbots, they execute actions, such as booking flights or analyzing data.
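The decompose-then-act pattern can be shown with a toy plan-then-execute loop. Everything here is hypothetical: `plan` is a hard-coded stand-in for an LLM call, the three tools are invented for the example, and the loop does not re-plan after failures the way a production agent would.

```python
def plan(goal):
    """Hypothetical planner standing in for an LLM call.

    Returns an ordered list of (tool_name, argument) subtasks.
    """
    if goal == "report average temperature":
        return [("fetch_readings", "sensor-a"), ("average", None), ("format", None)]
    raise ValueError(f"no plan for goal: {goal}")

# Hypothetical tools the agent may call; real agents would wrap APIs here.
def fetch_readings(sensor_id, _state):
    data = {"sensor-a": [20.5, 21.0, 22.0]}
    return data[sensor_id]

def average(_arg, state):
    return sum(state) / len(state)

def format_result(_arg, state):
    return f"Average temperature: {state:.1f} C"

TOOLS = {
    "fetch_readings": fetch_readings,
    "average": average,
    "format": format_result,
}

def run_agent(goal, max_steps=10):
    """Execute planned subtasks in order, feeding each result to the next."""
    state, log = None, []
    for step, (tool_name, arg) in enumerate(plan(goal)):
        if step >= max_steps:
            raise RuntimeError("step budget exhausted")
        state = TOOLS[tool_name](arg, state)
        log.append((tool_name, state))  # trace kept for human oversight
    return state, log

result, log = run_agent("report average temperature")
print(result)  # Average temperature: 21.2 C
```

The step budget and the action log are the kind of guardrails that keep an autonomous loop inspectable; a fuller agent would feed each tool result back to the planner and let it revise the remaining steps.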

Trailblazers include OpenAI, whose models power open-source projects like Auto-GPT, and Microsoft, which is integrating agents into Copilot. Anthropic, with Claude, and Google DeepMind lead research, while enterprises like UiPath embed agents in robotic process automation. Startups such as Adept in San Francisco focus on agentic interfaces. Development thrives in the US (Bay Area), UK (DeepMind in London), and China (Baidu’s Ernie agents), supported by venture funding exceeding $5 billion in 2024.

Applications span workplaces: in IT, agents automate password resets; in finance, they optimize portfolios. Healthcare sees agents triaging patient data, while e-commerce uses them for personalized shopping. Adoption is global, with EU pilots in smart cities and Asian factories using agents for supply chain management. Its popularity swells due to productivity demands (GenAI experiments show 20-30% efficiency gains) and post-pandemic workforce shortages. With AI hardware advancing, agents handle unstructured data via retrieval-augmented generation (RAG), which grounds responses in retrieved documents and helps reduce hallucinations. Agentic AI stays under the radar because it is enterprise-focused rather than consumer-facing like ChatGPT; it grows quietly via internal tools, with 68% of IT leaders planning adoption by mid-2025. Ethical concerns like bias require governance, and frameworks from the OECD are emerging. This trend heralds a shift to “superagency,” empowering humans by offloading routine cognition, potentially adding trillions to global GDP while reshaping jobs toward oversight roles. As models evolve, agentic AI promises seamless integration, quietly automating the mundane to unlock human potential.

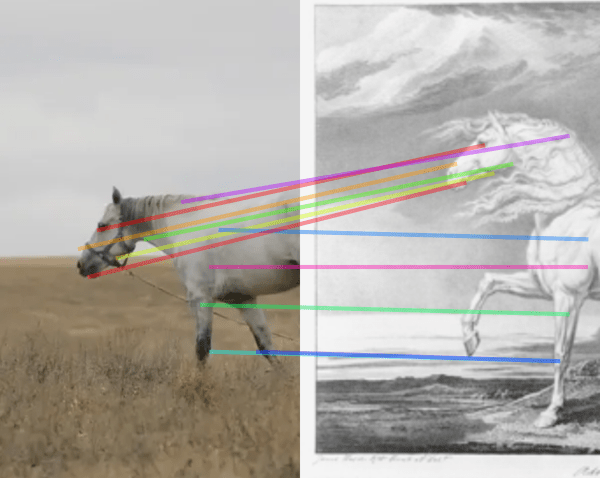

5. Vision Transformers: Revolutionizing Image Understanding Beyond Convolutions

Vision Transformers (ViTs) apply transformer architectures—originally for language—to computer vision, processing images as sequences of patches and using self-attention mechanisms to capture global dependencies. Unlike convolutional neural networks (CNNs) that focus on local features, ViTs analyze entire images holistically, enabling better scalability and interpretability through attention maps that highlight influential regions.
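The two core steps, cutting an image into patches and letting every patch attend to every other, can be sketched in plain Python. This is a deliberately stripped-down illustration: a real ViT adds learned query/key/value projections, positional embeddings, multiple heads, and many stacked layers, and the tiny 4x4 "image" and function names below are assumptions for the example.

```python
import math

def patchify(image, patch):
    """Split an H x W grid into flattened patch vectors, row-major order."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product attention with identity projections.

    Learned query/key/value matrices are omitted to keep the sketch short.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        attn = softmax(scores)
        weights.append(attn)
        outputs.append([sum(a * v[j] for a, v in zip(attn, tokens))
                        for j in range(d)])
    return outputs, weights

# A 4x4 single-channel "image" split into four 2x2 patches.
image = [[0.0, 0.1, 0.9, 1.0],
         [0.1, 0.0, 1.0, 0.9],
         [0.0, 0.1, 0.1, 0.0],
         [0.1, 0.0, 0.0, 0.1]]

tokens = patchify(image, 2)
outputs, attn = self_attention(tokens)

print(len(tokens))                      # number of patch tokens
print([round(w, 2) for w in attn[1]])   # how patch 1 weighs all four patches
```

Each row of `attn` is a distribution over all patches, which is why every output token can draw on the whole image in a single layer; these rows are also the raw material for the attention maps mentioned above.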

Google introduced ViTs in 2020, and follow-up work such as Meta’s DeiT made them more data-efficient to train. Meta’s DINOv2 advances self-supervised variants, while Hugging Face hosts pre-trained ViTs for community use. In industry, NVIDIA integrates them into Omniverse for simulations, and Adobe uses them in creative tools. Research hubs include Google’s Brain team in Mountain View, USA; Oxford University in the UK; and Tsinghua University in Beijing, China, where collaborations with ByteDance apply ViTs to video analysis.

ViTs excel in medical imaging for tumor detection, autonomous vehicles for scene understanding, and manufacturing for defect inspection. In agriculture, they’re deployed for crop monitoring via drones. Adoption is strongest in data-rich environments like US tech labs and European industrial parks, with Asian adoption in surveillance. Popularity rises from superior performance when trained on large datasets, where ViTs outperform CNNs on ImageNet benchmarks, and from efficiency in transfer learning, reducing training costs by 50%. The data explosion from cameras (over 45 billion by 2025) and the need for explainable AI in regulated fields fuel growth. ViTs stay under the public radar because they are a technical evolution, not a new gadget, yet they quietly enhance multimodal AI, integrating with LLMs for image-text tasks. Challenges like high compute needs are easing with efficient variants like Swin Transformers. This trend is transforming visual AI, enabling precise, context-aware systems that mimic human perception, poised to underpin advancements in AR/VR and robotics while staying embedded in backend innovations.
