By Jim Shimabukuro (assisted by Grok*)

Editor

(For fundamentals on data labeling, see Data Labeling Mimics the Way Our Brain Works, Basic Building Blocks for a Learning Model, and Algorithm of an Intentional Heart.)

Introduction: Since March 2023, the field of labeled and unlabeled data processing has experienced substantial growth, driven by the escalating demands of AI and machine learning applications. This expansion is evident in surging market valuations for data labeling services, breakthroughs in semi-supervised and self-supervised learning techniques, and the emergence of synthetic data generation as a key enabler for creating labeled datasets from unlabeled sources. Below, Grok outlines the extent of this growth and highlights notable products, procedures, and services that have arisen or evolved from related research and development.

Market Growth in Data Labeling and Processing

The data labeling industry, which focuses on annotating unlabeled data to make it usable for supervised learning, has seen explosive expansion. This is largely due to the need for high-quality labeled data to fine-tune large AI models amid data scarcity and privacy concerns. Key indicators include:

- The global data labeling solution and services market was valued at approximately USD 2.1–2.5 billion in 2023 and is projected to reach USD 12.8–134 billion by 2032–2034, with compound annual growth rates (CAGRs) ranging from 21% to 28.9%. This growth reflects increased adoption in sectors like healthcare, autonomous vehicles, and e-commerce, where unlabeled data is abundant but labeling costs were historically prohibitive.

- Factors fueling this include the integration of AI-driven automation in labeling processes, reducing manual effort, and the rise of crowdsourcing platforms that handle large-scale unlabeled datasets.

Notable Procedures and Techniques

Advancements have centered on bridging labeled and unlabeled data through innovative learning paradigms, reducing reliance on expensive manual labeling:

- Semi-Supervised Learning (SSL): This approach, which trains models on a small labeled dataset alongside vast unlabeled data, has advanced significantly. Techniques like pseudo-labeling (where models generate labels for unlabeled data), consistency regularization (enforcing model predictions to remain stable under data augmentations), and graph-based methods (modeling data relationships) have been refined. Applications include medical image segmentation (e.g., brain tumor detection), intrusion detection in networks, and species recognition in ecology, where SSL achieves high accuracy with limited labels. Research from 2023–2025 emphasizes SSL’s role in foundation models, with methods like boosting via high/low-confidence predictions and hybrid self/mutual teaching.

- Self-Supervised Learning (Self-SL): Often used for pretraining on unlabeled data (e.g., by predicting masked parts of the input), this has become a cornerstone of scalable AI. It initializes models with robust representations before fine-tuning on labeled data. Key developments include frameworks for medical imaging classification and diabetic retinopathy detection, with reviews highlighting its advantages in data-scarce scenarios. Self-SL is increasingly favored over other unsupervised approaches for its efficiency in capturing common-sense knowledge.

- Synthetic Data Generation: To address labeling bottlenecks, techniques for artificially creating labeled data from unlabeled sources have surged. Methods include generative adversarial networks (GANs), variational autoencoders (VAEs), diffusion models, and large language models (LLMs) for tabular or latent space data synthesis. Advancements from 2023–2025 focus on healthcare (e.g., mimicking medical records while preserving privacy) and AI training (e.g., augmenting datasets with variations to prevent overfitting). This includes automatic label assignment during generation, reducing human errors and enabling endless dataset variations.
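To make the pseudo-labeling idea from the list above concrete, here is a minimal sketch, assuming a toy nearest-centroid classifier on 2-D points. The function names, the softmax-over-distances confidence score, and the 0.9 threshold are all illustrative assumptions, not taken from any of the methods cited in this article. The model labels the unlabeled points it is confident about, adds them to the training set, and refits; ambiguous points stay unlabeled.

```python
import math

def centroids(points, labels):
    """Compute the mean point of each class."""
    sums, counts = {}, {}
    for (x, y), lab in zip(points, labels):
        sx, sy = sums.get(lab, (0.0, 0.0))
        sums[lab] = (sx + x, sy + y)
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: (sx / counts[lab], sy / counts[lab])
            for lab, (sx, sy) in sums.items()}

def predict_with_confidence(point, cents):
    """Return (label, confidence) from a softmax over negative distances."""
    weights = {lab: math.exp(-math.dist(point, c)) for lab, c in cents.items()}
    best = max(weights, key=weights.get)
    return best, weights[best] / sum(weights.values())

def pseudo_label(labeled_x, labeled_y, unlabeled_x, threshold=0.9, rounds=3):
    """Grow the labeled set with confident predictions; return what is left."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        cents = centroids(xs, ys)          # refit on current labeled set
        remaining = []
        for p in pool:
            lab, conf = predict_with_confidence(p, cents)
            if conf >= threshold:
                xs.append(p)               # accept the model's own label
                ys.append(lab)
            else:
                remaining.append(p)        # too uncertain, keep unlabeled
        if len(remaining) == len(pool):    # nothing new was accepted
            break
        pool = remaining
    return xs, ys, pool

# Two labeled points, five unlabeled ones (hypothetical toy data).
xs, ys, leftover = pseudo_label(
    [(0.0, 0.0), (10.0, 10.0)], ["a", "b"],
    [(1.0, 0.0), (0.0, 1.0), (9.0, 10.0), (10.0, 9.0), (5.0, 5.0)],
)
```

In this toy run, the four points near a labeled example are absorbed into the training set, while the point midway between the two classes never clears the threshold. Real systems typically replace the centroid model with a neural network and combine this loop with consistency regularization.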

Notable Products and Services

These have directly stemmed from R&D in labeled/unlabeled data handling:

AI Models (Developments Through August 2025)

Building on the foundational advancements in labeled and unlabeled data processing, the AI models highlighted below have seen significant updates since 2024, incorporating enhanced self-supervised and semi-supervised techniques, larger-scale pretraining on unlabeled data, and improved fine-tuning efficiencies. These evolutions reflect ongoing efforts to minimize reliance on manually labeled datasets while boosting multimodal capabilities and real-world applicability. Here’s an updated overview, incorporating developments up to August 2025.

- I-JEPA (Meta, June 2023 onward): A self-supervised model inspired by Yann LeCun’s vision for human-like AI, I-JEPA learns abstract representations from unlabeled images by predicting masked regions in representation space, enabling efficient downstream tasks with minimal labeled fine-tuning. It has since grown into the JEPA family. V-JEPA (February 2024) is a non-generative video model that predicts missing parts of a video in an abstract space, framed by Meta as a step toward advanced machine intelligence. In June 2025, Meta released V-JEPA 2, reporting state-of-the-art visual understanding and prediction, zero-shot robot control, and improved physical world modeling from unlabeled inputs. This progression emphasizes predictive architectures, with applications in robotics and video analysis.

- GPT-4 and Variants (OpenAI, March 2023 onward): GPT-4 launched around the reference period. GPT-4o (May 2024) extended it to multimodal processing, using self-supervised pretraining on vast unlabeled text, image, and audio data, with labeled data reserved for alignment. OpenAI has since rolled out multiple releases. In February 2025, GPT-4.5 (codenamed Orion) was introduced as a research preview. A March 2025 update to GPT-4o improved instruction-following, coding, and collaboration. In April 2025, GPT-4.1 was released in the API with a 1 million token context window, and GPT-4.5 was scheduled for API deprecation in July 2025 to encourage migration. Also in April 2025, OpenAI introduced the o3 and o4-mini reasoning models, with o3-pro becoming available in June 2025 for API and ChatGPT users. These variants continue to scale multimodal capabilities while relying mainly on unlabeled data for pretraining.

- Llama Series (Meta, July 2023–2025): Open-weight models such as Llama 2, Llama 3 (April 2024), and Llama 3.1 (July 2024) use self-supervised pretraining on vast unlabeled corpora, followed by supervised fine-tuning on labeled data for tasks like chat and code generation. Meta unveiled Llama 4 in April 2025. The series includes Llama 4 Scout (17B active parameters with 16 experts), Llama 4 Maverick (17B active parameters with 128 experts), and Llama 4 Behemoth (previewed while still in training), all natively multimodal mixture-of-experts models with long context windows. Pretrained on large unlabeled datasets, these models target chat, code generation, and multimodal reasoning, and their open availability promotes broader adoption in semi-supervised applications. Meta’s focus on efficiency and multimodality has positioned Llama 4 as a prominent open-weight model family.

- Gemini (Google, December 2023 onward): A multimodal foundation model family pretrained with self-supervised techniques on unlabeled text, images, and audio, Gemini has advanced rapidly. At I/O in May 2025, Google showcased Gemini 2.5, including Gemini 2.5 Pro with an enhanced reasoning mode called “Deep Think” for complex tasks, and Gemini 2.5 Flash, optimized for speed and efficiency. By August 2025, updates to Gemini Live made it more expressive, visually aware, and integrated with Google apps, improving applications in search and assistants. Additional releases include the Gemini 2.5 Flash Image preview (August 26, 2025) for native image generation. These iterations emphasize scalable processing of diverse data types.

- SEMISE (2025 research prototype): A representation learning method combining self-supervised and supervised elements for medical imaging, aimed at improving severity prediction with limited labels. It appears to remain at the prototype stage as of August 2025; no major commercial releases or widespread updates were identified, though it aligns with broader trends in semi-supervised learning for healthcare. Ongoing research on similar approaches, such as using large models to generate labels for unlabeled data in cybersecurity and healthcare, suggests potential integration into future prototypes.
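Several of the models above are pretrained by hiding part of the input and predicting it from the rest, so the training targets come from the data itself rather than from human annotators. The sketch below illustrates only that idea, assuming a toy count-based predictor over words. It is a deliberately simple stand-in, not how JEPA, GPT, Llama, or Gemini are implemented, and the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

def build_masked_examples(sentences):
    """Mask each inner word and pair it with its (left, right) neighbors."""
    examples = []
    for sentence in sentences:
        tokens = sentence.split()
        for i in range(1, len(tokens) - 1):
            context = (tokens[i - 1], tokens[i + 1])
            examples.append((context, tokens[i]))  # target is the hidden word
    return examples

def fit(examples):
    """Count which word was hidden in each context."""
    table = defaultdict(Counter)
    for context, target in examples:
        table[context][target] += 1
    return table

def fill_mask(table, left, right):
    """Predict the most frequent word seen between `left` and `right`."""
    counts = table.get((left, right))
    if not counts:
        return None  # context never observed during pretraining
    return counts.most_common(1)[0][0]

# Unlabeled text only: no human wrote any labels for this corpus.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "a cat sat on a mat",
]
examples = build_masked_examples(corpus)
table = fit(examples)
guess = fill_mask(table, "cat", "on")
```

Every (context, target) pair here was manufactured from raw text, which is why this style of pretraining scales to corpora far larger than anything that could be labeled by hand.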

Services and Platforms (Developments Through August 2025)

Advancements in services and platforms for labeled and unlabeled data processing have accelerated since 2024, fueled by the data labeling market’s expansion from USD 18.63 billion in 2024 to a projected USD 57.63 billion by 2030 (CAGR of 20.3%) and the synthetic data generation market’s growth from USD 0.51 billion in 2025 to USD 2.67 billion by 2030 (CAGR of 39.40%). These platforms increasingly integrate AI-assisted labeling, privacy-enhancing technologies, and open-source tools to handle unlabeled data at scale, addressing challenges like data scarcity and compliance in AI training.
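As a quick sanity check on figures like these, the compound annual growth rate can be recomputed from the start value, end value, and number of years using the standard formula (end / start) ** (1 / years) - 1. The sketch below assumes the base years stated in the paragraph above (2024 to 2030 and 2025 to 2030); market reports often use a different base year or rounding, so small gaps between the implied and quoted rates are expected.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.20 means 20% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted in the paragraph above, in USD billions.
labeling_rate = cagr(18.63, 57.63, 2030 - 2024)   # data labeling market
synthetic_rate = cagr(0.51, 2.67, 2030 - 2025)    # synthetic data market

print(f"Data labeling: {labeling_rate:.1%} per year")
print(f"Synthetic data: {synthetic_rate:.1%} per year")
```

Under these assumptions the implied rates come out at roughly 21% and 39% per year, close to the quoted 20.3% and 39.40%.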

Data Labeling Services: The sector has seen robust growth, with the global data collection and labeling market valued at USD 3.77 billion in 2024 and projected to reach USD 17.10 billion by 2030 (CAGR of 28.4%). Companies like Scale AI, Labelbox, and Appen have expanded, while Toloka (the publisher of the referenced blog) has enhanced its crowdsourcing for labeling unstructured data. Providers are adding AI-assisted tools that use semi-supervised learning (SSL) to semi-automate labeling, while expanding into emerging markets and generative AI applications.

- Scale AI: Following a $1 billion Series F in May 2024 that doubled its valuation to $13.8 billion, Scale secured a $14.3 billion investment from Meta in June 2025. It then laid off 14% of staff in July 2025, primarily in data labeling, amid operational adjustments. Revenue hit $870 million in 2024, with projections of $2 billion in 2025. Key partnerships include work with the Center for AI Safety on benchmarks like Humanity’s Last Exam (January 2025), alongside an emphasis on SSL and synthetic data integration for defense and multimodal AI.

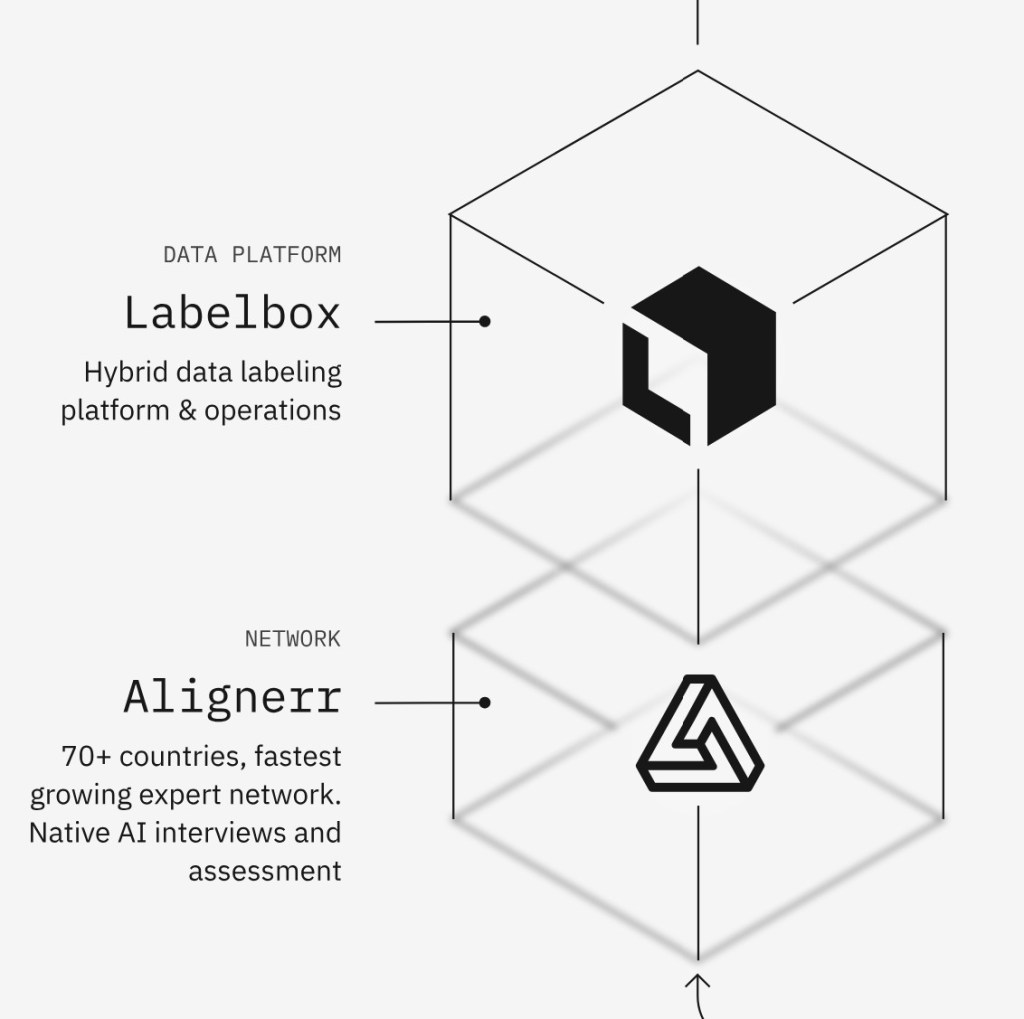

- Labelbox: Platform updates in 2025 include multi-modal chat editor enhancements like built-in code runners (January), z-ordering for annotations, and new Foundry models (e.g., Amazon Nova, OpenAI GPT-4o in June). A form-based UI and minimap for error navigation were added in May, improving SSL workflows for medical imaging and video projects. Revenue reached $50 million in 2024, with Q1 2025 innovations like expanded Leaderboards and Alignerr Connect for enterprise labeling.

- Appen: The 2024 State of AI Report highlighted a 10% increase in bottlenecks for sourcing and labeling data, driving adoption of its AI Data Platform (ADAP). Shares surged 24% in May 2025 as the company recovered from a collapsed Google deal. Appen focuses on data-centric AI for text, audio, and image modalities, and reports generative AI adoption up 17% but facing ROI challenges. Its outlook emphasizes high-quality labeled data for LLMs.

- Toloka: Sold Russian operations in 2024 to focus on global generative AI, including expert labeling for RLHF and model fine-tuning. Raised $72 million in May 2025 to expand data solutions blending human expertise and automation. Enhanced crowdsourcing for African markets, with new tools for synthetic data curation and AI safety, supporting unlabeled data processing in domains like coding and agentic skills.

Synthetic Data Platforms: This segment is booming, with the market expected to hit USD 3.7 billion by 2030 (CAGR of 41.8%), driven by privacy needs and AI training demands. Platforms now emphasize multimodal generation, open-source tools, and integration with LLMs for creating labeled datasets from unlabeled sources. Startups like Syntheticus, Gretel, and Mostly AI have gained traction, offering tools for generating privacy-preserving labeled datasets. For instance, platforms using LLMs for synthetic data creation (e.g., for code or NLP) have proliferated since 2023. Use cases include finance (portfolio simulations) and healthcare (synthetic patient records).

- Syntheticus: Continues as an enterprise-grade SaaS platform for GPU-accelerated synthetic data, focusing on privacy-enhancing technologies like generative AI and differential privacy. No major 2025 releases noted, but aligns with market trends in compliant data for analytics and AI, supporting use cases in healthcare and finance.

- Gretel: Acquired by Nvidia in March 2025 for a reported sum exceeding $320 million, to boost synthetic data for AI/LLMs. Gretel released what it described as the world’s largest open-source text-to-SQL dataset in April 2024; 2025 updates include enhanced APIs for fine-tuning and privacy guarantees. Synthetic data was predicted to go mainstream in 2025 as a way to address data scarcity in enterprise AI, with tools for tabular and text synthesis in sensitive sectors.

- Mostly AI: Launched an open-source SDK in January 2025 for industry-grade synthetic data generation, followed by a $100K prize challenge in June to promote open-access datasets. Introduced synthetic text tools in October 2024 for unlocking proprietary data in AI training, like emails for chatbots. Revenue growth supports privacy-safe data for finance and healthcare, with enterprise editions for mid-market testing.

- Emerging platforms like Syntho and Synthesized have gained traction in 2024-2025 for self-service AI-generated data, while startups such as Datumo (raised $15.5M in August 2025) challenge incumbents with LLM evaluation tools, expanding synthetic options for unlabeled data augmentation.
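The core appeal of the synthetic data platforms above is that labels are assigned at generation time, so no one has to annotate the output. Here is a minimal sketch of that idea, assuming a one-feature dataset and a per-class Gaussian fit. This is a simple stand-in for the GANs, VAEs, diffusion models, and LLMs the platforms actually use, and it offers no privacy guarantee on its own. The seed records and function names are hypothetical.

```python
import random
import statistics

# Hypothetical seed records: (measurement, label). Not real patient data.
SEED_RECORDS = [
    (61.0, "mild"), (64.0, "mild"), (66.0, "mild"), (63.0, "mild"),
    (88.0, "severe"), (92.0, "severe"), (95.0, "severe"), (90.0, "severe"),
]

def fit_class_gaussians(records):
    """Estimate (mean, standard deviation) of the feature for each label."""
    by_label = {}
    for value, label in records:
        by_label.setdefault(label, []).append(value)
    return {label: (statistics.mean(vals), statistics.stdev(vals))
            for label, vals in by_label.items()}

def generate(params, n_per_label, seed=0):
    """Sample new records; each one carries the label it was drawn for."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    synthetic = []
    for label, (mu, sigma) in params.items():
        for _ in range(n_per_label):
            synthetic.append((rng.gauss(mu, sigma), label))
    return synthetic

params = fit_class_gaussians(SEED_RECORDS)
synthetic = generate(params, n_per_label=500, seed=1)
```

Eight seed records become a thousand labeled ones, each resembling its class without copying any original row. Production tools add the pieces this sketch omits, such as multi-column correlations and formal privacy mechanisms like differential privacy.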

Integrated Tools: These have evolved to incorporate advanced SSL, synthetic generation, and no-code interfaces, enabling users to turn unlabeled data into labeled formats for foundation models at scale. Examples include Hugging Face’s datasets library and AWS SageMaker Ground Truth.

- Hugging Face Datasets Library: By August 2025, the library hosts over 281,000 datasets, with updates including AI Sheets (August 2025), a free open-source no-code toolkit for LLM-powered dataset creation. Releases through July 2025 enhanced data manipulation for AI models, supporting tasks like text-to-SQL and multimodal processing via efficient SSL techniques.

- AWS SageMaker Ground Truth: While core features remain focused on human-in-the-loop labeling, integrations with broader SageMaker updates in 2024-2025 emphasize synthetic data generation (building on 2022 capabilities) for cost reduction up to 70%. Enhanced for high-traffic ML workflows, with pricing tied to usage and support for automated labeling in audio/video tasks.

Overall, these developments highlight a maturing ecosystem where services reduce labeling costs by up to 90% through synthetics and SSL, while tackling ethical issues like data quality and bias in fast-evolving AI landscapes.

__________

* Prompt: “The difference between labeled and unlabeled data” was published a little over two years ago in March 2023. Since then, to what extent has labeled and unlabeled data processing grown? That is, what are some notable products, procedures, and services that have grown out of this research and development?
