By Jim Shimabukuro (assisted by DeepSeek)

Editor

Introduction: The hardware for each is optimized for vastly different workloads. The core difference is that desktop GPUs (Graphics Processing Units) are designed for high frame rates in a single application (a game) on a single machine, while data center GPUs (like those in xAI’s cluster) are designed for high throughput in massively parallel computations across thousands of machines. Here’s a detailed breakdown, referencing the most relevant hardware.

Referenced GPUs

Key Differences Between Desktop and Data Center GPUs

1. Primary Purpose and Optimization

- Desktop GPU (e.g., RTX 4090): Optimized for low-latency rendering. Its goal is to take a set of inputs (game state, player view) and generate a single, perfectly rendered frame as quickly as possible. It then immediately moves on to the next frame. This sequential, real-time processing is paramount for a smooth gaming experience.

- Data Center GPU (e.g., H100): Optimized for high-throughput computing. Its goal is to perform the same mathematical operation (like a matrix multiplication) on a massive batch of data simultaneously. It doesn’t care about the latency of a single operation; it cares about how many trillions of operations (TeraFLOPs) it can complete per second. Training a large language model is a massively parallel task, not a sequential one.

2. Memory: Capacity and Type

- Desktop GPU (RTX 4090): 24 GB of GDDR6X. This VRAM is very fast for gaming textures and framebuffers but is error-prone. A single bit-flip might cause a visual artifact in a game, which is acceptable.

- Data Center GPU (H100):80 GB of HBM3 (High Bandwidth Memory). This is a huge difference.

- Capacity: Large AI models are many gigabytes in size and must fit entirely into VRAM to be trained efficiently. 80GB+ is often a minimum requirement.

- Technology: HBM is stacked vertically on the same package as the GPU die, providing immensely higher bandwidth (over 3 TB/s on the H100 vs. ~1 TB/s on the RTX 4090). This is critical for feeding the vast number of cores with data.

- ECC (Error Correcting Code): Data center memory features ECC. A single bit-flip during a weeks-long AI training run could corrupt the entire model. ECC finds and corrects these errors on the fly, ensuring computational integrity.

3. Interconnect: Linking Multiple GPUs

This is one of the most critical differentiators for clusters like xAI’s Colossus.

- Desktop GPU (RTX 4090): Uses PCIe (Gen 4 or 5) to connect to the CPU. To link multiple GPUs, it uses NVIDIA NVLink (3rd gen, 100+ GB/s) or, more commonly, SLI (which is now effectively dead). This is a bottleneck for parallel computing.

- Data Center GPU (H100): Features a dedicated, ultra-high-speed interconnect called NVLink. The 4th-gen NVLink on the H100 provides a staggering 900 GB/s of bidirectional bandwidth per GPU. This allows hundreds or thousands of GPUs to act like one giant, cohesive compute unit, essential for distributed training. They also use specialized networking like Infiniband.

4. Precision and Specialized Cores

- Desktop GPU (RTX 4090): Excels at FP32 (32-bit floating point) precision, which is the standard for game graphics. Its AI cores (Tensor Cores) are powerful but are primarily used for DLSS (a graphics upscaling technology) in gaming.

- Data Center GPU (H100): Built for scientific and AI computation. It has massive arrays of specialized cores for lower-precision math, which is faster and more efficient for AI.

- TF32 and FP16: Accelerates AI training.

- FP8: A new precision format on the H100 specifically for ultra-efficient AI inference (running the trained model).

- FP64: Strong double-precision (64-bit) performance for scientific simulations, which is largely absent on gaming cards.

5. Form Factor, Power, and Cooling

- Desktop GPU (RTX 4090): A large 3-4 slot card designed to fit in a PC case. It draws 450-600W and is air-cooled with large fans.

- Data Center GPU (H100): Almost exclusively comes in a SXM form factor. These are bare boards that slot directly into a specialized server motherboard (like NVIDIA’s DGX systems), allowing for better power delivery and space efficiency. They are designed for dense, rack-scale deployment with centralized, powerful liquid cooling systems. An H100 SXM can draw 700W.

Summary Table

| Feature | Desktop GPU (NVIDIA RTX 4090) | Data Center GPU (NVIDIA H100) |

|---|---|---|

| Primary Goal | Low-Latency Rendering (High FPS) | High-Throughput Compute (TeraFLOPs) |

| Memory | 24 GB GDDR6X | 80 GB HBM3 with ECC |

| Memory Bandwidth | ~1 TB/s | ~3.35 TB/s |

| GPU Interconnect | PCIe, NVLink (3rd Gen, ~100 GB/s) | NVLink (4th Gen, 900 GB/s) |

| Key Precision | FP32 (Graphics) | TF32/FP16/FP8 (AI), FP64 (Scientific) |

| Form Factor | PCIe Card (Air Cooled) | SXM Module (Liquid Cooled) |

| Typical Use Case | Gaming, VR, Content Creation | AI Training, HPC, Scientific Simulation |

Conclusion for xAI’s Colossus

A cluster like xAI’s Colossus uses tens of thousands of H100 GPUs (and their upcoming successor, the B100) because it is a single, massive computer designed for one task: training and running enormous AI models. The H100’s immense memory bandwidth, fast interconnects (NVLink/Infiniband), and specialized AI cores allow these GPUs to work in perfect harmony on a problem that is far too large for any desktop GPU, or even a rack of them.

You cannot build a similar cluster with RTX 4090s because the communication bottlenecks between the cards would be so severe that adding more GPUs would actually make performance worse. The data center GPU is engineered from the ground up to avoid this problem.

Physical Size Difference

The physical size difference is dramatic and directly related to their function. The most common form factors for these GPUs are:

- Desktop GPU (NVIDIA GeForce RTX 4090): The Founders Edition model.

- Data Center GPU (NVIDIA H100): The SXM5 module form factor (the standard for dense servers, not the PCIe card version).

Here is a direct comparison of their physical dimensions and weight.

Physical Size Comparison

| Measurement | NVIDIA GeForce RTX 4090 (Desktop) | NVIDIA H100 SXM5 (Data Center) | Note |

|---|---|---|---|

| Height | 5.4 in / 137 mm | 4.4 in / 112 mm | The H100 SXM is actually shorter. |

| Length | 12.0 in / 304 mm | 16.8 in / 427 mm | The H100 is significantly longer*. |

| Width (Thickness) | 3.0 in / 61 mm (3-Slot) | 1.6 in / 41 mm | The H100 SXM is a bare board, so it’s much thinner. |

| Weight | 4.6 lbs / 2.1 kg | ~7.7 lbs / ~3.5 kg | The H100 is much heavier for its size due to its dense components. |

Visualizing the Difference

While the H100 SXM module is shorter and thinner, its much greater length* and significantly heavier weight make it a far larger component in terms of overall volume and density.

- The RTX 4090 is a massive consumer card designed to fit (with some difficulty) into a standard PC case. Its large cooler with fans and heat pipes accounts for most of its volume and weight.

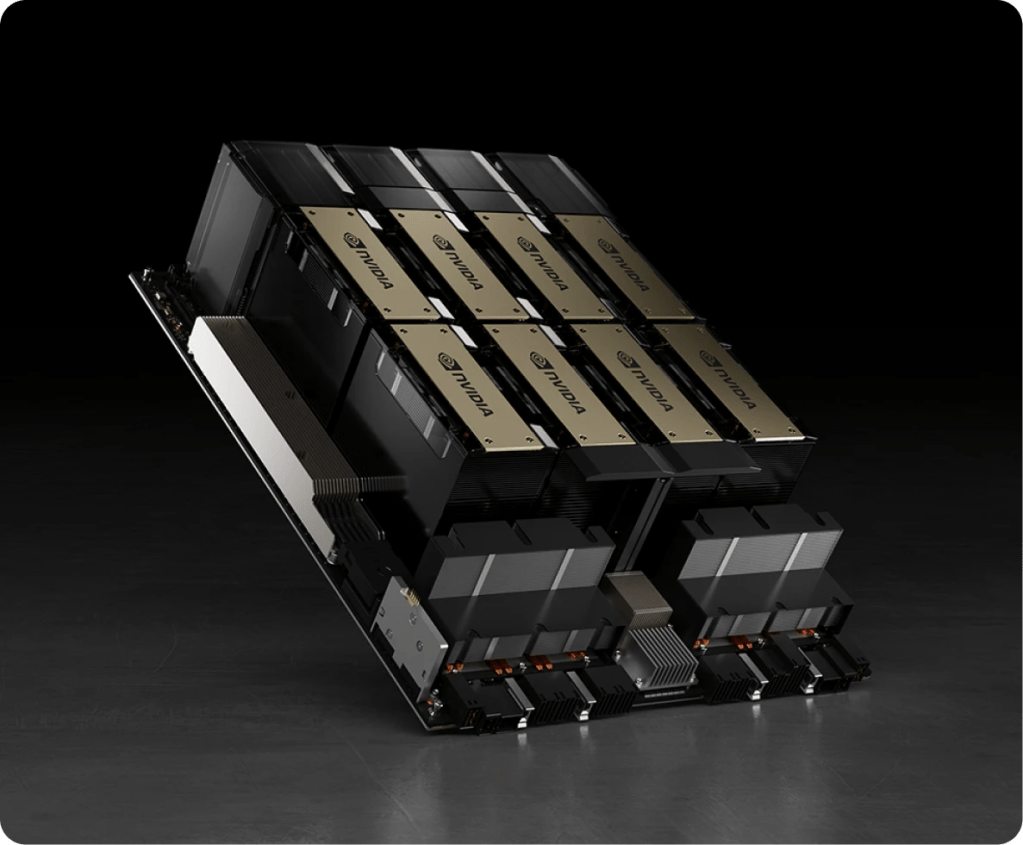

- The H100 SXM is not a standalone card. It’s a compute module—essentially the GPU die and its massive HBM3 memory stacks mounted directly onto a board. It has no fans or onboard cooling of its own. It is designed to be inserted into a specialized server tray where eight of these modules are liquid-cooled by a single, massive cold plate and cooled by centralized fans in the server rack.

Why is the H100 SXM so long* if it’s just the board?

The length* is necessary to accommodate:

- The massive GH100 GPU die itself.

- The six stacks of HBM3 memory surrounding it.

- The complex voltage regulation modules (VRMs) and circuitry needed to deliver up to 700 watts of power reliably.

- The NVLink connector at the top of the board that allows it to communicate directly with another H100 at incredible speed.

The Bigger Picture: How They Are deployed

This size comparison tells only half the story. The real physical difference is seen in how they are used:

- A Desktop GPU (RTX 4090): Plugs into one PCIe slot in your computer.

- Data Center GPUs (H100 SXM): Are deployed in groups of eight on a single motherboard (like in the NVIDIA DGX H100 server). These servers are then stacked in a rack, with shared power and liquid cooling. A full rack can contain 32 H100 GPUs, and systems like xAI’s Colossus use thousands of them.

In summary, while the H100 SXM module itself isn’t “thicker” than a gaming GPU, it is longer, denser, heavier, and designed to be integrated into a massively scalable system that is orders of magnitude larger than a desktop PC.

__________

* The H100 SXM5 pictured in this article is a different model with a different form factor.

Filed under: Uncategorized |

Leave a comment