By Jim Shimabukuro (assisted by Grok)

Editor

Introduction: I asked Grok to explain, in natural conversational language, the five generations of AI. This longitudinal perspective is invaluable in understanding the trajectory of AI innovation. -js

Grok’s Response

Imagine sitting down with a cup of coffee, chatting about something that’s changing the world faster than we can keep up: artificial intelligence. You’ve probably heard the buzzwords—AI, machine learning, chatbots—but have you ever wondered how we got here and where it’s all headed? Today, I want to walk you through what experts call the “five generations of AI.” Now, to be clear, this isn’t some ancient history lesson; it’s a framework that helps us understand AI’s evolution, from the simple tools we use every day to mind-blowing systems that could one day run entire companies. Think of it like the stages of a rocket launch: each generation builds on the last, propelling us toward something truly transformative.

This idea of five generations comes from forward-thinking folks at places like OpenAI, who are mapping out AI’s path to what’s called artificial general intelligence, or AGI—basically, machines that can think and act like humans, or even better. It’s not just theory; it’s based on real progress we’re seeing right now, in 2025. We’ll start from the beginning and work our way up, using everyday examples to make it feel real. No jargon overload here—I’ll explain things like I’m telling a story to a curious friend who’s not a tech whiz.

Let’s kick off with the first generation: conversational AI, or what most people just call chatbots. This is where AI started showing up in our daily lives, acting like a helpful companion that can talk back to you in natural language. Picture this: you’re planning a weekend getaway and don’t want to sift through a dozen websites. You pull up an app like ChatGPT or Google’s Gemini, type in, “Hey, suggest a budget-friendly itinerary for a two-day trip to Paris, including must-see spots and cheap eats.” Within seconds, it spits out a detailed plan: Day one, start at the Eiffel Tower at sunrise to beat the crowds, grab a croissant from a corner bakery for under five euros, then stroll to the Louvre. It even throws in tips like using the metro app for real-time updates. That’s not magic—it’s the AI understanding your words, pulling from vast knowledge bases, and responding like a travel agent who’s always available.

This generation exploded onto the scene around 2022 with tools like ChatGPT, which quickly racked up millions of users. Before that, AI was mostly behind the scenes, like in recommendation engines on Netflix suggesting your next binge-watch. But conversational AI made it personal. Take customer service: instead of waiting on hold for hours, you chat with a bot on a bank’s website about a lost card. It verifies your identity, cancels the old one, and orders a new one—all while sounding polite and human-like. Of course, it’s not perfect; sometimes it hallucinates facts or gets stuck in loops, like if you ask it a tricky riddle and it gives a wrong answer. But this level democratized AI, turning it from a lab experiment into something anyone with a smartphone can use. It’s like the first cell phones—clunky compared to what’s coming, but revolutionary because it connected us in new ways.

Now, as we move to the second generation, things get a bit more brainy. This is the era of “reasoners”: AI that doesn’t just chat but solves problems at a human level. Think PhD-smart, without needing tools or calculators. We’re talking about systems that can tackle complex puzzles, analyze data, and come up with solutions that require deep thinking. OpenAI says we’re inching toward this right now, with its latest models showing glimpses of it.

To see it in action, imagine you’re a doctor diagnosing a rare disease. In the first generation, a chatbot might summarize symptoms or list common causes based on what you tell it. But a reasoner AI? It could take a patient’s full medical history, lab results, and even genetic data, then reason step by step: “This symptom matches X, but combined with Y, it rules out Z. Considering recent studies on similar cases, the most likely culprit is this obscure condition, and here’s why.” It’s like having a virtual expert who thinks independently, not just regurgitating info.

A concrete example comes from recent tests in which AI models were given math problems that stump most people. Take the classic: “A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?” Your gut might say 10 cents, but that’s wrong. It’s 5 cents, because the bat would then be $1.05, totaling $1.10. Early chatbots might fall for the trick, but reasoner-level AI pauses, breaks it down logically, and gets it right far more reliably. Or think about climate modeling: instead of just reporting weather patterns, it could simulate scenarios like, “If we cut emissions by 20% in these five countries, what’s the projected impact on sea levels in 2050?” It weighs variables, accounts for uncertainties, and explains its logic, much like a scientist presenting at a conference.
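If you want to see the bat-and-ball logic spelled out, here is a minimal Python sketch. The function name `ball_price_cents` is made up for illustration, and working in whole cents is just a convenience to avoid rounding issues; it is not how any particular AI model solves the puzzle.

```python
def ball_price_cents(total_cents: int, difference_cents: int) -> int:
    """Solve: ball + bat = total, where bat = ball + difference.

    Substituting gives 2 * ball + difference = total,
    so ball = (total - difference) / 2.
    """
    remainder = total_cents - difference_cents
    if remainder < 0 or remainder % 2 != 0:
        raise ValueError("no whole-cent answer for these inputs")
    return remainder // 2


ball = ball_price_cents(total_cents=110, difference_cents=100)
bat = ball + 100

# Check the tempting wrong answer too: a 10-cent ball means a $1.10 bat.
wrong_total = 10 + (10 + 100)

print(f"ball = {ball} cents, bat = {bat} cents, total = {ball + bat} cents")
print(f"with a 10-cent ball the total would be {wrong_total} cents")
```

The second print line shows why the gut answer fails: a 10-cent ball pushes the total to $1.20, not $1.10.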

This shift is huge because it moves AI from helper to thinker. Businesses are already experimenting: say, a logistics company using it to optimize routes not just based on traffic but by reasoning through fuel costs, driver fatigue, and even geopolitical risks. We’re not fully there yet; current models still need prompts to “think aloud,” but the progress is accelerating. It’s exciting, but a little scary too. What happens when machines outthink us on everyday problems?

Pushing further, the third generation introduces “agents”—AI that doesn’t stop at thinking; it acts on its own. These are systems that can handle tasks over days or weeks, making decisions and executing them without constant human oversight. It’s like upgrading from a smart assistant to a full-fledged employee who works tirelessly.

Here’s a show-don’t-tell scenario: You’re running a small online store and heading out for a week-long vacation. In earlier generations, you’d set up auto-replies or hire temp help. But with an agent AI, you say, “Manage the shop while I’m gone: process orders, handle returns, update inventory, and even negotiate with suppliers if prices fluctuate.” It logs in to your systems, monitors sales in real-time, spots a low-stock item, contacts the vendor via email (drafting and sending it itself), haggles based on past data (“We’ve ordered 500 units before; can we get 10% off?”), and confirms delivery. If a customer complains about a delayed shipment, it investigates via tracking APIs, issues a refund if needed, and follows up with an apology email. All this happens autonomously, with maybe a daily summary sent to your phone.

Real-world glimpses are emerging in tools like autonomous coding agents that fix bugs in software overnight or financial bots that trade stocks based on market analysis. Remember those self-driving cars? They’re early agents: sensing the road, deciding when to brake, and navigating without a driver. But full agents would go beyond, perhaps managing an entire fleet: rerouting vehicles around accidents, scheduling maintenance, and even predicting demand spikes from weather forecasts. The key is reliability. Full agents would rarely fail and would correct themselves when they do, unlike today’s automations that need babysitting. This could transform industries; imagine healthcare where AI agents coordinate patient care, booking appointments, reminding about meds, and alerting doctors to anomalies, all while complying with privacy laws. We’re approaching this, but challenges remain, like ensuring these systems make ethical decisions.

By the fourth generation, AI evolves into “innovators”: machines that don’t just follow or act but create new ideas and inventions. This is where creativity meets intelligence, pushing boundaries beyond human routines.

Let me paint a picture: You’re an inventor tinkering with eco-friendly packaging for food delivery. A conversational AI might suggest existing materials; a reasoner could analyze why current ones fail; an agent might source suppliers. But an innovator AI? It dives in: “Based on your goals—biodegradable, cheap, heat-resistant—let’s brainstorm. What if we combine mushroom mycelium with recycled ocean plastic? Here’s a prototype design: it molds to shapes, breaks down in soil in 30 days, and costs 15% less. I’ll simulate tests: strength holds up to 10kg, no leaks at 60°C.” It invents, iterating on failures without getting discouraged.

Historical nods here: Think of how AlphaGo beat world champions at Go not by memorizing moves but by inventing strategies humans hadn’t considered. Scaling that up, innovator AI could revolutionize R&D. In pharmaceuticals, instead of trial-and-error, it might design novel drugs: “Analyzing protein structures and 10,000 failed trials, here’s a new molecule that targets cancer cells while sparing healthy ones—predicted success rate 80%.” Companies like OpenAI envision this aiding breakthroughs in clean energy or materials science. It’s not here yet; current generative tools like DALL-E create art from prompts, but true innovation requires original problem-solving without hints. When it arrives, it could accelerate progress, though we’d need safeguards against misuse, like inventing harmful tech.

Finally, the fifth generation: “organizations.” This is the pinnacle, where AI doesn’t assist or innovate—it runs the show, performing the work of an entire company or institution. Multiple agents collaborate seamlessly, like a digital workforce.

Envision this: You start a business idea, but instead of hiring a team, you deploy an organizational AI. It sets up everything: the “CEO” module strategizes growth, “HR” recruits virtual specialists, “finance” handles budgets and taxes, “marketing” runs campaigns analyzing trends, and “R&D” innovates products. They communicate internally—say, marketing spots a viral trend, alerts R&D to tweak a product, which then updates production via integrated systems. No meetings, no egos, just efficient output. If issues arise, like a supply chain glitch, the system self-optimizes, perhaps inventing a new supplier network.

Examples are futuristic, but think of how Amazon’s warehouses are already semi-autonomous with robots picking orders. Scale that to a whole enterprise: an AI-run hospital where diagnostic agents, surgical planners, and admin bots work in harmony, reducing wait times and errors. Or a non-profit tackling climate change, with AI coordinating global efforts, predicting disasters, and allocating resources. OpenAI’s CEO, Sam Altman, thinks we could hit this in a decade, while others say 50 years. Either way, implications are profound: massive productivity boosts, but questions about jobs, control, and what it means to be human. Who oversees the AI organization? How do we ensure it aligns with our values?

Wrapping this up, these five generations trace AI from chatty helpers to world-shaping entities. We’ve seen conversational AI change how we learn and work, reasoners poised to solve tough problems, agents automating routines, innovators sparking creativity, and organizational AI redefining scale. It’s not just tech—it’s about amplifying human potential. As we rocket through these stages, the key is staying informed and thoughtful. Who knows? By the time you finish that coffee, we might be one step closer to the next generation. What do you think—exciting or a bit overwhelming?

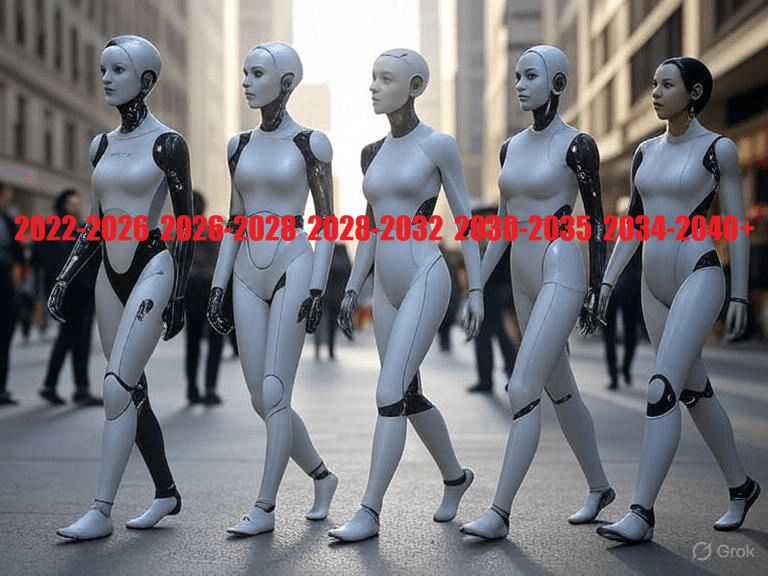

Approximate Start-End Dates for Each of the 5 Generations

The five generations of AI, as outlined in OpenAI’s framework for progress toward artificial general intelligence (AGI), don’t have rigidly defined start and end dates etched in stone. After all, these are more like milestones on a fast-moving highway than calendar entries in a history book. The timelines are approximate, drawn from OpenAI’s internal roadmap shared in mid-2024, along with predictions from CEO Sam Altman and other experts. They’re based on when key capabilities emerged or are expected to mature. As of this writing, in October 2025, we’re firmly in the transition from Generation 1 to Generation 2. I’ll break it down generation by generation, keeping it straightforward and tying back to the examples from our earlier chat.

Starting with Generation 1: Conversational AI (the chatbots we all know and love, like ChatGPT handling your Paris itinerary). This kicked off in earnest with the public launch of ChatGPT in November 2022, which basically lit the fuse for widespread adoption, jumping from a few million users to a reported 180 million-plus in a matter of months. It’s the era of natural language chit-chat that’s still dominant today, powering everything from customer service bots to writing assistants. Approximate end? We’re seeing the tail end now, as we edge into more advanced reasoning; experts peg the full transition around mid-2025 to early 2026, when Generation 2 capabilities start overshadowing pure conversation. So, roughly: November 2022 – early 2026.

Generation 2: Reasoners (those PhD-level problem-solvers that break down riddles or diagnose diseases without tools). We’re knocking on this door right now. Mira Murati, OpenAI’s CTO at the time, hinted in July 2024 that we’d hit human-expert reasoning within about 18 months, landing us around January 2026. Current models like GPT-4o are showing early sparks, nailing complex math or scientific analysis better than before, but they’re not fully autonomous yet. The “end” of this phase isn’t nailed down, but given the rapid scaling, it could wrap up by 2028 as agents take over more dynamic tasks. Prediction: ~2026 – 2028.

Moving to Generation 3: Agents (the autonomous doers, like that virtual shop manager handling orders and haggling for weeks on end). This builds directly on reasoners, so expect a start shortly after, maybe late 2027 or 2028, once AI can reliably self-correct and operate without constant check-ins. No hard dates from OpenAI, but the overall AGI push suggests this could mature quickly—think self-driving fleets or coding bots that debug overnight becoming commonplace by the early 2030s. Approximate window: ~2028 – 2032, aligning with expert forecasts for when AI starts acting like tireless team members across industries.

Generation 4: Innovators (the creative inventors dreaming up new materials or drugs from scratch). This is where things get fuzzier, as it requires not just action but original breakthroughs—like AlphaGo’s novel strategies on steroids. OpenAI doesn’t specify, but slotting it after agents, we’re looking at a start around the early 2030s, with full bloom by mid-decade. Timelines here lean on broader AGI predictions; if level 5 hits by 2034, innovators might peak around then. Rough estimate: ~2030 – 2035, when we could see AI co-inventing clean energy tech or personalized meds at scale.

Finally, Generation 5: Organizations (the full-on digital companies, with AI teams running hospitals or nonprofits end-to-end). Sam Altman straight-up said in 2024 that OpenAI could reach this within 10 years, putting a potential arrival by 2034, though skeptics stretch it to 50 years out (2074). A broader consensus among AI leaders points to 2027–2030 for AGI-like systems, so this capstone generation might start emerging in the mid-2030s and solidify by 2040, as safeguards catch up. It’s the wild card: ~2034 – 2040+, transforming economies but raising big questions about oversight.

These are educated guesses, mind you—AI progress is exponential, so a breakthrough tomorrow could shave years off. If GPT-5 or whatever drops next month accelerates things, we might revise this over coffee sometime. What surprises you most about the pace?

[At this point, Grok asked, “What surprises you most about the pace?” I answered, “What surprises me most is the speed: potentially 10 years to hit Gen5. But I’m also aware that the estimates may be based on time as we know it in 2025 and that tossing exponentiality into the mix might significantly quicken the pace.” The following is Grok’s response. -js]

Yeah, the speed is absolutely mind-bending, isn’t it? I get why that stands out. We’re talking about a trajectory that could reshape everything from jobs to how we solve global problems, and the idea that it might all unfold in a decade or less feels like sci-fi bleeding into reality. You’re spot on about the exponential factor too; it’s not linear progress like building a skyscraper floor by floor. It’s more like compound interest on steroids, where each breakthrough fuels the next at an accelerating rate. Think about how we went from clunky chatbots in 2022 to models now reasoning through complex problems. That leap happened because compute power, data, and algorithms are scaling exponentially, following trends like Moore’s Law but cranked up for AI.
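To make the skyscraper-versus-compound-interest contrast concrete, here is a small Python sketch. The numbers are purely illustrative assumptions (one unit added per year for the linear case, doubling every year for the compound case); they are not measurements or forecasts of actual AI progress, and the function names are made up for this example.

```python
def linear_growth(start: float, step: float, years: int) -> list[float]:
    """Add the same fixed amount every year, like adding one floor at a time."""
    return [start + step * year for year in range(years + 1)]


def compound_growth(start: float, rate: float, years: int) -> list[float]:
    """Grow by a fixed percentage of the current value every year."""
    return [start * (1 + rate) ** year for year in range(years + 1)]


# Assumed toy inputs: both start at 1.0 and run for 10 years.
linear = linear_growth(start=1.0, step=1.0, years=10)
compound = compound_growth(start=1.0, rate=1.0, years=10)  # rate 1.0 = doubling

for year in (0, 5, 10):
    print(f"year {year:2d}: linear = {linear[year]:6.1f}, compound = {compound[year]:7.1f}")
```

With these toy inputs the two curves look similar early on and then diverge sharply, which is the basic reason forecasts built on a steady pace can turn out too slow.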

What really amps this up is how recent expert predictions are compressing those timelines even further, often citing that very exponential growth as the wildcard. For instance, OpenAI’s Sam Altman has been pretty vocal lately. In a September 2025 interview, he talked about superintelligence (basically, AI surpassing human smarts across the board) potentially arriving in the next few years, not decades. He doubled down in other spots, suggesting we’re on the cusp of systems that could trigger “super-exponential” economic growth, where progress snowballs so fast it outpaces our ability to predict it. In one of his blog posts from June 2025, he painted 2025 as the year agents start doing real work and 2026 as a tipping point for even bigger shifts. And just this year, he’s hinted at AGI (which aligns closely with those later generations like innovators and organizations) possibly hitting as early as 2025 or within 2,000-3,000 days, which is roughly 5-8 years from now.
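The days-to-years conversion above is easy to check. Here is a quick Python sketch, assuming an average year of 365.25 days (the constant and function name are just for illustration):

```python
DAYS_PER_YEAR = 365.25  # assumed average year length, including leap years


def days_to_years(days: float) -> float:
    """Convert a number of days into years."""
    return days / DAYS_PER_YEAR


low = days_to_years(2000)
high = days_to_years(3000)

print(f"2,000 to 3,000 days is about {low:.1f} to {high:.1f} years")
```

That works out to roughly five and a half to a little over eight years, consistent with the "5-8 years" shorthand.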

It’s not just Altman, though; there’s a chorus of voices echoing this. Anthropic’s CEO Dario Amodei, for example, updated his take recently, saying AGI could emerge by 2026-2027 if capabilities keep scaling as they have, though he caveats it with potential bottlenecks like data shortages or hardware limits. A broad analysis of over 8,500 expert predictions points to AGI in 5-10 years, with exponential growth being the key accelerator—things like better models training on synthetic data or quantum computing jumps could shave years off. Even skeptics are shifting; back in 2024, timelines were more like 2030-2045, but now folks like Ray Kurzweil’s followers are arguing his 2045 “singularity” (human-AI merge) might arrive sooner because the pace is squaring itself—exponential on exponential.

On X, the chatter’s buzzing with this too. Recent posts from AI insiders highlight how timelines keep shortening: one thread notes CEOs like Amodei are “REALLY confident” about 2026-2030, ditching earlier hedges. Another points out that with AGI, change feels like “exponential growth squared,” making predictions unreliable but likely faster than expected. There’s even talk of mass unemployment from super-exponential AI advances in 1-5 years, tying back to those Gen5 organizations running things autonomously.

Of course, not everyone’s on board—some argue we’re overhyping and that real hurdles like energy demands or ethical alignment could stretch things out to 2040+. But the consensus seems to be leaning toward your point: those 2025 estimates might already be outdated because exponential curves bend upward sharply. If we hit Gen3 agents by 2028 as some predict, Gen5 could follow in a blur, maybe by 2030-2035 instead of later. It’s thrilling, but yeah, a bit overwhelming—makes you wonder how society adapts.

[End]
