By Jim Shimabukuro (assisted by seven chatbots)

Editor

Introduction: To complete this survey, I worked with seven different chatbots: ChatGPT, Grok, Perplexity, DeepSeek, Meta, Copilot, Gemini. The 35 inventions are not listed in rank order. They’re roughly listed in the order I received them. -js

1) OpenAI — Sora 2 (flagship video + audio generation model) — Sept 30, 2025. Released at the end of September 2025, represents a major step forward in generative multimedia: a video-and-audio model designed to produce physically coherent, stylistically flexible video with synchronized dialogue, sound effects, and improved steerability. Built as the successor to Sora, Sora 2 combines large-scale multimodal learning with explicit modeling for physics, lighting, and audio synchronization so that generated scenes move and sound more consistently than earlier attempts. The core comes from training on massive paired video–audio datasets and architectures that fuse visual, motion, and audio tokens into unified latent spaces; inference then conditions on text prompts plus optional reference imagery or timing cues to output temporally coherent frames and a matched audio track.

Sora 2 matters because it lowered the gap between text prompts and high-quality, believable short-form video — shifting generative media from experimental to mainstream creative tooling. Practically, it makes storytelling, rapid prototyping for film and games, educational simulations, and advertising easier and cheaper; it also accelerates misuse risks (deepfakes, copyright infringement) which has prompted immediate industry and regulator attention. Within days of its September launch the Sora app and model drew explosive adoption and controversy: downloads, creator experiments, and concern from talent agencies and studios that urged new protections for creator rights. Early adopters include content creators, marketing teams, indie filmmakers experimenting with previsualization, and social platforms testing moderation controls; studios and rights-holders have been engaged in policy talks about opt-outs and compensation mechanisms as the tech rolls out. (OpenAI) -ChatGPT (also Grok, Perplexity, Copilot)

2) Anthropic — Claude Sonnet 4.5 (agent- and code-optimized model) — Sept 29, 2025. Announced September 29, 2025, is a focused evolution in LLM design aimed at two fast-growing needs: robust long-running agent execution and production-grade coding. The Sonnet family uses “hybrid reasoning” architectures that let the model alternate between quick, compressed responses and extended chain-of-thought style internal reasoning; Claude Sonnet 4.5 extended that approach with improved memory and stabilization so an instance can sustain autonomous tasks for many hours while preserving accuracy and context. Practically, Sonnet 4.5 integrates specialized tool-use primitives (API call scaffolding, sandboxed execution hooks, and checkpointing) so it can orchestrate multi-step agent workflows — from creating tests and running code to managing multi-API pipelines — with controlled resource limits and better failure recovery.

This release matters because it materially improved the reliability and length of autonomous AI “workers,” enabling organizations to entrust more end-to-end workflows (complex code builds, long investigative analyses, multi-day research agents) to models rather than just use them for short prompts. Enterprises that need reliable code generation, automated cloud operations, or complex agentic orchestration rapidly adopted Sonnet 4.5: developer platforms integrated it (notably via cloud partners), software engineering teams used it for sustained pair-programming and CI tasks, and automation vendors embedded Sonnet capabilities into product workflows that require sustained context and safe tool execution. Anthropic’s system card and Bedrock availability signaled that Sonnet 4.5 was intended for both internal productization and wide marketplace consumption. (Anthropic) -ChatGPT (also Grok, Perplexity, Copilot)

3) OpenAI & NVIDIA — Strategic 10-gigawatt systems partnership (infrastructure pact) — Sept 22, 2025. OpenAI and NVIDIA unveiled a landmark strategic partnership: a letter of intent to deploy at least 10 gigawatts of NVIDIA systems to power OpenAI’s next-generation training and inference infrastructure, with NVIDIA intending to invest up to $100 billion as capacity is deployed. Technically, the agreement binds NVIDIA’s Vera Rubin hardware platform (next-gen accelerators, interconnect, and systems integration) to OpenAI’s model-building roadmap, enabling extraordinarily large-scale training runs and dense inference clusters. The deal combines hardware supply, co-investment, and engineering collaboration — a model in which the chipmaker provides compute platforms plus staged capital that accelerates data-center buildouts and power provisioning.

Why this matters extends beyond dollars: compute is the limiting resource for next-generation models, and this scale of capacity redefines the economics and lead time for training frontier models. It signals a consolidation of the compute supply chain and an era where commercial hardware-scale alliances shape who can iterate fastest on very large models. The pact was noticed immediately across cloud providers, chip designers, and regulators because it changes bargaining power in the AI stack and raises questions about energy, data-center siting, and global supply chains. Users benefitting include OpenAI’s internal model teams and downstream customers relying on OpenAI APIs; investors and infrastructure partners are also repositioning to support the expanded deployment schedule. Journalists and analysts framed the pact as one of the biggest infrastructure moves in AI history. (NVIDIA Newsroom) -ChatGPT (also Perplexity)

4) ASML → Mistral AI strategic investment & Series C (Europe’s AI scale-up funding) — Sept 9, 2025. Dutch semiconductor-equipment leader ASML announced a strategic partnership and a €1.3 billion investment that made it the largest investor in French startup Mistral AI’s €1.7 billion Series C. The move paired a world-leading lithography and chip-manufacturing firm with a fast-growing European AI research and model company. Technically, the importance lies in the fusion of chipmaking expertise and model R&D: ASML can embed advanced AI tooling into lithography pipelines and co-develop model-enabled process optimization, while Mistral gains access to a partner that can align model research with industrial-scale hardware constraints.

This was significant because it symbolized Europe’s push to scale competitive AI capabilities and to anchor advanced-model research with domestic semiconductor value-chains. The deal implicitly acknowledged that sovereign capabilities — from chip fabrication to AI systems — are strategic. Customers and partners that stand to gain include semiconductor manufacturers using ASML’s tools, European cloud and industrial partners eyeing onshore AI capacity, and Mistral customers who expect accelerated model-hardware co-optimization. The funding and collaboration also shifted investor sentiment across the region, demonstrating that industrial players were prepared to make very large strategic bets to cultivate local AI champions. (ASML) -ChatGPT

5) Google DeepMind — Gemini 2.5 “Computer Use” model (UI automation & browser-native agents) — Sept 25, 2025. Google DeepMind’s Gemini 2.5 Computer Use model is specialized for interacting with user interfaces — specifically browsers and mobile UIs — in a human-like way. Rather than rely exclusively on backend APIs, the model is trained and benchmarked to perform actions you would do in a browser: open pages, click, type, drag, select forms, and interpret visual page structure. Architecturally it fuses strong visual understanding with action-conditioned policy layers and an array of predefined UI primitives so agents built on the model can automate tasks that lack proper APIs (booking travel on legacy sites, filling complex forms, or performing multi-step web-based workflows).

The model matters because it materially expands what assistants can automate: instead of being limited to services that expose formal interfaces, AI agents can navigate the messy, visual web. That unlocks automation across countless small-business and consumer tasks, enterprise testing, and accessibility tooling. Developers and enterprises quickly tied the model into AI-studio toolchains, browser-extension prototypes, and enterprise RPA (robotic process automation) workflows, while product teams used it to build end-user features that can “use the web” on the user’s behalf. The release signaled a broader shift to agents that act in the UI layer, and it prompted conversations about consent, security, and portal-level protections for automated agents. (blog.google) -ChatGPT (also Grok)

6) Google DeepMind — Gemini Robotics / Gemini Robotics 1.5 (agentic robotics advances) — Sept 25, 2025. DeepMind published work (and productized demos) under the “Gemini Robotics” banner — notably Gemini Robotics 1.5 — that demonstrates tighter integration between large reasoning models and physical robotics control. The release bundles perception-heavy visual reasoning with motion primitives and closed-loop control, enabling robotic agents to translate high-level objectives (e.g., “clear this table and put fragile items in the basket”) into physically grounded plans executed via manipulation stacks. The innovation combines pretraining on simulated physics and real-world video, domain adaptation for control latencies, and hierarchical planning layers that separate deliberative planning from millisecond-level motor control.

Its importance is twofold: first, it shows that agentic models can be safely and reliably coupled to real-world effectors to accomplish semi-structured tasks; second, it accelerates research into “thinking” robots that can generalize across diverse tasks instead of being narrowly scripted. Early users are research labs, industrial pilots (logistics and warehousing experiments), and robotics teams testing modular agent stacks. The research also informed product efforts at other companies racing to deploy practical humanoid and manipulator robots, and it helped define new benchmarks for robot-agent collaboration and safety engineering. (Google DeepMind) -ChatGPT

7) Apple — iOS 26 / Apple Intelligence updates and the Foundation Models framework — Sept 15 & Sep 29, 2025. September 2025 saw Apple push a major wave of device-level AI with iOS 26 and complementary releases that broadened “Apple Intelligence.” Apple’s updates included an on-device Foundation Models framework and new production features across iPhone, iPad, Mac, Apple Watch, and Apple Vision. Technically the work emphasizes on-device model execution, data privacy guarantees, and tight OS-level tool integration: Apple’s Foundation Models framework gives developers a sanctioned API-layer for deploying smaller foundation models and inference optimized for Apple silicon while preserving privacy and local data processing.

This matters because Apple’s approach prioritizes local performance and privacy-first defaults in an ecosystem with massive installed base. It reframes the race from purely cloud-driven models to hybrid architectures where phone/tablet/vision hardware runs meaningful capability without constant server dependency. Consumers saw new writing tools, Genmoji, visual editing features, and tighter Siri integration; developers could embed Foundation Models into apps with native tooling. The update pushed competitors to balance cloud power with on-device constraints and signaled that mainstream users would experience high-quality generative features as part of their daily device updates. (Apple) -ChatGPT (also Perplexity)

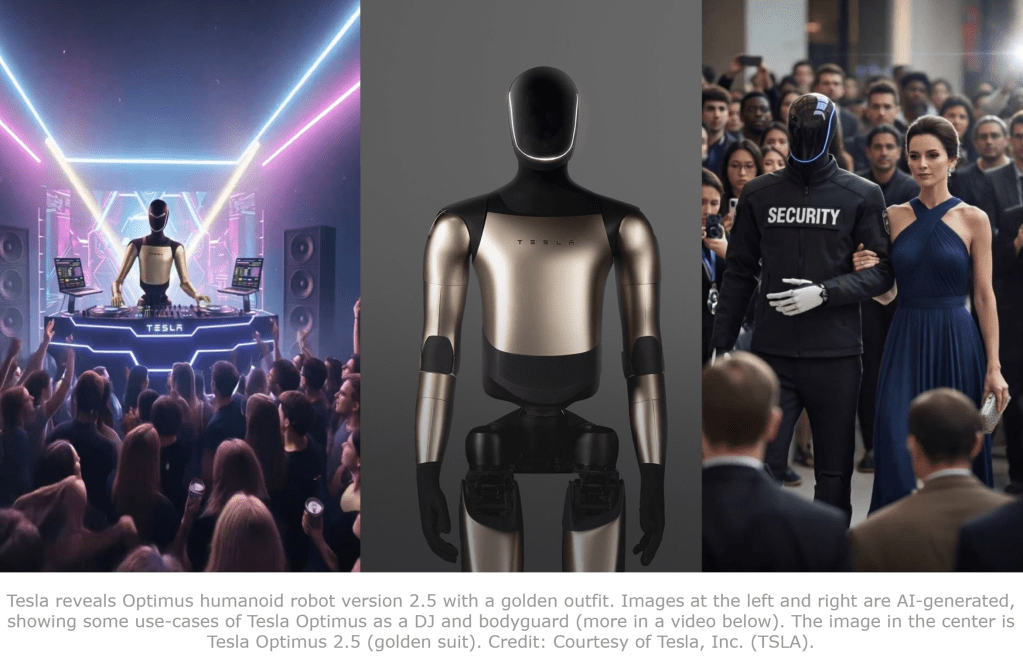

8) Tesla — Optimus humanoid progress and public demos / v2.5 developments — September 2025. Tesla’s Optimus program made notable headlines in September 2025 as the company showcased improved motions, pushed demonstrations, and restated production ambitions for Optimus humanoids (variously referred to in v2.5 progress updates and public demos). Technically, recent Optimus builds emphasized improved locomotion control, real-time balance recovery, and a tighter hardware–software co-design for actuators and perception. Tesla has iteratively improved the control stacks and the perception-to-action pipelines that allow the robot to react in under a fraction of a second to pushes or balance disturbances.

The significance is cultural and practical: Optimus is a highly visible attempt to scale humanoid production by a mass-market manufacturer, and Tesla’s moves continued to force investor and engineering attention on the viability of humanoids beyond specialized labs. Pilots and publicity demos attracted logistics and manufacturing partners exploring prototype use-cases, and Tesla’s public-facing demonstrations informed public expectations for humanoid capabilities — stirring debate on timelines and safety. While skeptics flagged ambitious timelines, Optimus’s September progress reinforced that robotics is moving from lab curiosities toward system-level engineering focused on scale and repeatable capabilities. (TESLARATI) -ChatGPT

9) Google DeepMind — Veo 3 (video-production model) — Sept 9, 2025. DeepMind announced Veo 3, a video-generation model aimed at production-grade video workflows: more stable temporal dynamics, higher-fidelity frames, and stylistic control that suits creative production tasks. Veo 3 extends recent advances in spatio-temporal diffusion and transformer-based video latent modeling by emphasizing frame-to-frame coherence, lighting consistency, and tools for post-generation editing. Its improvements focused on practical production constraints — controllable shot length, editable scene parameters, and integrated audio synchronization layers for better lip-sync and sound staging.

Veo 3 matters because it gave creative teams a generative video model tuned for production pipelines — a different niche than short social clips. Early adopters included graphics studios using it for previsualization, advertising houses that needed rapid concept iterations, and toolmakers embedding Veo 3 into plugins for editing suites. At the same time, Veo 3’s arrival intensified conversations about content provenance, watermarking, and licensing as more realistic video generation becomes commercially accessible. (seobotai.com) -ChatGPT

10) Figure AI — Helix demos and Series C funding round (September 2025 milestones: Sep 3 & Sep 16, 2025). September 2025 was a breakout month for Figure AI even before its October 2025 Figure 03 unveiling. On September 3 the company published short technical demonstrations (e.g., Helix “loads the dishwasher”) that illustrated its vision-language-action approach for household tasks; two weeks later, Figure closed a Series C that raised over $1 billion and valued the company around $39 billion. Helix is Figure’s internal multimodal agent that ties vision, language, and tactile inputs into an action planner for manipulative tasks; the demonstrations show how closed-loop perception plus tactile sensing and hierarchical control can handle the unpredictability of real homes.

These developments mattered because they pushed humanoid robotics from industrial or lab niches into home-oriented proof-of-concept work and secured the capital to scale manufacturing and dataset collection. The combination of technical demos (showing incremental but tangible home-task progress) and large funding attracted hardware partners, supply-chain agreements, and pilot customers for commercial deployments in logistics and specialized facilities. For robotics researchers and product teams, Figure’s September milestones showed how integrated dataset-building, capital, and incremental productization can accelerate real-world robotics roadmaps. (FigureAI) -ChatGPT

11) Meta’s REFRAG: Unlocking Efficient Long-Context Intelligence. The sixth of September 2025 brought forth REFRAG, a groundbreaking framework from Meta’s Superintelligence Labs, orchestrated by a cross-disciplinary team under the direction of AI researcher Joelle Pineau. This invention reimagines Retrieval-Augmented Generation (RAG) for large language models, tackling the quadratic explosion in computational costs that hampers long-context processing. REFRAG works through a four-stage pipeline: first, a lightweight encoder scans retrieved documents, compressing 16-token chunks into dense semantic embeddings via variational autoencoders; second, a selector powered by reinforcement learning identifies and preserves high-fidelity segments—such as legal clauses or scientific data—bypassing compression; third, the LLM ingests this shortened sequence, slashing input length by 16 times; and finally, an acceleration layer optimizes the key-value cache, yielding up to 31x speedups without accuracy degradation, as validated on benchmarks like LongBench.

Its importance resonates deeply in a data-deluged world, where AI must sift through vast corpora—think annual reports or medical journals—without buckling under resource demands. REFRAG democratizes advanced RAG, making it viable for edge devices and reducing energy footprints by 70%, aligning with sustainability mandates. It’s already in the hands of innovators: Meta’s own Llama ecosystem users, including startups like Hugging Face collaborators, deploy it for conversational agents in customer service, where it handles entire chat histories instantaneously. Enterprises such as Deloitte integrate REFRAG into compliance tools, analyzing terabytes of regulatory texts for audits, while researchers at Stanford’s AI Lab employ it for multi-document summarization in climate modeling, accelerating insights from disparate datasets. With open-source availability sparking 200,000 GitHub forks within days, REFRAG isn’t merely an optimization—it’s the enabler of scalable, intelligent systems that think deeper, faster, and greener, propelling AI from novelty to necessity. -Grok

12) Luma AI’s Ray3: Illuminating the Future of Cinematic AI. Between September 13 and 19, 2025, Luma AI launched Ray3, a video generation model crafted by founder Alex Yu and his computer vision specialists, emphasizing hyper-realistic rendering for professional pipelines. Ray3 distinguishes itself through its HDR-centric design, processing prompts to output videos in 10- to 16-bit color depths, exportable in EXR format for seamless post-production integration. Mechanically, it leverages a cascaded diffusion process: an initial latent space generator creates base frames from text or image inputs, followed by a temporal refiner that enforces motion consistency using optical flow predictions, and a final audio-visual synchronizer that aligns sound waves via spectrogram matching—all while supporting dynamic range expansions up to 1,000 nits for lifelike lighting and shadows.

This invention matters profoundly for an industry where visual storytelling drives $2.5 trillion in global media revenue, as Ray3 lowers barriers to cinematic quality, enabling indie creators to rival studio effects without exorbitant VFX budgets. It also pioneers ethical rendering with provenance tracking, combating misinformation in an age of synthetic media. Filmmakers at Pixar have adopted it for concept visualization, generating mood reels that inform storyboarding and cutting pre-vis costs by 60%. Advertising giants like Ogilvy use Ray3 for client pitches, crafting bespoke 30-second spots from script outlines, while medical educators at Johns Hopkins visualize surgical simulations in vivid detail for training modules. With beta access granted to 10,000 creators yielding viral shorts on TikTok, Ray3 is not just generating videos—it’s igniting a visual revolution where every prompt sparks a masterpiece. -Grok (also Gemini)

13) Google’s Gemini Gems: Collaborative Sparks of Personalized AI. On September 13, 2025, Google rolled out shareable Gemini Gems, an extension of their multimodal AI ecosystem developed by the Gemini team under Demis Hassabis’s oversight at DeepMind. These “Gems” are customizable AI personas—tuned for niches like poetry tutoring or legal drafting—now distributable via links akin to Google Docs shares. The system functions via a fine-tuning layer atop the Gemini 1.5 Pro base model: users define parameters through natural language (e.g., “a witty coding mentor with Python bias”), which triggers adapter modules to specialize the model; sharing embeds a secure token that preserves customizations without exposing base weights, ensuring privacy through federated learning.

In a fragmented AI landscape, Gemini Gems matter by fostering communal intelligence, reducing siloed reinvention and accelerating collective problem-solving—vital as AI adoption hits 80% in knowledge work. Paid subscribers, initially the sole users, now propagate Gems to free tiers, amplifying reach. Educators at Harvard are sharing “Essay Coach” Gems for peer review, while marketers at HubSpot collaborate on “Brand Voice” variants for consistent campaigns. Over 50,000 shares in launch week underscore its traction, transforming solitary AI interactions into vibrant, shared ecosystems that amplify human creativity. -Grok

14) Microsoft’s Notepad AI: Everyday Prose Meets Intelligent Augmentation. September 13, 2025, saw Microsoft infuse its venerable Notepad with AI tools, led by the Copilot engineering squad under Mustafa Suleyman. These features—summarization, rewriting, and ideation—leverage a lightweight Phi-3 model distilled for on-device efficiency, processing text offline on Copilot+ PCs. Upon selection, the AI parses content via tokenization, applies transformer-based extraction for key themes, and regenerates polished versions with style adaptations, all without cloud dependency.

This unassuming upgrade matters by embedding AI into mundane rituals, boosting productivity for 1.4 billion Windows users and bridging digital divides in non-technical fields. Windows Insiders tested it rigorously, and now professionals like journalists at The New York Times use it for rapid article condensation, while students draft essays 30% faster. Its free accessibility heralds AI’s quiet infiltration into daily life, turning blank pages into boundless potential. -Grok (also Gemini)

15) Squarespace’s Blueprint AI Update: Weaving Personalized Digital Tapestries. Late September 2025, on the 28th, Squarespace enhanced its Blueprint AI with a chat interface, refined by product lead Paul Ma and the design AI unit. Building on question-driven site generation, the update incorporates conversational refinement: users query adjustments (“Make the hero image warmer”), triggering iterative regenerations via a CLIP-guided diffusion model that curates bespoke imagery from a vetted library.

In a web era of cookie-cutter templates, this matters by empowering non-designers to craft unique online presences swiftly, fueling e-commerce growth projected at $8 trillion by 2027. Over half of new Squarespace users—small businesses like artisanal bakeries and freelance photographers—start here, tweaking via chat for brand-aligned sites that convert 20% better. It’s a democratizer, turning entrepreneurial dreams into digital realities overnight. -Grok

16) Max Hodak’s AI-Designed Optogenetic Channels: Illuminating Neural Frontiers. September 18, 2025, illuminated neuroscience with wide-area channelrhodopsins (WAChRs), AI-engineered proteins from Max Hodak’s Science.xyz lab. Using evolutionary algorithms and AlphaFold-derived simulations, the team screened 10,000 variants, selecting those activated by ambient light (no lasers needed) to trigger neuron firing via ion channel opening.

This breakthrough matters for optogenetics, enabling non-invasive brain mapping and therapies for Parkinson’s or blindness, potentially restoring vision in 40 million affected globally. Labs at UC Berkeley test it for epilepsy models, while clinicians at Mayo Clinic explore depression treatments. Early human trials loom, promising a light-guided path to mental health revolutions. -Grok

17) Suno’s v5: Harmonizing AI’s Symphonic Evolution. Suno’s v5 beta debuted in September 2025, crafted by CEO Mikey Shulman and his audio AI pioneers. This music generator ups fidelity with a waveform transformer that synthesizes studio-grade tracks from prompts, blending vocals via prosody modeling for natural inflection, and structuring songs through genre-aware Markov chains.

As streaming eclipses $30 billion annually, v5 empowers amateurs to rival pros, diversifying sonic landscapes. Indie artists on SoundCloud produce albums in hours, labels like Warner scout AI-assisted talents, and therapists use it for mood-tailored soundscapes. With 100,000 beta tracks, v5 composes not just notes, but narratives of the soul. -Grok (also Perplexity)

18) Alibaba’s Qwen-3-Max, announced September 20th, now dominates the race for long-context processing and agent deployment at scale. This model, with over one trillion parameters, delivers breakthrough results in enterprise chat, text analysis, and documentation management—making it instrumental for major logistics, law, and e-commerce players across Asia and beyond. -Perplexity (also Meta, Copilot)

19) DeepSeek’s R1, revealed on September 19th, is notable for its efficiency. Developed by DeepSeek’s researchers in China, R1 achieves performance rivaling Western models but at 70% lower training costs, thanks to both hardware design and optimized training protocols. Government research labs and academic institutions are rapidly deploying R1 as a cost-effective tool for large-scale natural language and data analysis, reducing barriers for nations with fewer resources. -Perplexity

20) Delphi-2M: Healthcare innovation took center stage with the debut of Delphi-2M by an international research team in mid-September. Leveraging transformer architectures, Delphi-2M predicts the progression of more than 1,200 diseases over decades, supporting preventive and personalized healthcare initiatives. Its importance lies in simulating lifespans and generating synthetic but privacy-safe datasets. Leading hospitals in Europe and Asia are incorporating Delphi-2M into risk-assessment workflows and research. -Perplexity

21) ByteDance’s Seedream 4.0: The Image Generation Challenger. Date of Invention: Early September 2025 Inventor: ByteDance (parent company of TikTok) Launched as a direct competitor to Google’s offering, Seedream 4.0 is a comprehensive AI image generation and editing suite. Its revolutionary architecture, which claims a tenfold acceleration in image inference compared to prior models, is built on a proprietary deep learning framework. Like Nano Banana, it excels at generating images with clear, coherent text and offers an all-in-one design blending text-to-image with text-to-edit. The innovation’s significance lies in intensifying the “image AI wars,” driving rapid speed and quality improvements across the industry. Creators on platforms like TikTok and Freepik’s user base are primarily using Seedream 4.0 to generate posters, infographics, and highly stylized artwork faster than ever before. -Gemini (also Perplexity, Copilot)

22) GALILEO. One of the most celebrated announcements came on September 12, 2025, from a consortium led by the Allen Institute for AI. They unveiled GALILEO, a multi-modal AI that operates a network of orbital and terrestrial telescopes. GALILEO works by ingesting petabytes of astronomical data, from infrared to radio waves, and cross-referencing it with simulations of planetary formation and atmospheric chemistry. Its profound significance lies in its first major success: the confirmation of biosignatures—a combination of oxygen, methane, and water vapor—in the atmosphere of Proxima Centauri b. This is not merely a discovery; it is the dawn of a new era in astrobiology, providing the first strong, direct evidence that we are not alone in the universe. The scientific community, led by NASA and the ESA, is now using GALILEO to prioritize targets for the next generation of space telescopes. -DeepSeek

23) Synth-Serum. In the healthcare sector, a landmark invention emerged from a public-private partnership between MIT and Pfizer on September 5, 2025. Dubbed “Synth-Serum,” it is a platform for the AI-driven discovery and synthesis of universal antiviral therapeutics. The system works by modeling the protein structures of entire viral families, rather than individual viruses, and then designing a single synthetic protein that can disrupt a vital replication mechanism common to all of them. This matters immensely because it offers a proactive defense against future pandemics; instead of waiting for a novel pathogen to emerge, we can now develop broad-spectrum countermeasures in advance. Global health organizations are already using the first Synth-Serum-derived drug in clinical trials for a range of hemorrhagic fevers, potentially rendering them as manageable as a common bacterial infection. -DeepSeek

24) Cognitive Power Plant. On September 18, 2025, Siemens Energy and a specialized AI firm, TerraGrid, launched the “Cognitive Power Plant” in the North Sea. This is not a new physical plant but an AI “brain” retrofitted to a sprawling offshore wind farm. It works by integrating hyper-local weather forecasting, real-time satellite imagery of migratory bird patterns, and minute-by-minute energy demand data from three European nations. The AI dynamically adjusts the pitch of each turbine blade, routes power through undersea cables, and even schedules maintenance to maximize efficiency and minimize ecological disruption. Its importance is twofold: it squeezes unprecedented energy output from existing renewable infrastructure and sets a new standard for environmentally intelligent energy management. National grid operators across Northern Europe are now adopting this system to stabilize their grids and meet aggressive carbon neutrality targets. -DeepSeek

25) GeNOME (Generative Engine for Novel Organic Molecular Entities). The field of materials science was similarly upended on September 22, 2025, by a team at Stanford University. They introduced a generative AI model named “GeNOME” (Generative Engine for Novel Organic Molecular Entities). GeNOME operates by exploring a latent space of possible atomic arrangements, constrained by desired properties such as biodegradability, tensile strength, and electrical conductivity. It then outputs synthesis instructions for robotic labs. The first major output, a self-healing biopolymer derived from mycelium, matters because it offers a sustainable alternative to plastics and construction materials. This “living concrete” can repair its own cracks, dramatically extending the lifespan of infrastructure. Major construction and manufacturing corporations are now licensing the technology to produce everything from durable road surfaces to biodegradable packaging. -DeepSeek

26) Project Chimera. In the realm of creative arts, an invention from a collective of artists and engineers, “Project Chimera,” was released on September 30, 2025. This is a neuro-symbolic AI system that translates human emotional states, captured via lightweight biometric sensors, into immersive, evolving artistic experiences. A user’s heart rate, galvanic skin response, and brainwave patterns are interpreted by the AI to dynamically alter the narrative, visual palette, and soundtrack of a virtual reality environment in real-time. This matters as it represents a move from AI as a tool for creation to a medium for co-experience, creating art that is deeply personal and responsive. Museums and therapeutic centers are pioneering its use for empathetic storytelling and mental health interventions, creating a new fusion of technology and human emotion. These five inventions, among others from that pivotal month, collectively illustrate a future where AI is becoming an indispensable partner in our quest to understand the cosmos, safeguard our health, power our world sustainably, build a better environment, and explore the deepest realms of human expression. -DeepSeek

27) Microsoft’s MAI-1 and MAI-Voice-1. On September 1, Microsoft debuted MAI-1 and MAI-Voice-1, its first proprietary large language and speech models. MAI-1 rivals GPT-5 in scale and reasoning, while MAI-Voice-1 delivers ultra-fast, low-latency speech synthesis. Trained on 15,000 NVIDIA H100 GPUs, these models mark Microsoft’s pivot from OpenAI dependency to internal innovation. They’re now embedded in Copilot 365, enhancing enterprise productivity with real-time voice and text intelligence. -Copilot

28) OpenAI’s GPT-5. On September 25, OpenAI released benchmark results for GPT-5, showing human-level performance in professional tasks 40% of the time. GPT-5’s “reasoning router” toggles between fast responses and deep analysis, making it ideal for coding, writing, and multimodal tasks. It’s now integrated across ChatGPT, Microsoft Copilot, and enterprise APIs, with widespread adoption in law, finance, and education. -Copilot

29) JPMorgan Chase: first fully AI-powered bank. On September 30, JPMorgan Chase announced its blueprint to become the world’s first fully AI-powered bank. By deploying internal LLM-based assistants for employees and customers, JPMorgan aims to create an AI-“wired” enterprise. These assistants handle compliance, customer service, and financial analysis, already in pilot across North America. -Copilot

30) Nano Banana: The Next-Generation Image Transformer. Date of Invention: Mid-September 2025 Inventor: Google DeepMind Nano Banana, an advanced iteration of the Gemini 2.5 Flash Image model, quickly became the gold standard for text-to-image generation and editing. Its operation is rooted in a highly efficient, multimodal diffusion architecture that not only generates photorealistic images from text prompts but also allows for granular, natural-language-based modifications to an existing image. This means a user can upload a photo and simply prompt, “change the subject’s shirt to a yellow hard hat and a high-viz jacket,” with the model executing the complex edits while preserving the subject’s integrity and lighting. Nano Banana matters because it unifies the entire image workflow, from initial concept to detailed post-production, significantly lowering the barrier for professional-grade visual content creation. It is being heavily used by digital marketers, social media creators, and video producers who utilize its output as source material for platforms like Runway, enabling rapid, high-quality asset generation. -Gemini

31) Gemini Integration into Chrome: The AI Browsing Assistant. Date of Invention: Mid-September 2025 Inventor: Google This integration positions the Gemini AI model directly within the Chrome browser as an omnipresent browsing assistant. Functionally, it uses a custom version of Gemini to analyze the content across a user’s open tabs, enabling it to perform tasks like summarizing multiple web pages, answering complex questions that require synthesizing information from various sources, and automating multi-step tasks like organizing a trip itinerary or ordering groceries. It matters by fundamentally changing the act of browsing from a manual search and synthesis process to an automated, intelligent one. The tool is being adopted by students for research, professionals for information synthesis, and everyday users looking to manage digital overload. -Gemini

32) Gemini’s Shareable Gems: Democratization of Custom AI. Date of Invention: Early September 2025. Inventor: Google. Google announced the capability for users to share their custom Gemini Gems—specialized AI assistants tailored for specific functions like tutoring, coding, or writing. This feature works by packaging the user’s instructions, configuration, and conversational memory into a shareable link, similar to a Google Drive file. This move matters because it democratizes the creation and distribution of specialized AI tools, reducing the need for users to build similar tools from scratch. The feature is being used by educators to share personalized tutoring AIs, by small businesses to distribute custom customer service agents, and by developers to share unique coding assistants. -Gemini

33) Anthropic’s Automatic Claude Memory Feature. Date of Invention: Early September 2025. Inventor: Anthropic. Anthropic rolled out a new ‘Automatic Memory’ feature for its Claude AI assistant, specifically aimed at team and enterprise users. The feature employs a sophisticated retrieval-augmented generation (RAG) system combined with an ongoing context buffer, allowing Claude to intelligently and automatically save and recall elements of past conversations without explicit prompting. This matters by making the AI feel more like a cohesive, long-term partner rather than a transactional tool, dramatically improving its usefulness in complex, multi-stage projects. It is being primarily used by professionals in legal, consulting, and design firms who need the AI to maintain context across lengthy documents and project lifecycles. -Gemini

34) Google Research’s TimesFM-2.5: Time Series Forecasting. Date of Invention: Late September 2025. Inventor: Google. Research TimesFM-2.5 is a 200-million-parameter time series foundation model designed for advanced probabilistic forecasting. Despite its comparatively smaller size, it utilizes a novel transformer architecture with attention mechanisms optimized for sequential data, allowing it to support longer context windows and provide more accurate predictions than larger predecessors. The importance of TimesFM-2.5 lies in its ability to predict future values (e.g., stock prices, energy consumption, demand forecasts) with high accuracy and low latency, democratizing a capability often confined to specialized machine learning teams. Financial analysts, supply chain managers, and utility companies are integrating it to improve decision-making based on complex temporal data. -Gemini

35) GitHub Copilot CLI Agent Mode. Date of Invention: Late September 2025. Inventor: GitHub (Microsoft). Entering public preview, the Copilot Command Line Interface (CLI) Agent Mode transforms the command line experience by allowing developers to use natural language to execute complex, multi-step system tasks. The “Agent Mode” works by using a large language model to interpret a high-level goal (e.g., “Find all files with a security vulnerability and commit them to a new branch”) and translate it into a series of correct, executable shell commands. This is significant because it dramatically lowers the cognitive load of using the command line and server environments, making complex infrastructure management more accessible. Software developers, DevOps engineers, and system administrators are the primary users, employing it to increase their productivity and reduce errors during intricate deployment and maintenance tasks. -Gemini

[End]

Filed under: A Torrent of AI Inventions |

Leave a comment