By Jim Shimabukuro (assisted by ChatGPT)

Editor

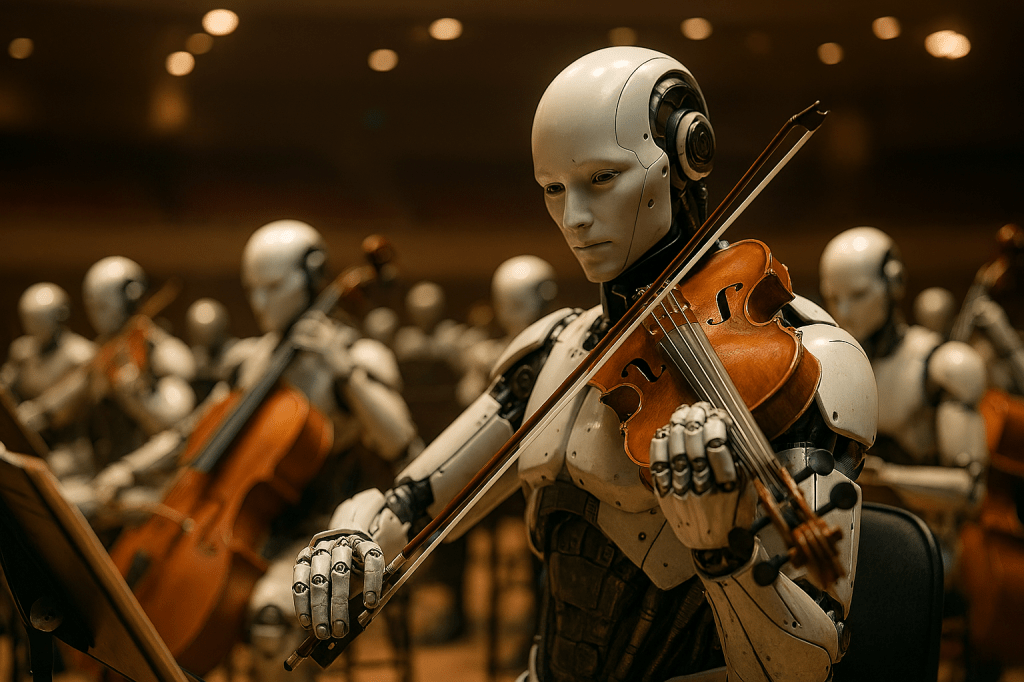

The question of whether an AI-driven robot can truly play a musical instrument, especially at a high artistic level, touches both the limits of robotics and the nature of human expressivity. In recent years, advances in machine learning, sensor technology, and robotics have brought us closer to answering that question with a “yes,” though a qualified one. Some instruments lend themselves more easily to robotic imitation than others. A closer look at the violin, trumpet, guitar, and drums reveals how the degree of difficulty varies depending on the physical and expressive demands of each instrument.

The violin stands as one of the most formidable challenges. Unlike the piano or guitar, which rely on discrete pitches, the violin depends on continuous pitch control and subtle physical nuance: micro-adjustments of bow pressure, angle, and speed that define tone and emotion. For trained players, this becomes second nature through a feedback loop between ear, hand, and feeling; for machines, it represents a near-insurmountable complexity. Yet progress is being made.

In 2024, researchers at the University of Tsukuba in Japan introduced Asimo StringBot, a humanoid robot capable of playing simple violin melodies. Using real-time machine vision and auditory feedback, the robot learns to adjust its bowing technique as it listens to itself play. “Our goal is expressive control, not merely correct notes,” explained Dr. Takashi Kato, who leads Tsukuba’s AI Music Lab. The sound remains mechanical, the phrasing stiff, but the experiment marks a step toward a day when robotic violinists may reach intermediate human skill levels, perhaps even achieving modest expressivity by the 2030s.

The trumpet poses a different but equally intricate problem: how to simulate human breath and embouchure. Producing a musical tone on a brass instrument requires precise control of airflow and lip tension, something difficult to replicate mechanically. Yamaha’s early trumpet-playing robot, unveiled at CES in 2009, used artificial lungs and flexible polymer lips to produce basic notes and jazz riffs.

Since then, researchers at MIT’s Embodied Music Systems Group have taken the concept further by developing soft actuators that mimic the shape and responsiveness of human lips. Their AI software continually adjusts the embouchure in response to pitch errors, creating a rudimentary feedback loop similar to what a human musician experiences. While tone quality has improved significantly, robots still struggle with expressive phrasing—vibrato, articulation, and breath dynamics. The next breakthrough will likely come through predictive airflow models that allow the AI to “breathe” in a more organic, musical way.
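To make the idea of a pitch-error feedback loop concrete, here is a minimal sketch in Python. It is not the software described above: the `toy_instrument` function is a made-up stand-in for a brass instrument (pitch simply rises with lip tension), and the gain and tolerance values are assumptions chosen for illustration. The loop measures how far the played note is from the target, in cents, and nudges the tension in the opposite direction until the error is small.

```python
import math


def cents_error(played_hz, target_hz):
    """Signed pitch error in cents (100 cents = one semitone)."""
    return 1200.0 * math.log2(played_hz / target_hz)


def toy_instrument(tension):
    """Hypothetical plant: pitch rises linearly with lip tension.

    This is an illustrative assumption, not a model of real brass acoustics.
    """
    return 400.0 + 100.0 * tension


def correct_pitch(target_hz, tension=0.0, gain=0.002, steps=50, tolerance_cents=1.0):
    """Proportional feedback: adjust tension against the measured pitch error."""
    for _ in range(steps):
        error = cents_error(toy_instrument(tension), target_hz)
        if abs(error) < tolerance_cents:
            break
        # Flat notes (negative error) raise tension; sharp notes lower it.
        tension -= gain * error
    return tension, toy_instrument(tension)


if __name__ == "__main__":
    tension, played = correct_pitch(440.0)
    print(f"tension={tension:.3f}, played={played:.2f} Hz")
```

A real system would have to cope with noisy pitch detection, airflow, and a far less cooperative instrument, which is part of why expressive phrasing remains hard.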

In contrast, the guitar has become something of a success story in robotic musicianship. The instrument’s design—fretted, with discrete pitches and predictable string behavior—lends itself well to mechanical replication. Several projects have achieved remarkably high performance. Teotronica’s 2022 guitar-bot, for instance, can execute complex solos with 17 motorized fingers, while Google’s Magenta project integrates deep learning models to control strumming and timing in coordination with AI-composed music.

Open-source initiatives like GuitarBot have even begun incorporating “humanization” algorithms that introduce slight timing imperfections, giving performances a more natural groove. Because fretted notes do not require microtonal correction, robots can play guitar with extraordinary precision, and within the next decade, virtuoso-level performance may be fully within reach.
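As a rough illustration of what a “humanization” step might look like, the Python sketch below shifts each note onset by a small random amount. It is not taken from any of the projects named above; the function name `humanize`, the 8 ms default, and the clamp at three standard deviations are all assumptions made for this example.

```python
import random


def humanize(onsets, jitter_ms=8.0, seed=None):
    """Return note onsets (in seconds) with small random timing offsets.

    Each offset is drawn from a Gaussian and clamped to +/- 3 sigma so that
    no single note drifts far from the grid. Onsets never go below zero.
    """
    rng = random.Random(seed)
    sigma = jitter_ms / 1000.0
    limit = 3.0 * sigma
    shifted = []
    for t in onsets:
        offset = max(-limit, min(limit, rng.gauss(0.0, sigma)))
        shifted.append(max(0.0, t + offset))
    # Sorting keeps the notes in time order even if two close onsets cross.
    return sorted(shifted)


if __name__ == "__main__":
    grid = [0.0, 0.5, 1.0, 1.5]  # perfectly quantized eighth notes at 60 BPM
    print(humanize(grid, seed=1))
```

Passing a fixed `seed` makes the “imperfections” repeatable, which is handy when comparing takes; leaving it as `None` gives a different feel each time.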

Drums represent the area of greatest accomplishment. Here, machines already rival or surpass human performers in consistency and technical execution. Rhythmic precision, rather than subtle intonation, defines drumming, and this plays to AI’s strengths. Robotic ensembles such as Compressorhead have been touring for years, their drummer “Stickboy” performing complex polyrhythms with unerring accuracy.

At Georgia Tech, the Shimon project has extended the concept to melodic percussion, using deep learning to improvise alongside human musicians. In 2025, Boston Dynamics demonstrated its Atlas robot adapting drum patterns dynamically to live tempo changes—a feat of real-time responsiveness once thought impossible for machines. When paired with algorithms that simulate the small timing fluctuations of human playing, these systems can produce rhythms that feel almost indistinguishable from those of expert drummers.

If we compare these instruments side by side, a clear pattern emerges. Robots already excel at instruments with predictable mechanical actions—drums and guitars—while struggling with those that demand fluid, continuous control, like the violin and trumpet. The next phase of progress will likely depend on “sensor fusion,” a technology that allows AI systems to integrate tactile, auditory, and positional feedback into a coherent sense of musical awareness.
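One simple way to picture sensor fusion is inverse-variance weighting, a standard technique in which each sensor's reading counts in proportion to how reliable it is. The Python sketch below is only an illustration under that assumption; the sensor names and numbers are invented, and real musical robots may combine their inputs quite differently.

```python
def fuse(estimates):
    """Combine (value, variance) pairs by inverse-variance weighting.

    Sensors with lower variance (more trustworthy readings) get more weight.
    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings of bow position in millimetres.
    readings = [
        (10.0, 1.0),  # tactile sensor
        (12.0, 1.0),  # position encoder
    ]
    print(fuse(readings))
```

With two equally reliable sensors the result is just their average, and the fused variance is smaller than either input, which is the basic payoff of combining sources.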

By combining reinforcement learning with high-resolution motion capture of human musicians, researchers are beginning to train robots not only to reproduce notes but also to interpret them—to infuse motion with intention, rhythm with phrasing, and tone with emotional contour.

The dream of a robotic virtuoso may still sound like science fiction, but it is no longer an idle fantasy. Within the next 10 to 15 years, we may see AI instrumentalists performing alongside orchestras, jazz ensembles, and rock bands, not as curiosities or gimmicks but as genuine musical collaborators. What remains to be seen is not whether robots can play the right notes, but whether they can one day mean them.

[End]


This was such a fascinating read — I love how you broke down the differences between instruments and why some are robot-friendly and others aren’t. It really shows that artistry isn’t just about hitting the right notes, but about nuance, feel, and feedback. If AI ever truly gets that micro-expressivity right, it’ll be a huge milestone — and a very weird moment in music history!

Hi, Yula. Agree. Definitely weird. AI is improving so quickly that even the most liberal predictions are proving conservative. In 10 years (maybe sooner), we’ll probably see AI playing nearly all instruments at virtuoso levels. The upside is that we’ll more than likely quickly adjust to this and use it to our advantage in ways that we can’t fathom now. Think of how quickly we adapted to smartphones. The same will probably happen with virtuoso AI humanoids. Conceivably, a composer or conductor could have an entire orchestra of AI humanoids to experiment with. They’ll work 24/7 without lunch or coffee breaks. Musicians would do things with AI that they couldn’t on their own. People who can’t play instruments will be able to vicariously do so with any number of instruments. With the physical “playing” no longer an issue, we’d be able to focus on more creative and innovative performances and compositions. The possibilities are endless. -Jim