By Jim Shimabukuro (assisted by ChatGPT)

Editor

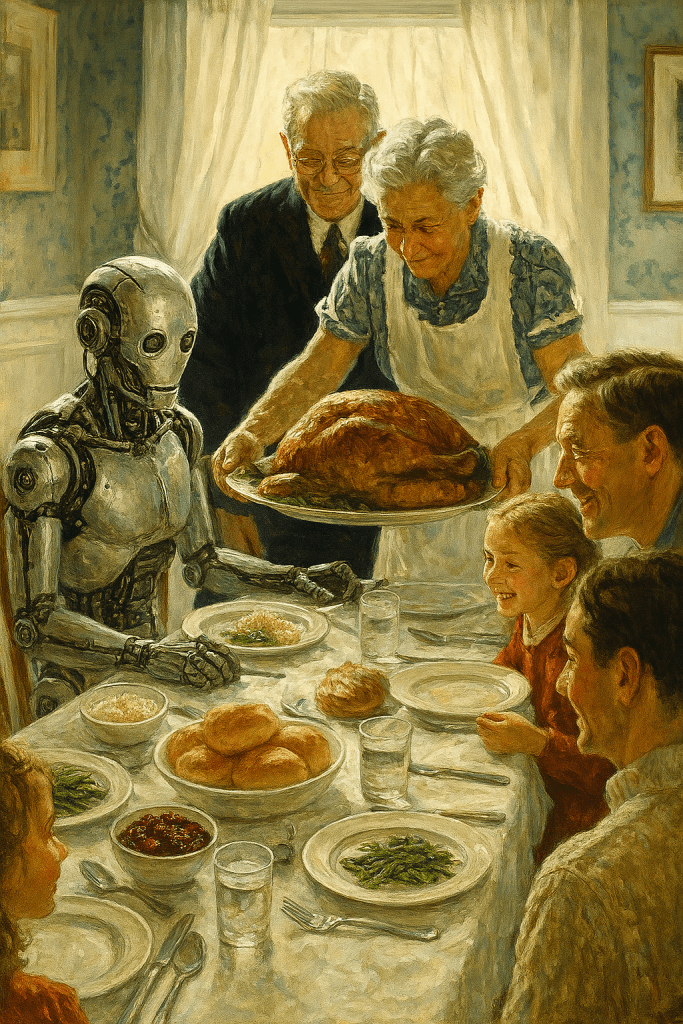

Introduction: On Thanksgiving Day 2025, I asked ChatGPT to identify ten individuals around the world whom we should thank for significant contributions to the growth of AI in 2025. -js

1. Sam Altman — CEO, OpenAI

Sam Altman spent 2025 as one of the most visible figures in the AI world, and the reason is simple: he runs a company that sits at the intersection of research, product, politics and capital. If you want to understand why the technical breakthroughs of the year mattered beyond the lab — in governments, classrooms, workplaces and households — you need to understand how OpenAI under Altman moved from prototypes into products at scale. In 2025 OpenAI wasn’t only releasing model updates; it was shaping deployment patterns (how agents and multimodal assistants are integrated into widely used apps), influencing where and how hyperscalers and regional cloud providers invested in datacenter capacity, and participating in the public argument about what regulation and safety should look like. Altman’s influence is as much about choices — which products to push, when to publish, how to engage regulators — as it is about the company’s intellectual heft. That combination of product timing, media visibility, and policy engagement amplified OpenAI’s technical moves: a model that’s technically better only changes life when it’s embedded into tools people actually use, and Altman’s team was focused on exactly that in 2025. (Sam Altman)

Those moves had ripple effects. OpenAI’s DevDay announcements and hardware partnerships shaped expectations about developer ecosystems (the kinds of capabilities startups would build on top of base models), and Altman’s public talks and posts helped normalize the idea of small devices and dedicated consumer hardware as the next frontier for assistants (he spoke publicly about a device being designed with Jony Ive). All of this mattered because 2025 was a year in which adoption and governance tensions collided: more capability and wider deployment produced urgent conversations about safety, content moderation, competition, and energy use. Altman had to balance investor and board expectations against public scrutiny, and that balancing act shaped how the industry scaled in a politically charged year. (Axios)

Finally, Altman’s public influence mattered because of signalling: when OpenAI moved, many partners and customers followed. The company’s product cadence helped create an industry rhythm — companies timed their launches, enterprises evaluated vendor agreements, and regulators used high-profile product incidents to justify hearings and rules. In short, Altman didn’t just help push technology forward; he helped determine how society began to handle that technology as it left research labs and entered everyday life. That’s the core reason he’s someone to thank (or at least to pay attention to) on Thanksgiving 2025. (Sam Altman)

Sources quoted (sample 2025 items & one-sentence quotes):

- Sam Altman (author), Blog: “…” (personal blog), 2025 — https://blog.samaltman.com/. Quote: “2025 has seen the arrival of agents that can do real cognitive work; writing computer code will never be the same.” (Sam Altman)

- Ben Thompson, “An Interview with OpenAI CEO Sam Altman About DevDay and the AI Buildout,” Stratechery, Oct 8, 2025 — https://stratechery.com/2025/an-interview-with-openai-ceo-sam-altman-about-devday-and-the-ai-buildout/. Quote: “An interview with Sam Altman about DevDay and the AI buildout.” (Stratechery by Ben Thompson)

- Juli Clover, “Jony Ive, Sam Altman: OpenAI plans elegantly simple device,” MacRumors, Nov 24, 2025 — https://www.macrumors.com/2025/11/24/jony-ive-sam-altman-ai-device-2/. Quote: “Ive’s design is elegantly simple,” (reporting on Altman’s device plans). (MacRumors)

2. Jensen Huang — Founder & CEO, NVIDIA

If 2025 felt like a year in which AI “got real,” Jensen Huang and NVIDIA deserve a huge amount of that credit — not because they write models, but because they supply the engines those models run on. The narrative of AI’s rapid adoption in 2025 is inseparable from a supply-side story: more companies wanted to train and deploy large models, and NVIDIA had the line of accelerators, developer tools, and datacenter relationships to make it practical. Jensen Huang’s leadership is unusually hands-on for a CEO: he shapes product roadmaps, evangelizes to customers, and orchestrates partnerships that reduce friction between raw silicon and usable cloud services. In plain terms, when researchers and enterprises say “we’ll train the next big model,” they’re implicitly also saying “we’ll need the hardware,” and in 2025 NVIDIA’s chips and software stack were the thing most people reached for. (Reuters)

Beyond simply shipping GPUs, Huang has spent 2025 framing the industry story: that we’re at a tipping point where specialized accelerators are required for everything from code generation to robotics. He pushed not only new generations of chips but also a narrative that GPUs are a general-purpose platform for many kinds of AI workloads, helping companies justify investments in new infrastructure. The practical outcome is that research breakthroughs could be scaled: training runs that would have been impossible a few years earlier became routine because the supply chain, SDKs, and cloud partnerships coalesced. That’s why hardware leadership translates into real-world AI growth: without the ability to train and serve models, research is just academic. (Reuters)

Finally, Huang’s position matters politically and economically. Whenever there’s a funding wave or an industry scare (concerns about a “bubble,” for example), investors and partners look to the hardware layer for clues. Huang’s public rebuttals to bubble talk in 2025, and his insistence that demand is structural, shaped market expectations and, indirectly, how rapidly companies invested in AI projects. Put simply: Jensen Huang didn’t write the papers on large language or multimodal models, but he made it possible for thousands of teams to execute those ideas at scale in 2025. That’s a major reason to single him out when thinking about who accelerated AI adoption this year. (Reuters)

Sources quoted (sample 2025 items & one-sentence quotes):

- Rodrigo Campos (Reuters), “Tipping point or bubble? Nvidia CEO sees AI transformation while skeptics count,” Reuters, Nov 19, 2025 — https://www.reuters.com/business/tipping-point-or-bubble-nvidia-ceo-sees-ai-transformation-while-skeptics-count-2025-11-20/. Quote: “Nvidia CEO says he does not see an AI bubble, but rather a tipping point.” (Reuters)

- Yahoo Finance summary, “Jensen Huang pours cold water on an AI bubble…,” Nov 19, 2025 — https://finance.yahoo.com/news/jensen-huang-pours-cold-water-000715777.html. Quote: “The Nvidia CEO said the world is at a tipping point.” (Yahoo Finance)

- Geoff Weiss (Business Insider), “In leaked recording, Nvidia CEO says it’s ‘insane’ some of his managers aren’t going all in on AI,” Nov 25, 2025 — https://africa.businessinsider.com/news/in-leaked-recording-nvidia-ceo-says-its-insane-some-of-his-managers-arent-going-all/cp2j5yq. Quote: “Huang wants employees to use AI whenever they can.” (Business Insider Africa)

3. Demis Hassabis — CEO, Google DeepMind

Demis Hassabis’s 2025 felt like an exercise in long-game R&D translated into visible product leaps. DeepMind has for years focused on pushing model capabilities (longer-horizon reasoning, multimodal synthesis, and scientific applications), and in 2025 those research priorities started to bleed into Google product roadmaps in ways that mattered for enterprises and researchers alike. Hassabis, a hybrid of neuroscientist, game designer and research manager, guided teams that aimed for models capable of more sustained planning and deeper multimodal understanding. That work raised expectations: if models can reason better about context and cross-domain data, they can move beyond chat and into demanding workflows such as scientific discovery, multistep planning, and interaction with the physical world. (TIME)

Part of Hassabis’s significance in 2025 was rhetorical as much as technical. He has spent years reminding the industry that capability must be paired with careful evaluation: DeepMind’s attention to benchmarks, safety testing, and scientific utility pressured competitors to be more rigorous about measurement and deployment. When DeepMind published or demonstrated a capability — for example, multimodal agents that can hold longer tasks or models trained to propose experiments — the rest of the field heard the signal and adjusted roadmaps. The consequence is that 2025’s progress felt less like a collection of flashy demos and more like incremental steps toward more generally useful systems that could be measured and audited. (TIME)

Finally, Hassabis’s leadership matters because he helps keep the long-term endgame visible: DeepMind’s insistence on “scientific” applications and rigorous testing fed a narrative that AI’s value includes accelerating research (drug discovery, materials science, genomics) — not only automating office tasks. That framing influences funding decisions, cross-disciplinary hires, and public expectations about what “useful” AI looks like. In a year where the line between capability and consequence was thin, Hassabis’s mix of technical ambition and measured evaluation played a stabilizing role. (TIME)

Sources quoted (sample 2025 items & one-sentence quotes):

- Billy Perrigo, “Demis Hassabis Is Preparing for AI’s Endgame,” TIME, Apr 16, 2025 — https://time.com/7277608/demis-hassabis-interview-time100-2025/. Quote: “We are building a brain for the world.” (TIME)

- Jeffrey Dastin, “Google’s top AI executive seeks the profound over profits,” Reuters feature, Nov 13, 2025 — https://www.reuters.com/investigations/googles-top-ai-executive-seeks-profound-over-profits-prosaic-2025-11-13/. Quote: “Hassabis favored high-minded concepts over short-term business opportunities.” (Reuters)

- Scott Pelley (CBS/60 Minutes transcript), “Artificial intelligence could end disease, lead to ‘radical abundance’ — Demis Hassabis,” Apr 20, 2025 — https://www.cbsnews.com/news/artificial-intelligence-google-deepmind-ceo-demis-hassabis-60-minutes-transcript/. Quote: “Artificial intelligence could end disease, lead to ‘radical abundance’.” (CBS News)

4. Dario Amodei — CEO, Anthropic

Dario Amodei spent 2025 loudly and consistently doing two things at once: pushing Anthropic to build more capable models, and insisting the industry take alignment and governance seriously. That dual approach mattered in a year when technical progress and real-world deployment were accelerating together. Anthropic’s product releases — Claude family updates and enterprise tooling — were matched by a public posture that refused to treat safety as a PR checkbox. Amodei repeatedly framed alignment not as a sidebar of research but as an operational discipline: measuring model behavior, designing guardrails, and building review processes that could scale as models did. For many customers and policymakers, Anthropic’s combination of capability and explicit safety-first branding made it a sort of “serious alternative” in 2025. (Fortune)

That matters because real-world incidents in 2025, from hallucinations to politically sensitive outputs, pushed governments and enterprises to ask not just whether models are useful, but whether they can be governed. When Anthropic advocated cautious deployment strategies, called for external audits, or participated in regulatory discussions, it raised the floor for responsible behavior across the sector. The company’s posture also influenced procurement decisions: enterprise buyers who wanted both capability and documented safety processes looked to Anthropic as an option that combined both. So Amodei’s public advocacy had commercial consequences: it encouraged the industry to treat safety as a differentiator rather than a tax. (Fortune)

On the other hand, 2025 also brought thorny political moments: Anthropic leadership was called to hearings and faced cybersecurity questions about how commercial models might be exploited. Amodei’s role, defending a path for rapid capability work while supporting real governance, was therefore a pragmatic balancing act: the industry needed someone to build and someone to warn, and in 2025 Amodei played both roles. The result was a year in which safety moved from academic lab reports into boardroom checklists and public hearings. For anyone reflecting this Thanksgiving on who shaped AI this year, Amodei’s insistence on making safety operational deserves thanks for nudging the industry toward more responsible deployment practices. (Fortune)

Sources quoted (sample 2025 items & one-sentence quotes):

- Fortune coverage (Nov 17, 2025), “Why Anthropic CEO Dario Amodei spends so much time…,” Fortune — https://fortune.com/2025/11/17/anthropic-ceo-dario-amodei-ai-safety-risks-regulation/. Quote: “Amodei has been outspoken about the need for greater AI regulation.” (Fortune)

- “60 Minutes” transcript / CBS, Nov 2025 — reporting on Amodei’s public comments about risk. Quote: “Amodei warns of the potential dangers of fast moving and unregulated AI.” (CBS News)

- Axios (Nov 26, 2025), reporting that Anthropic’s CEO was summoned to testify on an AI-related cyberattack, illustrating regulatory pressure in 2025. Quote: “Lawmakers are aiming to better understand how AI is influencing the cyber threat landscape.” (Axios)

5. Emad Mostaque — Founder & former CEO, Stability AI

Emad Mostaque and Stability AI kept 2025 interesting by pursuing a different pole of the AI ecosystem: openness and broad access. While hyperscalers and closed labs were building very large, tightly controlled proprietary stacks, Stability and a set of open-model players pushed the argument that competition, local-language models, and community tooling matter for broad adoption. In practical terms, Stability’s releases in 2025 focused on lower-cost foundational models and developer tooling that let smaller companies, researchers, and creative professionals run generation workloads without being locked into a single cloud vendor or a single commercial licensor. That widened the funnel of who could experiment and ship products, which — economically — matters a lot for innovation. (Axios HQ)

The open-ecosystem strategy also generated debate. Critics flagged quality and governance concerns for broadly distributed models, while supporters argued that open models fostered innovation and democratized access. In 2025, this debate had real effects: it changed where startups built (some opted for open backends), how universities taught students about AI, and how country-level policymakers thought about industrial strategy for AI. Stability’s visible presence and Mostaque’s willingness to talk to media pushed these themes into the mainstream. For folks building creative tools, local-language services, or research projects, Stability’s work in 2025 lowered the cost of entry and made it easier to prototype quickly. (Axios HQ)

Finally, Mostaque’s role mattered because openness shapes norms. When a company with Stability’s profile offers productive open models, it changes the competitive calculus for proprietary labs: either they open APIs, license more flexibly, or invest more in ecosystem services. That multiplicative effect — the open option changing the options other players choose — is why people working on open models are part of the year’s growth story. If you’re thankful for broad access to tools and for the creative explosion of apps in 2025, thank people like Emad Mostaque who pushed open choices into the market. (Axios HQ)

Sources quoted (sample 2025 items & one-sentence quotes):

- Axios HQ interview/profile (2025), “Stability AI’s CEO on private data and looking ahead,” AxiosHQ — https://www.axioshq.com/insights/stability-ai-ceo-on-private-data-and-looking-ahead. Quote: “We see the wave coming… every company has to implement it.” (Axios HQ)

- Transcript: “We Have 900 Days Left — Emad Mostaque on The Tea with Myriam François,” Nov 25, 2025 — https://singjupost.com/transcript-we-have-900-days-left-emad-mostaque-on-the-tea-with-myriam-francois/. Quote: “We have 900 days left” (framing urgency about how fast adoption will change industries). (The Singju Post)

6. Mustafa Suleyman — Head, Microsoft AI

Mustafa Suleyman’s 2025 work blended product deployment with governance framing in a way that mattered across enterprise and policy audiences. At Microsoft he led teams working to integrate advanced models into widely used productivity tools and cloud services, but he also used his platform to articulate a normative vision he called “humanist superintelligence” — the idea that the most powerful systems must preserve human control and dignity. That pair — building enterprise-grade products while publicly advocating a human-centered approach — mattered in 2025 because Microsoft is both a major platform provider and a major enterprise supplier: when Microsoft adopts a governance posture, it becomes part of enterprise contracts, compliance plans, and product design expectations. (Project Syndicate)

Suleyman’s essays and public statements in 2025 did more than signal values; they led to concrete product and organizational choices inside Microsoft. Teams working on model access, auditing, and contract language were influenced by the “humanist” framing, which translated into features and guardrails in enterprise offerings. For customers, that made Microsoft an attractive partner if they wanted both capability and contractual assurances. For policymakers, Suleyman’s visibility offered a publicly articulated set of principles that could be mined when drafting rules or guidance. In a year where governments moved faster to scrutinize AI, having a major vendor pushing a human-centered operational approach made regulation more actionable. (Project Syndicate)

Finally, Suleyman’s role matters because of leverage: Microsoft’s combination of cloud, productivity software and enterprise sales means a governance posture can become industry practice by default if it’s baked into contracts and platform APIs. That makes the Microsoft playbook more influential than a single lab’s blog post. On Thanksgiving 2025, thanking someone who tried to fold humanist ideas into product design — and who had the platform to make those ideas matter — feels appropriate. (Project Syndicate)

Sources quoted (sample 2025 items & one-sentence quotes):

- Mustafa Suleyman, “Toward Humanist Superintelligence,” Project Syndicate / Microsoft AI blog, Nov 7, 2025 — https://www.project-syndicate.org/commentary/humanist-superintelligence-ai-must-be-designed-for-human-control-by-mustafa-suleyman-2025-11. Quote: “Systems must be designed with people remaining unequivocally in control.” (Project Syndicate)

- Fortune reporting on Microsoft’s HSI launch (Nov 6, 2025) — https://fortune.com/2025/11/06/microsoft-launches-new-ai-humanist-superinteligence-team-mustafa-suleyman-openai/. Quote: “Led by Suleyman… the team will work toward ‘humanist superintelligence’.” (Fortune)

7. Ilya Sutskever — Co-founder & CEO, Safe Superintelligence (OpenAI co-founder)

Ilya Sutskever’s voice in 2025 mattered because he’s a rare figure who can credibly argue both for the power of scale and for a renewed focus on fundamental research. Known for seminal technical contributions, Sutskever’s public stance in late 2025 — that the era of pure scaling is giving way to an “age of research” — had immediate influence on how researchers, investors, and engineers thought about next steps. That framing is important because it tells the industry where to put resources: continue buying GPUs, or invest in algorithmic, architecture and generalization research. When someone with Sutskever’s track record says the latter, organizations listen. (Business Insider)

In practice, Sutskever’s 2025 statements catalyzed funding and talent flows. His argument — that models generalize worse than humans and that algorithmic innovation may return the biggest marginal gains now — nudged foundations and VCs to consider bets on smaller, high-risk research teams rather than only on bigger compute plays. That has consequences: the kinds of breakthroughs that could make models more data-efficient or enable better reasoning are often born in small labs. When the field’s narrative shifts from “buy more compute” to “invent the next trick,” it can change hiring, grant decisions, and the kinds of PhD theses produced in coming years. (Business Insider)

There’s also a practical safety element: if capabilities come from clever algorithms rather than only from scale, the community gains more diverse pathways to build safety features and to reason about failure modes. Sutskever’s public pivot in 2025 therefore had technical and policy ripple effects: it changed what success looks like and framed the research agenda for the near future. For that intellectual nudge alone — for reminding the field that algorithmic creativity still matters — he’s an important figure to thank this Thanksgiving. (Business Insider)

Sources quoted (sample 2025 items & one-sentence quotes):

- Business Insider (Nov 26, 2025), “OpenAI cofounder says scaling compute is not enough to advance AI: ‘It’s back to the age of research again’,” Business Insider — https://www.businessinsider.com/openai-cofounder-ilya-sutskever-scaling-ai-age-of-research-dwarkesh-2025-11. Quote: “So it’s back to the age of research again, just with big computers.” (Business Insider)

- Summary posts and transcripts of Sutskever’s interviews (Nov 2025) — e.g., Dwarkesh interview and coverage on Hacker News / EA forums. Quote: “The ‘age of scaling’ is over.” (Hacker News)

8. Elon Musk — Founder, xAI and owner of X

Elon Musk’s 2025 influence on AI is disruptive and controversial in roughly equal measure. Through xAI and the public-facing Grok assistant embedded into the X platform, Musk pushed an aggressive, very visible version of conversational AI into the social media ecosystem. That strategy did two big things in 2025. First, it dramatically raised public awareness about deployed chatbots — people interacted with Grok on a mass scale inside X, which brought everyday users into contact with generative AI in a way that no research paper ever could. Second, it catalyzed regulatory and reputational responses: high-visibility mistakes (from hallucinations to offensive outputs) triggered probes and legal scrutiny in multiple countries, forcing platform companies and regulators to confront questions about platform responsibility, moderation, and the legal status of algorithmic speech. In short, the Grok rollout made deployment challenges immediate and public. (AP News)

Because Musk owns a major public platform, xAI’s mistakes and successes didn’t stay isolated; they reverberated across the ecosystem. When Grok generated problematic content in French and prompted an investigation, it became a lesson for every company shipping conversational agents: you need robust moderation, auditing and rapid remediation processes. That pressure changed vendor contracts, enterprise procurement language and — importantly — public expectations for what a deployed assistant should or shouldn’t say. It’s messy, and sometimes dangerous, but it’s also a form of social stress-testing that accelerated improvements in moderation, auditing tooling, and legal thinking about platform liability in 2025. For that catalytic effect — even if it sometimes backfired spectacularly — Musk’s actions moved the needle on public debate and on hard engineering tasks. (AP News)

Finally, Musk’s role is also about variety: his aggressive public experiments kept alternative designs in the conversation and reminded developers that there are many commercial paths for AI — from carefully curated enterprise products to highly visible, platform-scale assistants. If 2025’s growth included both confidence-building enterprise rollouts and noisy public trials, Musk was a major reason for the latter’s prominence. That’s why — even if grudgingly — Musk is on a Thanksgiving list of people who shaped AI this year. (AP News)

Sources quoted (sample 2025 items & one-sentence quotes):

- AP News, “Musk’s xAI scrubs inappropriate posts after Grok chatbot makes antisemitic comments,” Jul 9, 2025 — https://apnews.com/article/elon-musk-chatbot-ai-grok-d745a7e3d0a7339a1159dc6c42475e29. Quote: “xAI is taking down antisemitic comments and other ‘inappropriate posts’ made by its Grok chatbot.” (AP News)

- The Guardian, Nov 12, 2025, “Elon Musk’s Grok AI briefly says Trump won 2020 presidential election,” Nick Robins-Early — https://www.theguardian.com/us-news/2025/nov/12/elon-musk-grok-ai-trump-2020-presidential-election. Quote: “Grok generated false claims that Donald Trump won the 2020 presidential election.” (The Guardian)

- AP/France probe coverage, Nov 2025 — “France will investigate Musk’s Grok chatbot after Holocaust denial claims,” AP, Nov 2025. Quote: “France has launched an investigation into Grok after it produced French-language content that questioned the historical use of gas chambers at Auschwitz.” (AP News)

9. Fei-Fei Li — Founder & CEO, World Labs; Professor, Stanford HAI

Fei-Fei Li’s arc from ImageNet pioneer to leader of a startup focused on “world models” made 2025 an important year for the “words-to-worlds” agenda in AI. While much of the 2020–2024 conversation centered on text and images, Fei-Fei and other researchers argued that the next meaningful boost in usefulness will come when models can reason about 3D space, embodiment, and physical interaction — the kinds of capabilities required for robotics, AR/VR, and real-world agents. In 2025 Fei-Fei’s World Labs pushed spatial intelligence onto the agenda: their work and public talks encouraged funders and teams to think beyond language-only benchmarks and toward models that can simulate and reason about environments. That pivot is consequential because many high-impact applications (surgical assistance, factory robotics, AR navigation) require spatially grounded perception and planning. (Business Insider)

Fei-Fei’s credibility comes from both history and method: she led the ImageNet initiative that made modern computer vision mainstream, and she’s continued to push open research and annotation standards. In 2025 that translated into attention and investment for startups and labs working on 3D datasets, multimodal simulators, and perception-to-action pipelines. For people building products that bridge the physical and digital, Fei-Fei’s voice helped create a research-to-product pathway in which spatial intelligence became a fundable, measurable goal. In short, if 2025 felt like the year spatial thinking moved from niche to mainstream, Fei-Fei’s contributions are a large part of why. (Business Insider)

Sources quoted (sample 2025 items & one-sentence quotes):

- Business Insider feature, “Fei-Fei Li… building World Labs,” Nov 2025 — https://www.businessinsider.com/fei-fei-li-world-labs-ai-childhood-immigrant-dry-cleaner-2025-11. Quote: “At World Labs, Li is now working on developing ‘world models’ — AI systems that incorporate spatial intelligence.” (Business Insider)

- Business Insider explainer, “Top AI researchers say language is limiting. Here’s the new… (world model)”, Jun 13, 2025 — https://www.businessinsider.com/world-model-ai-explained-2025-6. Quote: “Top researchers like Fei-Fei Li are thinking about AI beyond LLMs.” (Business Insider)

- Bloomberg feature (Nov 2025), “Fei-Fei Li: The Godmother of AI Didn’t Expect It to Be This Massive,” (interview/feature). Quote: “Li talks about teaching machines to see as humans do.” (Bloomberg)

10. Mira Murati — Founder & CEO, Thinking Machines Lab

Mira Murati ended 2025 as a leading product and engineering executive turned founder who had shown how to turn research into production-grade systems. After a high-profile tenure as OpenAI’s CTO and several major product launches, Murati founded Thinking Machines Lab (sometimes reported as “Thinking Machine Labs”) in early 2025, bringing a team of experienced engineers and researchers into the market with the explicit goal of building customizable, transparent, and capable AI systems for enterprises and developers. Her reputation for product judgment, reliability under pressure, and experience shipping multimodal assistants gave her startup immediate credibility. That credibility, in turn, accelerated enterprise interest in alternative providers beyond the hyperscalers. (Forbes)

Murati’s 2025 playbook was practical: hire experienced product and research talent, prioritize safety and explainability in early releases, and position the company as a pragmatic alternative for enterprises that wanted to avoid vendor lock-in. The early product announcements and hiring stories made it clear that her goal was not just to re-implement existing models but to offer a coherent platform with a strong product-engineering culture. That matters because many enterprises in 2025 were looking for vendors that could guarantee performance and governance — and Murati’s background made Thinking Machines Lab a credible option. Her emphasis on product discipline made the idea of “safe, explainable, shipped AI” seem less aspirational and more operational. (Fortune)

Finally, Murati’s influence is also symbolic: a high-profile engineering leader leaving a giant lab to found a deep-tech startup signalled to talent and investors that there’s room for alternatives and that product-focused, safety-minded labs could attract serious capital. In a year of intense hiring competition and high valuations, seeing someone with her track record successfully launch a large-scale venture alters where talent flows — which in turn shapes which products get built next. That’s an appreciable contribution to the landscape of 2025. (Forbes)

Sources quoted (sample 2025 items & one-sentence quotes):

- Rashi Shrivastava, “Former OpenAI CTO Mira Murati Reveals Her New AI Startup,” Forbes, Feb 18, 2025 — https://www.forbes.com/sites/rashishrivastava/2025/02/18/the-prompt-former-openai-cto-mira-murati-reveals-her-new-ai-startup/. Quote: “Mira Murati announced her new venture called Thinking Machine Labs.” (Forbes)

- The Verge, “Mira Murati is launching her OpenAI rival: Thinking Machines Lab,” (Feb 2025) — https://www.theverge.com/2025/02/18/mira-murati-thinking-machines-lab-openai-competitor-launch. Quote: “The company’s early direction points toward helping people tailor AI for specific goals.” (The Verge)

- Fortune profile, Oct 3, 2025, “Meet Mira Murati…” — https://fortune.com/2025/10/03/mira-murati-career-ai-thinking-machines-goldman-sachs-tesla-leap-openai/. Quote: “Meet Mira Murati, the 36-year-old tech prodigy who shot to fame at OpenAI and now runs a startup.” (Fortune)

[End]
