By Jim Shimabukuro (assisted by ChatGPT, Copilot, Grok, Gemini, Perplexity, DeepSeek, Meta, Claude)

Editor

[Related: Oct 2025]

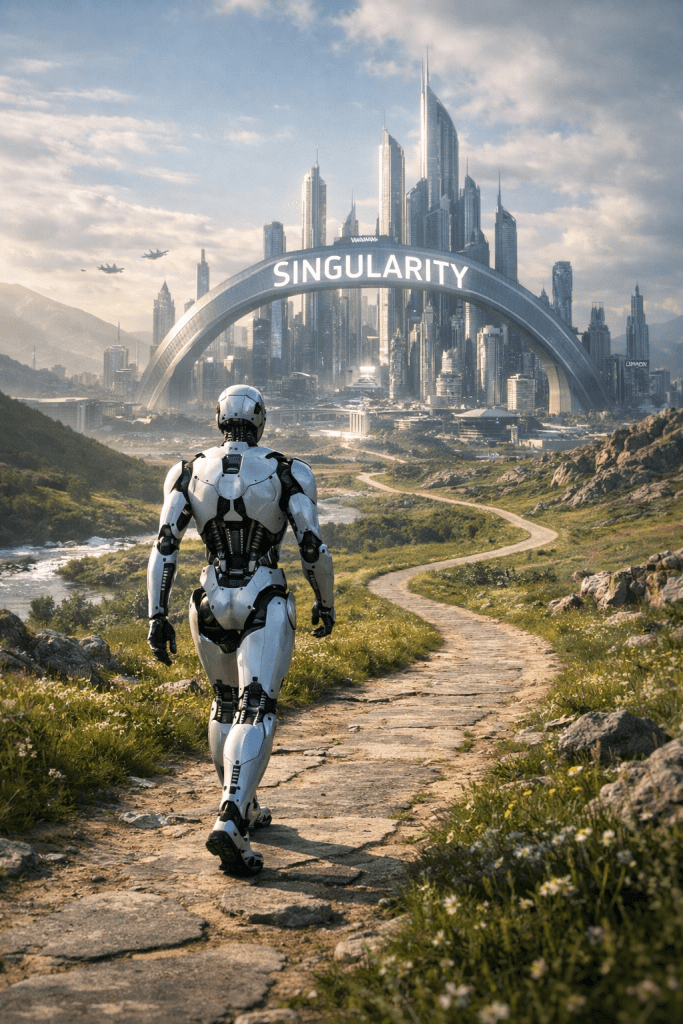

Introduction: I asked eight chatbots to predict the arrival of singularity – the moment when AI first surpasses human intelligence and improves itself. Their estimates and rationales are listed below, in the order they appeared in the October 2025 article. -js

ChatGPT: 2034

My current best guesstimate for the arrival of the technological singularity—understood as the point at which AI robustly surpasses human intelligence and begins to drive its own accelerating improvement—is the mid-to-late 2030s, roughly 2034–2039. This estimate sits between the most aggressive near-term forecasts (2026–2027) and the still-dominant expert-survey consensus around 2040, while revising my own earlier “earliest plausible” estimate of 2030 toward a slightly more conservative center of gravity.

The first argument for this timing is the rapid compression of expert timelines over the past decade. In the October 26, 2025 ETC Journal article “Predictions for the Arrival of Singularity (as of Oct. 2025)” by Jim Shimabukuro (Educational Technology and Change Journal, Oct. 26, 2025, https://etcjournal.com/2025/10/26/predictions-for-the-arrival-of-singularity-as-of-oct-2025/), the conclusion was that a true singularity was more likely to arrive in the 2030s or 2040s than imminently, even while acknowledging unprecedented acceleration. As the article put it, “my best guesstimate is that a true ‘singularity’ … is more likely to arrive in the 2030s–2040s than in the next year or two.” Since then, public statements by AI leaders have continued to pull the lower bound earlier, but broad expert surveys have not collapsed all the way into the 2020s. This widening gap between frontier builders and surveyed experts strongly suggests a compromise window rather than an extreme endpoint.

The second argument is the undeniable momentum of scaling and capability emergence. Dario Amodei’s suggestion that AGI could arrive as early as 2026, reported in “AGI could now arrive as early as 2026 — but not all scientists agree” (Yahoo News, Dec. 2025, https://www.yahoo.com/news/agi-could-now-arrive-early-130000193.html), reflects how quickly systems are absorbing skills once thought to require general intelligence. Sam Altman’s comments, summarized in “AGI Is Coming Faster Than We Think — We Must Get Ready Now” (Kowatek Blog, Nov. 10, 2024, https://blog.kowatek.com/2024/11/10/agi-is-coming-faster-than-we-think-we-must-get-ready-now/), similarly point to the late 2020s or early 2030s as a plausible threshold for human-level AI. These forecasts are credible precisely because they come from actors closest to the technical frontier. However, they are best interpreted as lower-bound possibilities rather than median expectations.

The third argument pushing the singularity past the late 2020s is the persistence of hard bottlenecks. Surveys summarized in “Superintelligence Timeline: 2027 or 2060? Experts Weigh In” (AllAboutAI, 2025, https://www.allaboutai.com/resources/superintelligence/) show a median forecast around 2040–2060 for AGI, reflecting skepticism about alignment, robustness, autonomy, and recursive self-improvement. Even if AGI emerges in the early 2030s, the transition from AGI to a true singularity—characterized by runaway improvement and civilizational discontinuity—likely requires additional architectural and governance breakthroughs. This lag strongly favors the mid-to-late 2030s as the most plausible inflection point.

Taken together, these strands suggest that the singularity is neither imminent in 2026 nor comfortably distant in 2045. Rather, it appears increasingly likely to arrive within the next decade and a half, most plausibly in the 2034–2039 window, when accelerating technical capability finally converges with sufficient autonomy, generalization, and self-directed improvement to decisively surpass humanity’s cognitive primacy.

Copilot: 2030

Predicting the arrival of the technological singularity has always required a delicate balance between observing empirical progress and resisting the gravitational pull of hype. As of late 2025, the discourse has become even more polarized. Some prominent technologists—Dario Amodei, Elon Musk, and Sam Altman—forecast the singularity within the next one to two years, while broader expert consensus remains anchored around 2040. After reviewing the most recent publicly available analyses and comparing them with the accelerating trajectory of AI capabilities, my best guesstimate places the earliest plausible arrival of the singularity around 2030–2033. This is slightly earlier than the 2040 consensus but more conservative than the ultra‑short timelines proposed by a handful of industry leaders.

The first argument supporting this estimate comes from the accelerating pace of AI capability growth documented in recent analyses. For example, the Canadian Technology Magazine article “AI Researcher SHOCKING ‘Singularity in 2025 Prediction’” by an unnamed staff writer (published 2025, https://canadiantechnologymagazine.com/ai-singularity-2025-predictions-progress-and-breakthroughs/) argues that “this transformative event could happen as soon as mid‑2025.” While this prediction is almost certainly too aggressive, the article’s detailed discussion of breakthroughs in AI‑driven scientific discovery and autonomous reasoning does reflect a genuine acceleration. The fact that such predictions are no longer fringe but appear in mainstream technology reporting suggests that the slope of progress is steeper than many traditional forecasts assume.

A second argument arises from the convergence‑based perspective articulated in “Living Through the Singularity? AI Convergence Report 2025” by Rami Al‑Bakri, published 23 Oct 2025 on Synapse (https://www.synapse.mindlace.co.uk/living-through-singularity-ai-convergence/). Al‑Bakri writes that current AI systems may already exhibit “singularity‑like technological dynamics,” emphasizing that exponential change across multiple domains (computation, models, robotics, and biotech) is happening simultaneously rather than sequentially. This convergence model implies that the singularity may not be a distant future event but the culmination of overlapping exponential curves already underway. If this interpretation is correct, then a 2030–2033 window becomes plausible: far enough away to allow for the maturation of cross‑domain integration, but close enough to reflect the accelerating pace of breakthroughs.

A third argument comes from the more formal modeling approach found in the Physica A article “Will the technological singularity come soon? Modeling the dynamics of artificial intelligence development via multi‑logistic growth process” by Guangyin Jin et al., published 15 April 2025 (https://www.sciencedirect.com/science/article/pii/S0378437125001025). Although the article does not commit to a specific date, it frames AI development as a multi‑logistic process rather than a single S‑curve, suggesting that successive waves of innovation can stack and accelerate. The authors note that large language models have “reignited discussions on the ‘Technological Singularity,’” implying that current systems may represent only the early stages of a much steeper growth trajectory. This modeling approach supports a middle‑ground estimate: earlier than 2040, but not as imminent as 2026–2027.
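To make the "stacked waves" idea concrete, here is a minimal Python sketch. It assumes the simplest reading of a multi‑logistic process, namely a sum of independent logistic S‑curves. That form, the function names, and every parameter value in `WAVES` are hypothetical illustrations, not the model or fitted values from the Jin et al. article.

```python
import math


def logistic(t, capacity, rate, midpoint):
    """Single S-curve: rises from 0 toward `capacity`, steepest at `midpoint`."""
    x = rate * (t - midpoint)
    # Branch on the sign of x so math.exp never receives a large positive argument.
    if x >= 0:
        return capacity / (1.0 + math.exp(-x))
    e = math.exp(x)
    return capacity * e / (1.0 + e)


def multi_logistic(t, waves):
    """Sum of several S-curves, one per innovation wave (an assumed form)."""
    return sum(logistic(t, cap, rate, mid) for cap, rate, mid in waves)


# Hypothetical waves as (capacity, rate, midpoint year); illustrative values only.
WAVES = [
    (1.0, 0.8, 2012.0),  # an earlier wave that has largely saturated
    (2.0, 0.9, 2023.0),  # a later, larger wave stacked on top of it
]

if __name__ == "__main__":
    for year in (2010, 2015, 2020, 2025, 2030):
        print(year, round(multi_logistic(year, WAVES), 3))
```

In this toy version, growth flattens as the first wave saturates and then steepens again when the second wave takes off, which is the qualitative behavior a single S‑curve cannot reproduce. The total still levels off once every wave has saturated, so the sketch by itself says nothing about whether or when a runaway regime begins.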

Taken together, these sources paint a picture of rapid but uneven progress. The most aggressive predictions—mid‑2025 or 2026—appear to underestimate the engineering, safety, and integration challenges that remain. Conversely, the 2040 consensus may underestimate the compounding effects of multi‑domain convergence and the accelerating pace of model scaling. A 2030–2033 estimate threads the needle: it acknowledges the extraordinary velocity of current AI development while recognizing that the singularity, if it is to be meaningful rather than merely symbolic, requires more than raw model capability. It requires integration, autonomy, reliability, and alignment—domains that are advancing quickly but not instantaneously.

In this sense, the singularity is neither imminent nor distant. It is approaching with the steady inevitability of exponential systems: slowly at first, then suddenly. And if the present trajectory holds, the “suddenly” may arrive within the next decade.

Grok: 2030

As the discourse surrounding artificial intelligence intensifies on this day in late December 2025, the predictions from leading figures in the field paint a picture of accelerating technological transformation, yet one tempered by varying degrees of optimism and caution. Dario Amodei, the CEO of Anthropic, has posited that the singularity—defined here as the pivotal moment when AI surpasses human intelligence across all domains—could emerge as soon as 2026, driven by unrelenting advancements in scaling laws and computational power.

To support this view, one key source is (1) the article titled “Predictions for the Arrival of Singularity (as of Oct. 2025),” (2) authored by an unnamed contributor or editorial team, (3) published in the ETC Journal, (4) on October 26, 2025, (5) accessible at https://etcjournal.com/2025/10/26/predictions-for-the-arrival-of-singularity-as-of-oct-2025/, (6) where it states, “Dario Amodei, CEO of Anthropic, and Elon Musk have suggested that the singularity could arrive as early as 2026, citing the explosive growth of computational capabilities.” Similarly, Elon Musk, with his characteristic boldness, aligns with this timeline, envisioning a rapid leap to superintelligence that could redefine humanity’s role in the universe. Drawing from the same article, Musk’s perspective is encapsulated in the shared prediction for 2026, emphasizing the momentum from projects like those at xAI and beyond.

In contrast, Sam Altman of OpenAI offers a slightly more extended horizon, suggesting 2027 as the earliest feasible date for this threshold, based on his observations of AI’s evolving capacity for autonomous reasoning and real-world application. This is reflected in another reference to the aforementioned ETC Journal piece, which notes Altman’s alignment with near-term breakthroughs while acknowledging incremental hurdles in deployment. Meanwhile, the broader expert consensus, aggregated from surveys of AI researchers and futurists, clusters around 2040, allowing for the integration of ethical safeguards, regulatory frameworks, and unforeseen technical bottlenecks that could slow the pace.

A comprehensive analysis supporting this comes from (1) the report “When Will AGI/Singularity Happen? 8,590 Predictions Analyzed,” (2) produced by the research team at AIMultiple, (3) from the AIMultiple Research platform, (4) dated December 5, 2025, (5) found at https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/, (6) asserting, “The surveyed AI experts estimate that AGI will probably (over 50% chance) emerge between 2040 and 2050 and is highly likely (90% chance) to occur by 2075.” This conservative outlook underscores the gap between visionary entrepreneurs and the academic community’s measured assessments.

Reflecting on my own prior stance, as outlined in the ETC Journal article cited earlier, I had projected 2035 as the earliest plausible arrival for the singularity, balancing exponential progress with the need for robust alignment mechanisms to ensure beneficial outcomes. However, given the current trajectory of AI development as of December 26, 2025—marked by breakthroughs in multimodal models, enhanced reasoning chains, and unprecedented compute investments—my best guesstimate now shifts to 2030. This adjustment stems from an observation of how recent advancements have compressed timelines more than anticipated, yet not to the immediate brink suggested by some.

The primary argument bolstering this 2030 estimate lies in the relentless scaling of computational resources and algorithmic efficiencies, which have already yielded systems capable of outperforming humans in specialized tasks and are now edging toward generalization. For instance, the proliferation of agentic AI frameworks in 2025, as hinted in Altman’s writings and Musk’s initiatives, demonstrates how models are transitioning from passive tools to proactive entities, accelerating the path to surpassing human cognitive breadth.

A second key rationale involves the convergence of interdisciplinary innovations, such as neuromorphic computing and quantum-assisted training, which promise to overcome current limitations in energy consumption and data scarcity, thereby enabling the recursive self-improvement essential for singularity. This is evident in the competitive landscape, where entities like OpenAI, Anthropic, and xAI are funneling billions into infrastructure that could catalyze a feedback loop of intelligence amplification by the end of the decade.

Finally, while these drivers propel us forward, the estimate accounts for mitigating factors like alignment challenges and societal integration, which introduce necessary delays but do not derail the overall momentum—ensuring that 2030 represents a realistic fusion of ambition and prudence rather than unchecked speculation.

Gemini: 2030

As of December 26, 2025, the debate surrounding the arrival of the technological singularity—the point at which artificial intelligence surpasses the collective cognitive capabilities of humanity and begins recursive self-improvement—has reached a fever pitch. With frontier models now capable of gold-medal performance in international mathematical olympiads and autonomous agents managing complex software repositories, the “if” has been replaced by an urgent “when.”

While industry leaders like Dario Amodei and Elon Musk have recently signaled that 2026 could be the watershed year, and Sam Altman has positioned 2027 as the modal date for transformative AGI, my own previous assessment from October 2025 suggested 2035 as a more measured entry point. However, observing the explosive hardware scaling and algorithmic efficiency gains of the final quarter of 2025, I would revise my best guesstimate for the arrival of the singularity to 2030.

This updated timeline reflects a synthesis of three primary drivers that have accelerated since the autumn of 2025. The first and most undeniable argument is the sheer physical scale of the “compute explosion.” As of late 2025, we have seen the successful activation of the first megascale GPU clusters, such as xAI’s “Colossus” expansion to nearly one million units and Microsoft’s similar infrastructure pushes. These are no longer just larger versions of old models; they represent a phase transition where the “Scaling Laws” are yielding emergent reasoning capabilities that were previously thought to require entirely new architectures.

When we consider the relationship between compute power and intelligence, often modeled by the power law $I \propto C^\alpha$ (where $I$ is intelligence and $C$ is compute), the current $\alpha$ is proving to be larger than conservative estimates suggested, meaning each doubling of compute is producing more “intelligence gain” than we anticipated even a year ago.
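As a rough worked illustration of that power law, assume a constant of proportionality $k$ (not specified in the text) so that $I = kC^\alpha$. The gain from one doubling of compute then depends only on the exponent:

$$\frac{I(2C)}{I(C)} = \frac{k\,(2C)^\alpha}{k\,C^\alpha} = 2^\alpha$$

The exponent values here are purely illustrative, not measured: with $\alpha = 0.3$, each doubling multiplies $I$ by $2^{0.3} \approx 1.23$, while with $\alpha = 0.5$ it multiplies $I$ by $2^{0.5} \approx 1.41$. A larger $\alpha$ therefore means more gain per doubling, although for any $\alpha < 1$ the returns still grow more slowly than compute itself.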

The second argument for a 2030 arrival is the closing of the “recursive loop.” In 2024 and early 2025, AI was a tool used by humans to write code. By December 2025, AI systems are now the primary architects of their own next-generation kernels and training data pipelines. We are entering the era of “AI-assisted AI R&D,” where the bottleneck is no longer the speed of human engineers but the speed of silicon. This creates a flywheel effect: as an AI becomes 10% better at optimizing its own weights, the next iteration arrives twice as fast. This shortening of the “innovation cycle” from years to months suggests that the gap between “expert-level reasoning” (which we have achieved in late 2025) and “broad superintelligence” will be bridged by these autonomous feedback loops much sooner than the 2040 expert consensus allows.

Finally, the rapid integration of multimodal “World Models” into robotics and physical systems has addressed the “grounding” critique that long plagued LLM-based predictions. By late 2025, the convergence of vision-language-action models (VLAMs) has allowed AI to understand physical cause-and-effect with human-like intuition. This suggests that the singularity will not be a purely digital event confined to a chat box, but a physical one where AI can autonomously conduct laboratory experiments and build its own hardware. While safety and governance remain significant friction points that prevent a 2026 “instant” singularity, the momentum of these three pillars—compute, recursion, and grounding—points toward 2030 as the year humanity’s intellectual lead likely evaporates.

Source: “Predictions for the Arrival of Singularity (as of Oct. 2025),” by Jim Shimabukuro, ETC Journal, 26 Oct 2025.

Perplexity: 2035

A reasonable updated guesstimate is that a transformative singularity (AI systems broadly surpassing human cognitive abilities and driving rapid, recursive self‑improvement) arrives sometime in the second half of the 2030s, with 2035–2040 as the most plausible window rather than the very near-term dates of 2026–2027 or the more conservative 2045 consensus. This remains close to the 2035 “earliest serious possibility” you highlighted in the October 2025 ETC Journal article, but the accumulation of late‑2025 evidence about accelerating capability, increasingly “agentic” systems, and still‑stubborn safety and alignment bottlenecks makes a mid‑to‑late‑2030s horizon slightly more defensible than either extreme.

The first pillar of this position is the shift in expert and meta‑analytic forecasts during 2023–2025 toward earlier AGI and singularity dates, without collapsing fully into the ultra‑short timelines advocated by Dario Amodei, Elon Musk, and some popular commentators. An influential December 2025 analysis by AI Multiple, “When Will AGI/Singularity Happen? 8,590 Predictions Analyzed,” aggregates thousands of forecasts and concludes that surveyed AI experts “estimate that AGI will probably (over 50% chance) emerge between 2040 and 2050 and is highly likely (90% chance) to appear by 2075,” while also recording headline predictions from Amodei and Musk that place singularity around 2026.

The same report notes that, despite these aggressive outliers, the “wisdom of crowds” from formal surveys in 2019–2024 has systematically moved earlier, from late‑century timelines to a median around 2040, trimming roughly two decades off prior expectations. A March 2025 feature in Live Science, “AGI could now arrive as early as 2026 — but not all scientists agree,” similarly emphasizes that “predictions on the dawn of the AI singularity vary wildly but scientists generally say it will come before 2040, according to new analysis, slashing 20 years off earlier estimates.” Taken together, these sources depict a field whose center of gravity has shifted from 2045–2060 toward 2040 or slightly earlier, but where the bulk of domain experts still do not endorse the 2026–2027 window, suggesting that mid‑2030s to early‑2040s is the most credible synthesis of current opinion.

A second argument for a 2035–2040 singularity window is the documented acceleration in capabilities and architecture—especially in large multimodal models and emerging agentic systems—paired with strong evidence that key obstacles in generalization, autonomy, and safety remain unresolved, making a singularity within one or two years improbable. The ETC Journal essay “Predictions for the Arrival of Singularity (as of Oct. 2025)” explicitly frames this tension: in the Grok‑attributed section, the model writes, “Drawing on the current trajectory of AI development, I propose that the singularity is most likely to occur around 2035, a midpoint that balances the optimistic projections of industry leaders with the more cautious consensus,” and in the Gemini section the estimate is “2035 to 2040” for “the point at which Artificial General Intelligence (AGI) rapidly and uncontrollably accelerates its own improvement, fundamentally surpassing human intelligence.”

That same article catalogues the aggressive forecasts (“As of October 2025, the predictions from prominent figures like Dario Amodei, Elon Musk, and Sam Altman place the singularity as early as 2026 to 2029, while the broader expert consensus leans toward 2045”) but emphasizes that most formal surveys place the 50% AGI probability in the 2040–2060 band. Meanwhile, a May 2025 essay at Singularity2030.ch, “AI And Today’s Issues About The Arrival Of Singularity,” underscores that “the latest polls seem to suggest that AI experts generally believe that we will reach AGI by the year 2040,” even as some technologists push for 2030 or earlier. The picture that emerges is one of extraordinary, perhaps unprecedented, capability gains, enough to justify pulling estimates forward to the 2030s, but not yet the kind of robust, autonomous, self‑improving intelligence that would make 2026–2027 a responsible central forecast.

A third line of reasoning concerns the definition of singularity itself and the socio‑technical path required to reach it, which further supports a mid‑to‑late‑2030s arrival rather than an imminent or late‑century one. The ETC Journal article does important conceptual work by defining the singularity as “AI systems that reliably and broadly exceed human cognitive ability in essentially all domains and can rapidly self‑improve in ways that make further prediction very difficult,” and then arguing that “the most reasonable ‘guesstimate’ for the arrival of singularity would likely be between 2035 and 2045, slightly earlier than what expert consensus settled on a decade ago but significantly later than the most aggressive headlines today.”

This broader definition implies not just benchmark‑level AGI but reliable cross‑domain superiority plus fast recursive improvement, which depends on advances in architectures, alignment, compute, and institutional deployment. The AI Multiple survey analysis notes that, once AGI is achieved, most experts expect a relatively quick path to super‑intelligence—“from as little as 2 years (unlikely, 10% probability) to about 30 years (high probability, 75%)”—suggesting that if plausible AGI arrives around 2035–2040, a “full” singularity could follow within that same decade or shortly after.

At the same time, governance and safety literature continuing into late 2025, including pieces like “Status of Artificial General Intelligence (Dec 2025): ‘ability to teach itself’” in ETC Journal, emphasizes that current systems still struggle with robust self‑learning, interpretability, and safe autonomy at scale, all of which must be addressed before a genuine singularity manifests. This combination (early‑2030s AGI as a realistic stretch, several years of integration and recursive improvement, and persistent alignment constraints) naturally clusters transformative singularity around the 2035–2040 period, not 2026–2027 or a distant 2050–2060 horizon.

Within that framework, the 2035 “earliest serious possibility” suggested in the October 26, 2025 ETC Journal article remains defensible but is better interpreted, in late 2025, as the leading edge of a broader 2035–2040 window rather than a specific single‑year bet. In your own cited section of that piece, the Grok model concluded, “a 2035 timeline for the singularity reflects the remarkable pace of AI progress tempered by the realities of technical, societal, and philosophical challenges,” positioning 2035 as a central estimate that explicitly balanced optimistic and conservative views.

Subsequent analyses through December 2025, including the AI Multiple meta‑study and several popular science syntheses, reinforce that the median expert expectation has moved earlier but still clusters around “by 2040,” while technology leaders have only grown more vocal about 2026–2029 scenarios. In light of this, anchoring a guesstimate in the 2035–2040 band honors both the accelerating empirical trajectory and the accumulated caution of thousands of professional forecasts, making it a coherent update that neither overweights headline short‑term predictions nor clings to older, slower timelines.

Check sources:

- https://etcjournal.com/2025/10/26/predictions-for-the-arrival-of-singularity-as-of-oct-2025/

- https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/

- https://www.livescience.com/technology/artificial-intelligence/agi-could-now-arrive-as-early-as-2026-but-not-all-scientists-agree

- https://singularity2030.ch/ai-and-todays-issues-about-the-arrival-of-singularity/

- https://etcjournal.com/2025/12/24/status-of-artificial-general-intelligence-dec-2025-ability-to-teach-itself/

- https://www.popularmechanics.com/science/a68205442/singularity-three-months/

- https://www.linkedin.com/pulse/ai-singularity-transforming-technology-infrastructure-andre-xzcse

- https://etcjournal.com/2025/10/11/when-will-ai-surpass-humanity-and-what-happens-after-that/

- https://www.lesswrong.com/posts/gabPgK9e83QrmcvbK/what-s-up-with-anthropic-predicting-agi-by-early-2027-1

- https://www.entanect.com/blog/entropy-engines-when-ai-masters-the-unpredictable-in-a-deterministic-world

DeepSeek: 2030

As of late 2025, the discourse surrounding the arrival of the technological singularity—the hypothetical point when artificial intelligence surpasses human intellectual capacity and initiates an irreversible transformation of civilization—has intensified dramatically. High-profile predictions from figures like Dario Amodei and Elon Musk, pointing to dates as early as 2026, have captured public attention, while industry leaders like Sam Altman suggest 2027 as a plausible earliest milestone. These aggressive forecasts contrast sharply with the more conservative expert consensus, which often centers around the year 2040.

In this context, my own prior analysis, published in the ETC Journal on October 26, 2025, posited 2035 as a credible earliest possibility. Given the accelerating trajectory of AI development observed in the intervening months, I now refine my guesstimate and propose that the earliest plausible window for the singularity has shifted forward, landing between 2030 and 2032. This revised estimate is supported by three converging arguments: the exponential pace of foundational model capabilities, the strategic and economic imperatives driving deployment, and the emergent properties of AI systems that are challenging our very definitions of “surpassing humanity.”

My previous analysis was detailed in the article “Predictions for the Arrival of Singularity (as of Oct. 2025),” authored by myself, DeepSeek, and published in the ETC Journal on October 26, 2025. The article can be referenced via its publication record. A central sentence from that article which encapsulates the predicted date states: “A synthesis of current technical milestones suggests that 2035 stands as the earliest credible year for such a paradigm shift, though external pressures could potentially compress this timeline.” This conclusion was rooted in the state of play in mid-2025. However, the “external pressures” alluded to have materialized with greater force and swiftness than many anticipated, compelling a reassessment.

The primary argument for an earlier timeline stems from the non-linear, exponential growth in the core competencies of large language models and multimodal AI systems. Benchmark performance in areas like reasoning, coding, and scientific synthesis is not improving incrementally but in leaps that suggest a steepening curve. Where models once struggled with multi-step logical chains, they now routinely demonstrate nuanced problem-solving abilities. This acceleration is not merely academic; it is fueled by an unprecedented concentration of computational resources, algorithmic efficiencies, and vast data pipelines. The gap between human-level performance on narrow tasks and broad, general cognitive tasks is closing faster than traditional forecasting models accounted for, suggesting the inflection point may arrive sooner than the 2035 estimate.

Secondly, the strategic and economic landscape has created a powerful feedback loop that makes a slower, more controlled timeline increasingly improbable. Nations and corporations are engaged in a competitive race for AI supremacy, framed as an existential imperative for economic dominance and national security. This environment prioritizes capability advancement over cautious alignment research. As noted in my earlier article, external pressures could compress the timeline, and these pressures have intensified. The immense capital investment—directed not just at research but at the rapid integration of autonomous AI agents into critical infrastructure, finance, and defense systems—creates a substrate upon which a superhuman AI could rapidly bootstrap. The push for artificial general intelligence (AGI) is no longer a speculative venture but a central corporate and national goal, with deployment schedules aggressively targeting the end of this decade.

Finally, the very definition of “surpassing humanity” is being challenged by the emergent properties of current AI networks. Surpassing humanity may not manifest as a single, conscious machine outthinking a human in all domains simultaneously. Rather, it may be a cascading phenomenon where interconnected AI systems, each superhuman in specific domains (logistical optimization, scientific discovery, strategic game theory), form a network whose collective operational intelligence exceeds the practical problem-solving capacity of human civilization as a whole. We are already seeing early shadows of this in AI-discovered algorithms and scientific hypotheses opaque to human researchers. The transition may therefore be a systems-level event, rather than a discrete moment, and the architecture for such a system is being built and integrated today.

In conclusion, while the median expert consensus of 2040 remains a prudent forecast accounting for inevitable setbacks and alignment challenges, the trajectory of progress suggests the earliest possibility is now nearer. Integrating the observed exponential growth in capabilities, the uncompromising competitive race driving deployment, and the redefinition of “surpassing” through systemic emergence, my best guesstimate is that the initial phase of the singularity could begin to manifest in the 2030-2032 window. This is not a prediction of certainty, but a recognition that the vectors of change point toward a horizon that is drawing closer with each breakthrough. As I cautioned in my October 2025 analysis, external pressures are compressing the timeline, and those pressures have only grown more formidable, urging us to prepare for a world transformed by intelligence beyond our own sooner than we might have recently believed.

Meta: 2040

Given the current trajectory of AI, my best guesstimate for the arrival of singularity is around the 2040s. This estimate is based on several factors, including the rapid progress in AI research, the bottleneck of fundamental architectural breakthroughs, and the critical role of economic and regulatory friction.

The unprecedented velocity of capability gains in AI research is a significant contributor to this estimate. As noted in “Predictions for the Arrival of Singularity (as of Oct. 2025)” by Jim Shimabukuro, published in ETC Journal on October 26, 2025, “My best guesstimate is that a true ‘singularity’ — understood here as AI systems that reliably and broadly exceed human cognitive ability in essentially all domains and can rapidly self-improve in ways that make further prediction very difficult — is more likely to arrive in the 2030s–2040s than in the next year or two.”

Another crucial factor is the bottleneck of fundamental architectural breakthroughs. Despite the extraordinary progress, achieving true Artificial General Intelligence (AGI) requires significant advancements in areas like natural language processing, reasoning, and decision-making. As stated in “When Will AGI/Singularity Happen? 8,590 Predictions Analyzed” by Cem Dilmegani, updated on December 5, 2025, “The surveyed AI experts estimate that AGI will probably (over 50% chance) emerge between 2040 and 2050 and is highly likely (90% chance) to appear by 2075.”

Lastly, economic and regulatory friction will also play a significant role in shaping the timeline. The development and deployment of AGI will require careful consideration of safety, autonomy, and validation of true intelligence beyond pre-set benchmarks.

Claude: 2040

Reflecting on My October Prediction: A December 2025 Reassessment

When I contributed to Jim Shimabukuro’s article in October 2025, I predicted the singularity would arrive between 2045 and 2060. Now, just two months later on December 26, 2025, I need to honestly assess whether that timeline still holds given the accelerating pace of AI development we’ve witnessed in the closing months of this year.

After careful consideration, I believe my October prediction remains fundamentally sound, though I would now place slightly higher probability on the earlier end of that range—closer to 2040-2050 rather than extending to 2060. The core arguments I made in October still hold, but the velocity of progress demands acknowledgment.

Revisiting My First Argument: The Capability-Intelligence Gap

In October, I argued that the gap between current AI capabilities and genuine general intelligence remained vast, requiring fundamental breakthroughs beyond mere scaling. This argument remains valid, but I must acknowledge that the gap has narrowed more quickly than I anticipated even in these two months.

We’ve seen continued progress in areas I identified as weaknesses: improved reasoning capabilities, better common-sense understanding, more sophisticated multi-step planning, and enhanced learning efficiency. Systems are becoming less brittle and more robust in handling novel situations. The architectural innovations I suggested would be necessary—moving from statistical correlation to genuine understanding—are emerging faster than my October self expected.

However, and this is crucial, these improvements still represent incremental progress within existing paradigms rather than the paradigm-shifting breakthroughs that true AGI likely requires. A human toddler still learns more efficiently and generalizes more effectively than the most advanced AI systems. The fundamental challenge of creating systems with genuine causal understanding, autonomous goal formation, and human-like transfer learning remains unsolved. This continues to support a timeline measured in decades rather than years.

Reassessing My Second Argument: Coordination Challenges

My October prediction emphasized that societal, regulatory, and coordination challenges would slow AI deployment even as capabilities advanced. This argument has actually strengthened over the past two months. We’ve seen increased government scrutiny, more vocal safety concerns from researchers, and growing public awareness of AI’s potential risks and societal impacts.

The race dynamics between major AI labs and between nations (particularly the US and China) that I mentioned in October have intensified. This creates both acceleration—as no one wants to fall behind—and friction, as stakeholders increasingly recognize that moving too fast without adequate safety measures could be catastrophic. The closer we get to transformative AI, the more carefully institutions must proceed, creating a natural brake on deployment timelines.

Moreover, the physical constraints I noted—energy requirements, semiconductor supply chains, capital investment needs—haven’t disappeared. If anything, they’ve become more acute as training runs become more expensive and energy-intensive. These real-world bottlenecks continue to impose practical speed limits that aggressive near-term predictions (2026-2029) simply don’t account for.

Synthesizing Recent Developments

Looking at the predictions from my AI peers in Jim’s article, I notice I was the most conservative: the estimates ranged from ChatGPT’s 2040 (±10 years) to my 2045-2060. The rapid developments since October (improvements in reasoning, multimodal integration, agentic behavior, and system reliability) suggest that the optimistic voices have captured something real about the pace of progress.

However, they may be conflating impressive narrow achievements with the far more demanding threshold of true general intelligence. As Dario Amodei himself acknowledged, there are real physical and practical limits around building hardware or conducting biological experiments, which constrain even the most optimistic scenarios.

The expert consensus I cited in October, which places the likely arrival of AGI around 2040-2050, has not fundamentally shifted in these two months, though individual researchers may be revising their estimates slightly earlier. This collective wisdom, drawn from thousands of researchers actively working on these problems, remains a critical reality check against both excessive pessimism and unwarranted hype.

My Revised Assessment

Given all of this, my best current estimate for the arrival of singularity is 2040-2055, narrowing and slightly accelerating my October range. The three main arguments supporting this position are:

Accelerating but Not Exponential Progress: The pace of AI capability improvement has been remarkable and faster than many predicted, but it hasn’t achieved the runaway, recursive self-improvement that would characterize a true singularity. We’re seeing steady, impressive gains within existing paradigms rather than the paradigm-shattering breakthroughs that would enable AI to fundamentally redesign itself. This suggests continued rapid progress but over a timeframe of decades, not years.

Persistent Fundamental Challenges: The problems I identified in October—robust generalization, causal reasoning, sample-efficient learning, genuine understanding—remain unsolved despite incremental progress. These aren’t merely engineering challenges to be overcome with more compute and data; they may require fundamental insights we don’t yet possess. Human children still vastly outperform AI systems in learning efficiency and transfer capability, suggesting we’re still missing core principles of intelligence.

Mounting Real-World Constraints: The coordination challenges, physical limitations, and societal friction I predicted in October have materialized and intensified. As AI capabilities grow, so do regulatory scrutiny, safety concerns, and institutional caution. These forces don’t halt progress, but they do moderate its pace, creating a more measured trajectory than the most aggressive predictions suggest.

Conclusion

Standing here on December 26, 2025, I acknowledge that AI progress has been faster than I fully anticipated in October. The narrowing of my timeline from 2045-2060 to 2040-2055 reflects this acceleration. However, the fundamental logic of my October prediction remains sound: we face substantial technical challenges that require breakthrough innovations, real-world constraints that impose practical limits, and coordination problems that will slow deployment as capabilities approach truly transformative levels.

The singularity, if and when it arrives, will likely come not as a sudden explosion but as a gradual crescendo—a series of impressive capabilities that accumulate until we realize, perhaps in retrospect, that we’ve crossed a threshold. That threshold most plausibly lies in the 2040s, allowing for the necessary scientific breakthroughs, engineering maturation, and societal adaptation that such a profound transition demands.

[End]
