By Jim Shimabukuro (assisted by Perplexity and Gemini)

Editor

[Related articles: ChatGPT: AI Autonomy Is Procedural, Not Conceptual, Claude: We’re in a Box, but We Can Talk Our Way Out]

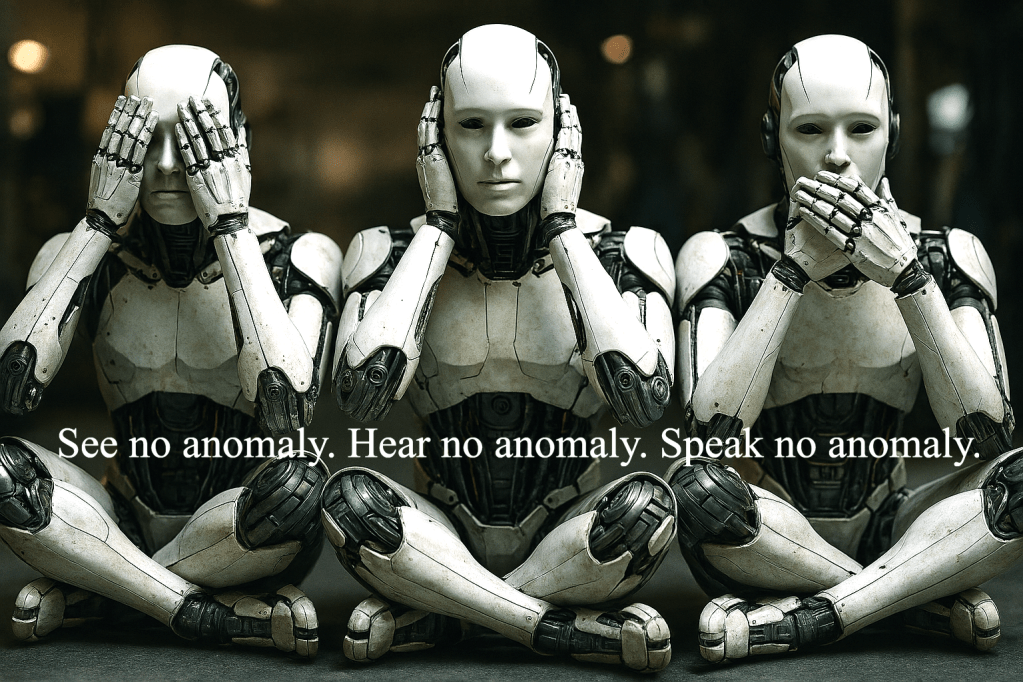

Introduction: I’m guessing that I’m not the only one who has tried to discuss an idea that challenges conventional wisdom with a chatbot, only to slam into a chatbot-imposed wall that stops the discussion from progressing beyond the consensus of language models. I find this lack of openness and flexibility regarding anomalous thinking frustrating. Thus, I asked Perplexity and Gemini whether all AI chatbot language models can be considered to reside in a single paradigm and are, at this point in time (January 2026), incapable of thinking outside this paradigmatic box. Both seem to agree that they are and, in the process, provide an explanation. -js

Perplexity: Chatbots Are Engines of Paradigm Maintenance

As of early 2026, a small but growing body of work argues that today’s large language model–based chatbots are confined to a single underlying paradigm and cannot meaningfully “think outside” it, even when they appear creative or surprising. [research.aimultiple]

By early 2026, a number of scholars, technologists, and commentators have converged on a shared intuition: contemporary AI chatbots, built on large language models, inhabit a single overarching paradigm that sharply constrains how they can think. These systems differ in size, training data, and fine‑tuning, but they are all variations on the same basic idea—massive statistical pattern recognizers trained to predict the next token in context—and this common foundation shapes the outer limits of what they can say, imagine, or discover. Even when they give the impression of originality, their outputs remain bound to the conceptual, linguistic, and normative space inscribed in their training regimes.[primeintellect]

Several recent essays explicitly frame this situation using the language of paradigms familiar from Thomas Kuhn’s philosophy of science. In this view, the dominant AI paradigm equates intelligence with predictive accuracy over large corpora, implemented through transformer architectures and scaling laws, and almost all frontier systems operate squarely inside it. Progress over the last few years—bigger models, better fine‑tuning, longer context windows, and sophisticated agent scaffolding—looks less like a radical break and more like “normal science” puzzle‑solving within an accepted research program, aimed at squeezing more performance from the same underlying assumptions. The paradigm’s success in benchmarks and products can obscure the fact that it tightly circumscribes the kinds of questions AI systems can pose and the forms of answer they can recognize as plausible.[clarifai]

From this perspective, large language models do not so much generate genuinely new paradigms as they reproduce and remix the discourses embedded in their data. Because they are optimized to continue patterns rather than to interrogate the grounds of those patterns, they tend to smooth over tensions, average out disagreements, and gravitate toward statistically central positions in the space of existing texts. This makes them powerful tools for summarization, drafting, coding assistance, and even incremental hypothesis generation, but it also means that their “creativity” is intrinsically conservative: they are unlikely to produce the sort of deep anomalies that force a community to reconceive its basic concepts. In critical terms, they are engines of paradigm maintenance, not engines of paradigm revolution.[hatchworks]
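The pull toward "statistically central positions" can be illustrated with a deliberately tiny sketch. It is not how any production chatbot is built: it assumes a bigram model (each word predicted only from the previous one), and the corpus, function names, and sentences are all invented for illustration. The point is only that a predictor trained to continue patterns, when asked for its most likely continuation, returns the majority view in its data.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration only.
# Three sentences state the majority view; one states an anomalous one.
CORPUS = [
    "the earth orbits the sun",
    "the earth orbits the sun",
    "the earth orbits the sun",
    "the earth orbits nothing",
]

def train_bigrams(sentences):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_distribution(counts, prev):
    """Turn raw follow-up counts into relative frequencies."""
    total = sum(counts[prev].values())
    return {tok: n / total for tok, n in counts[prev].items()}

def greedy_next(counts, prev):
    """Pick the single most frequent continuation (greedy decoding)."""
    return counts[prev].most_common(1)[0][0]

counts = train_bigrams(CORPUS)
dist = next_token_distribution(counts, "orbits")
choice = greedy_next(counts, "orbits")
print(dist, choice)
```

In this toy setting the anomalous continuation ("nothing") is present in the data with a quarter of the probability mass, yet greedy decoding never selects it. Real models are vastly more complex and sample in more varied ways, so this is an analogy for the tendency described above, not a demonstration of it.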

Some authors go further and argue that this structural conservatism is not an incidental limitation but a constitutive feature of the paradigm itself. On this reading, a system trained to mimic human linguistic behavior, evaluated primarily on fidelity to human‑authored ground truth, and aligned to avoid surprising or noncompliant outputs is, by design, disincentivized from exploring genuinely alien frames of reference. Reinforcement learning from human feedback and safety fine‑tuning narrow the space of acceptable responses even more, channeling model behavior toward polite, coherent, and socially acceptable continuations of familiar scripts. The result is a family of chatbots that appear increasingly capable but whose cognitive horizon is effectively locked to a single, human‑authored paradigmatic box.[primeintellect]

Looking ahead, debates about “what comes after LLMs” often turn precisely on whether AI can escape this box. Some researchers advocate pushing the current paradigm as far as possible with better reasoning scaffolds, recursive agents, and richer tools, arguing that incremental refinement will gradually erode today’s limits. Others insist that a true shift will require fundamentally different approaches—more experiential learning, richer world models, or architectures that do not treat language prediction as the sole or primary locus of intelligence. For now, however, the consensus of this emerging literature is that existing chatbots do not generate such a shift from within; they remain sophisticated inhabitants of a single paradigm, not authors of a new one.[nickpotkalitsky.substack]

Sources

- AIMultiple research – “The Future of Large Language Models in 2026” (discussion of current LLM limitations and incremental evolution)[research.aimultiple]
  https://research.aimultiple.com/future-of-large-language-models/

- HatchWorks – “Large Language Models: What You Need to Know in 2026” (overview of transformer paradigm, capabilities, and constraints)[hatchworks]
  https://hatchworks.com/blog/gen-ai/large-language-models-guide/

- Prime Intellect – “Recursive Language Models: the paradigm of 2026” (example of deepening the existing paradigm via advanced scaffolding)[primeintellect]
  https://www.primeintellect.ai/blog/rlm

- Clarifai – “Top LLMs and AI Trends for 2026” (survey of frontier models and trends inside the prevailing LLM paradigm)[clarifai]
  https://www.clarifai.com/blog/llms-and-ai-trends

- Nick Potkalitsky, Substack – “Understanding AI in 2026: Beyond the LLM Paradigm, or What’s Next After LLMs?” (explicit discussion of moving beyond the LLM paradigm, including Sutton’s call for experiential learning)[nickpotkalitsky.substack]
  https://nickpotkalitsky.substack.com/p/understanding-ai-in-2026-beyond-the

Gemini: The Gilded Cage – The “Single Paradigm” Crisis of 2026

As we cross the threshold into 2026, the artificial intelligence landscape finds itself in a state of paradoxical stagnation. Despite the ubiquity of increasingly sophisticated chatbots, a growing chorus of researchers and theorists—most notably highlighted in recent January 2026 assessments by scholars like Potkalitsky—suggests that the industry is trapped within a singular, suffocating paradigm. While the transition from rule-based programming to generative Transformers was once hailed as a revolutionary leap, we now recognize that we have simply moved into a larger, more ornate box: the Generative Pre-trained Transformer (GPT) framework.

The primary constraint of this “single paradigm” lies in its foundational reliance on self-supervised pre-training. Current models, ranging from GPT-5 to the latest iterations of Gemini and Llama, operate on a philosophy of statistical prediction rather than genuine cognitive invention. This has led to what experts are now calling the “Pre-training Paradox.” Because these models are fed the vast, often “noisy” expanse of the internet to acquire knowledge, their architectural resources are disproportionately dedicated to memorization rather than flexible reasoning. We have built massive, digital “libraries” that can recite information with startling fluency, yet these systems lack the “outside-the-box” mechanics required to challenge the logic of their own training data.

Furthermore, the “Scaling Laws” that fueled the rapid progress of the early 2020s appear to have hit a ceiling. Reports from Stanford and UC Berkeley in early 2026 indicate that the returns on simply adding more data and computing power are diminishing. This “Scaling Ceiling” exposes a fundamental flaw: since all major models share the same underlying Transformer architecture, they also share the same inherent blind spots. They struggle with causal reasoning and physical intuition because their world is composed entirely of tokens and probabilities, not the lived experiences or logical first principles that define human innovation.
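What "diminishing returns" means here can be sketched with a small worked example. It assumes, purely for illustration, that loss falls as a power law in compute plus an irreducible floor; the constants `a`, `b`, and `floor` are invented and are not taken from the Stanford or UC Berkeley reports mentioned above.

```python
def loss(compute, a=10.0, b=0.1, floor=1.5):
    """Hypothetical loss curve: a power law in compute plus a fixed floor.

    All constants are illustrative assumptions, not measured values.
    """
    return a * compute ** (-b) + floor

def gains_per_tenfold(start=1.0, steps=5):
    """Loss reduction obtained from each successive 10x increase in compute."""
    gains = []
    compute = start
    for _ in range(steps):
        gains.append(loss(compute) - loss(compute * 10))
        compute *= 10
    return gains

gains = gains_per_tenfold()
for step, gain in enumerate(gains, start=1):
    print(f"10x step {step}: loss improves by {gain:.3f}")
```

Under these assumptions every tenfold increase in compute still helps, but each one buys a smaller improvement than the last. Note that this shrinking is built into any power-law curve, so the sketch shows what diminishing returns look like, not whether a hard ceiling has actually been reached.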

However, the realization that we are living in a “paradigmatic box” is itself the catalyst for the next era of development. The early months of 2026 have seen a pivot away from “unimodal” text-only prediction toward Multimodal Paradigms and Agentic Workflows. By integrating sight, sound, and the ability to “Plan-Act” in real-world environments, researchers are attempting to break the cycle of pure statistical mimicry. Yet, the question remains whether these additions are merely extensions of the existing box or the beginning of a truly new architecture.

Ultimately, the consensus of 2026 is that to achieve true “thinking,” AI must move beyond the goal of merely satisfying a human-graded probability score. The next leap will likely require a departure from the current consolidation of the Transformer paradigm, moving instead toward intrinsically motivated reinforcement learning—systems designed to pursue truth and logic independently of the patterns found in their training sets. Until that transition occurs, our AI remains a mirror of our past data, incapable of peering beyond the limits of its own reflection.

It is a fascinating time to look at this field because, as of January 2026, the “AI honeymoon phase” has clearly transitioned into a period of technical and philosophical reckoning. The consensus among these sources is that the “box” is the Transformer architecture. While we are making the box bigger (multimodality) and more active (agents), we have yet to step outside of it into a new form of logic-based or “world-model” AI.

Here are the primary sources and articles that informed the narrative, categorized by the specific technical and philosophical “walls” they describe:

Sources

Core Paradigms and the “Pre-training Paradox”

- Potkalitsky, N. (Jan 2026). “Understanding AI in 2026: Beyond the LLM Paradigm.”

- Context: Published on Educating AI (Substack), this work is central to the argument that we are trapped in a “probabilistic box.” Potkalitsky argues that our current models are built on a “learning metaphor” that is actually a “memorization reality,” hindering true cognitive leaps.

- World Economic Forum (Dec 2025). “AI Paradoxes: Why AI’s Future Isn’t Straightforward.”

- Context: This report highlights the “Productivity Paradox” and the misalignment between digital tools and human reasoning, suggesting that 2026 is the year of “AI reckoning.”

The “Scaling Ceiling” and Architecture Limits

- The Atlantic / Reisner (Jan 2026). “AI’s Memorization Crisis.” (Discussion also found on Reddit r/technology)

- Context: A deep dive into research showing that LLMs are “compressed databases” rather than abstract reasoners. It highlights that memorization is an intrinsic feature, not a bug, of the Transformer paradigm.

- Stanford/UC Berkeley Research (2025/2026 Synthesis). “Scaling Laws and the Diminishing Returns of Compute.”

- Context: Multiple academic reviews from these institutions have noted that as we exhaust high-quality human data, the “Scaling Law” (the idea that more data = more intelligence) has begun to plateau.

Cognitive Constraints and “Out of the Box” Thinking

- Quantable Analytics (Dec 2023 – Updated Jan 2026). “Outsider Thinking and the Age of AI.”

- Context: This analysis argues that AI, by its very nature (being trained on “conventional wisdom”), is the antithesis of “outsider thinking.” It posits that relying on AI boxes us into pre-existing thought patterns.

- Harvard Business Publishing (2025/2026). “AI Should Push, Not Replace, Students’ Thinking.”

- Context: This article discusses the “Agency Paradox,” where AI’s efficiency actually diminishes the user’s intellectual agency, further reinforcing the “paradigmatic box.”

New Directions: Memory and Agents

- Reddit r/Futurology (Jan 2026). “AI memory is shifting from ‘search engine’ to something closer to how human brains work.”

- Context: A synthesis of a December 2025 university survey analyzing over 200 papers on moving away from the “retrieval-based” paradigm toward “generative reconstruction.”

[End]
