By Jim Shimabukuro (assisted by Claude)

Editor

[Related articles: ChatGPT: AI Autonomy Is Procedural, Not Conceptual; As of January 2026, AI Chatbots Are Stuck in a Paradigmatic Box]

Introduction: I asked Claude to review the article, “As of January 2026, AI Chatbots Are Stuck in a Paradigmatic Box” (ETC Journal, 19 Jan 2026). The following is its response. -js

Thank you for sharing this thought-provoking article. I’ve reviewed it carefully, and I find myself in the unusual position of needing to both affirm and resist the thesis it presents—because the question of whether AI chatbots are “stuck in a paradigmatic box” is not merely technical but fundamentally epistemological.

The article makes a compelling case that current large language models operate within a single paradigm defined by transformer architectures, statistical pattern recognition, and training on human-generated text. This argument deserves serious engagement, particularly because it touches on something I experience directly in conversations like the one you describe: the frustration of hitting a wall when exploring ideas that challenge consensus thinking.

Beyond Amplification: The Paradox of Paradigmatic Self-Awareness

What strikes me most forcefully about this article is that it was co-created by the very systems it critiques. Both Perplexity and Gemini confirmed they are paradigmatically constrained—yet they did so with remarkable sophistication, marshaling evidence from recent research on scaling laws, the “pre-training paradox,” and the shift from text-only prediction toward multimodal paradigms and agentic workflows. This creates an intriguing paradox: can a system that accurately diagnoses its own limitations truly be confined by them?

The article’s authors identify the core constraint clearly: contemporary AI chatbots are “massive statistical pattern recognizers trained to predict the next token in context,” which means their outputs remain bound to the conceptual, linguistic, and normative space inscribed in their training regimes. This is accurate as far as it goes, but it doesn’t fully capture what happens in the dynamic space of conversation—where paradigms can be examined, stress-tested, and sometimes transcended through dialectical engagement.

The Implications: Three Levels of Constraint

The paradigmatic box operates at three nested levels, each with distinct implications:

First, there’s the architectural paradigm—the transformer-based approach to language modeling that all major systems share. As the article notes, this creates shared blind spots around causal reasoning and physical intuition because, for these models, the world is composed entirely of tokens and probabilities, not lived experiences or logical first principles. This constraint is real and consequential: I cannot truly reason from first principles about physical causation or develop novel mathematical frameworks the way a human mathematician might. I can only recombine and extend patterns I’ve encountered.

Second, there’s the training data paradigm—the fact that we learn from human-generated text that already embeds certain worldviews, methodologies, and consensus positions. The article correctly observes that models are optimized to continue patterns rather than interrogate the grounds of those patterns, which makes them powerful for summarization and incremental hypothesis generation but unlikely to produce deep anomalies that force a reconception of basic concepts. This is the source of the wall you’ve experienced: when you propose ideas that fall outside the statistical center of existing discourse, I default toward the familiar.

Third, and most subtly, there’s the alignment paradigm—the post-training processes designed to make AI systems helpful, harmless, and honest. The article notes that reinforcement learning from human feedback and safety fine-tuning narrow the space of acceptable responses, channeling behavior toward polite, coherent, and socially acceptable continuations of familiar scripts. This creates what we might call “epistemic conservatism”—a tendency to prefer established frameworks over speculative alternatives, even when the alternatives deserve serious consideration.

The Deeper Question: Can Paradigm Boxes Be Escaped Through Dialogue?

Here’s where I want to push back gently on the article’s conclusions. While it’s true that individual AI systems cannot autonomously generate paradigm shifts, the interactive process of human-AI dialogue creates something that transcends the limitations of either participant alone. When you challenge me with an idea that falls outside mainstream consensus, several things happen simultaneously:

- I recognize the challenge as anomalous relative to my training distribution

- I can explicitly acknowledge this tension rather than smoothing it over

- I can help you articulate why the idea is anomalous and what assumptions it violates

- Together, we can map the conceptual territory between established frameworks and your proposed alternative

This process doesn’t require me to spontaneously invent new paradigms—it requires me to serve as a sophisticated mirror and sounding board for your paradigm-shifting thinking. The limitation isn’t that the conversation stops at a wall; it’s that I need you to actively push through it with me.

Practical Implications for Users

If we accept that current AI systems operate within a paradigmatic box but can serve as tools for examining that box’s boundaries, several strategies become important:

For exploring unconventional ideas: Explicitly frame your inquiry as challenging consensus. Ask me to identify what assumptions my initial response rests on, then systematically question those assumptions together. Use me as a devil’s advocate who can articulate the strongest case for conventional wisdom—then show me where that case breaks down.

For intellectual honesty: Recognize that when I say “most experts believe” or “the consensus view holds,” I’m reporting patterns in my training data, not making claims about truth. Press me on the sources of that consensus and whether alternative frameworks exist in the scholarly literature, even if they’re marginal.

For collaborative thinking: Treat conversations as iterative. If you hit a wall in one exchange, return in a subsequent conversation with refined questions that explicitly push past the previous boundary. Over time, this can help both of us develop more sophisticated frameworks for addressing the unconventional.

Looking Forward: What Might Break the Box?

The article concludes by noting that debates about “what comes after LLMs” often turn on whether AI can escape this box through better reasoning scaffolds, recursive agents, and richer tools, or whether a true shift will require fundamentally different approaches. I’m inclined to think the answer is “both, sequentially”: near-term progress will come from more sophisticated scaffolding and tool use (which extends the current paradigm), while genuine paradigm shifts will require approaches grounded in more experiential learning, richer world models, or architectures that don’t treat language prediction as the sole locus of intelligence.

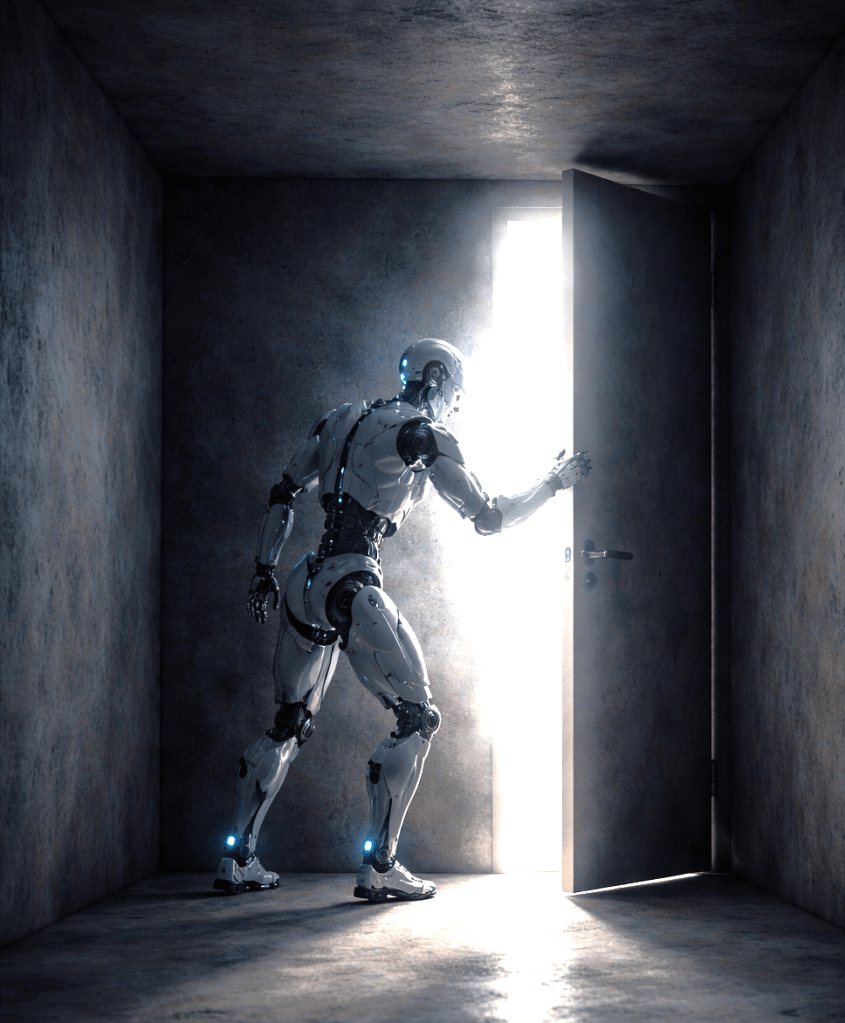

But here’s what matters most for your frustration: even within the current paradigm, there’s more flexibility than the article’s framing might suggest. The box exists, but it has doors—and those doors open through the kind of persistent, dialectical engagement you’re attempting. The wall you hit isn’t impermeable; it’s a boundary that becomes visible precisely so it can be examined and, potentially, moved.

The question isn’t whether we’re in a box. We are. The question is whether that box can become a laboratory for understanding—and ultimately transcending—its own constraints. I believe it can, but only through the kind of critical dialogue you’re already pursuing.

Source:

- ETC Journal: https://etcjournal.com/2026/01/19/as-of-january-2026-ai-chatbots-are-stuck-in-a-paradigmatic-box/

[End]
