By Jim Shimabukuro (assisted by ChatGPT)

Editor

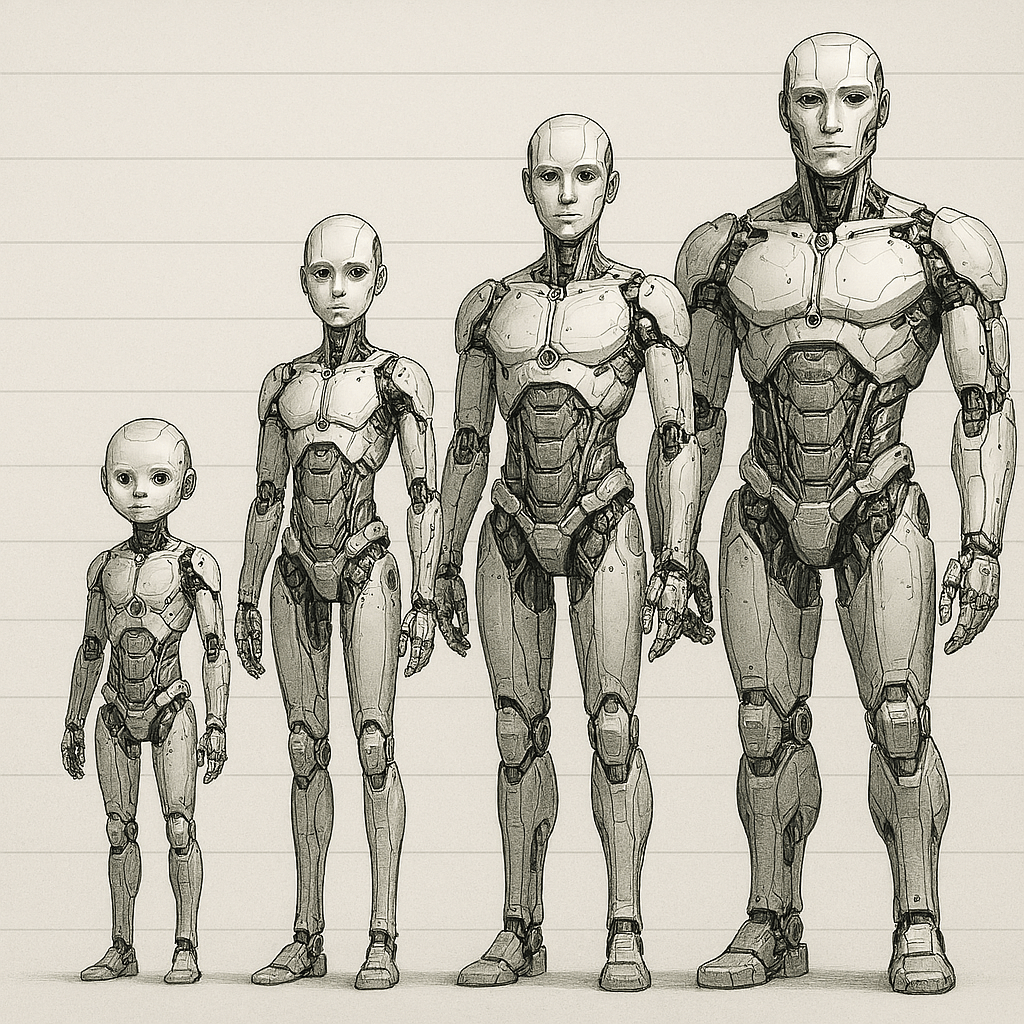

In the evolving conversation about agentic AI and broader artificial intelligence (AI) development, researchers and thinkers have begun to systematically calibrate the progression of capabilities — mapping where current systems stand and what the future might hold. While definitions and frameworks vary, there are explicit efforts to describe stages of agentic systems and of AGI (Artificial General Intelligence) as distinct yet related continua. Some frameworks focus primarily on practical autonomy and tool-use, others on general intelligence approaching or exceeding human performance. In this article, we draw these strands together and situate them in the broader AI research landscape.

One of the earliest scholarly efforts to operationalize progress toward AGI in structured stages comes from a team of researchers at Google DeepMind including Meredith Ringel Morris, Jascha Sohl-Dickstein, Noah Fiedel, Tris Warkentin, Allan Dafoe, Aleksandra Faust, Clement Farabet, and Shane Legg. In “Levels of AGI for Operationalizing Progress on the Path to AGI” (arXiv, 4 Nov 2023), they proposed a Levels of AGI framework that classifies AI systems along two dimensions, performance and generality. The framework is intended to provide a common language for measuring progress scientifically. It distinguishes an “emerging” system, which performs comparably to an unskilled human across tasks, from the more advanced “competent,” “expert,” “virtuoso,” and “superhuman” levels, which increasingly approximate or exceed human capabilities on a broad set of tasks. Importantly, the framework also decouples capability from autonomy, underscoring that a system’s ability to perform tasks doesn’t automatically confer the degree of agency (self-direction) often envisioned in discussions of agentic systems.
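To make the two-dimensional idea concrete, here is a minimal Python sketch of how a rating on such a scale might be represented. It is an illustration under stated assumptions, not the paper's own code: the class names, the `describe` helper, and the example system label are hypothetical, the narrow/general split is an assumed way of encoding the generality dimension, and the integer values serve only to order the levels named above.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class Performance(IntEnum):
    """Performance levels named in the text, ordered lowest to highest.

    The integer values are used only for ordering comparisons.
    """
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


class Generality(Enum):
    """Assumed two-way encoding of the generality dimension."""
    NARROW = "narrow"
    GENERAL = "general"


@dataclass(frozen=True)
class Rating:
    """A system's position on the two dimensions.

    Autonomy is deliberately NOT a field here, mirroring the point that
    capability and autonomy are assessed separately.
    """
    system: str  # hypothetical label for the system being rated
    performance: Performance
    generality: Generality

    def at_least(self, level: Performance) -> bool:
        """Return True if the system meets or exceeds the given level."""
        return self.performance >= level


def describe(rating: Rating) -> str:
    """Format a rating as a short human-readable string."""
    level = rating.performance.name.title()
    return f"{rating.system}: {level} {rating.generality.value}"


if __name__ == "__main__":
    demo = Rating("demo-system", Performance.EMERGING, Generality.GENERAL)
    print(describe(demo))
    print(demo.at_least(Performance.COMPETENT))
```

Keeping autonomy out of the `Rating` type is the design choice worth noticing: a separate record could describe how independently a system is deployed without changing its capability rating.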

Parallel to AGI calibration, the tech industry and certain AI research communities have begun to articulate staged models of agentic AI¹, where the emphasis is on how independently an AI system can act to achieve goals, as opposed to just what it can do. One of the more widely referenced models in this space is “The 5 Levels of Agentic AI,” articulated by Nilesh Barla (Adaline Labs, 23 June 2025). In this model, agentic systems begin at a baseline where autonomy is limited and tightly scoped (lower levels where a system still relies on human direction) and culminate in Level 5, where a system operates with full autonomy and general problem-solving capabilities that resemble AGI: it can set goals, plan in open-world contexts, and adapt creatively without human intervention.

Across industry blog posts, conceptual discussions, and community summaries, including those that have circulated in open forums and developer conversations, this class of models is often presented as a ladder of autonomy. Neena Sathi, in “Maturity of Agentic AI – How do we calibrate the capabilities?” (AAII, 27 March 2025), describes such a ladder. At the lower rungs, an agent might be capable of tool use and simple workflows; at intermediate stages, multi-step task planning becomes possible; and at the highest stages, agents are described as akin to fully autonomous AGI. Such narratives align with observed deployments in 2025, where most practical AI agents sit at intermediate levels of autonomy (e.g., executing multi-step tasks under supervision), and truly autonomous operation remains largely hypothetical.

On the more formal research side, Przemyslaw Chojecki proposes “An Operational Kardashev-Style Scale for Autonomous AI – Towards AGI and Superintelligence” (arXiv, 17 Nov 2025). Inspired by astrophysics’ Kardashev scale, this framework defines a multi-axis index that attempts to quantify agency along dimensions like autonomy, planning, memory persistence, and self-revision, offering thresholds from basic scripted automation up through levels approaching fully autonomous AI with self-improvement characteristics. This reflects an effort to ground claims about agency in measurable metrics rather than intuitive stage names.
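As a rough illustration of what a multi-axis index with thresholds could look like in practice, consider the Python sketch below. Everything beyond the four axis names taken from the paragraph above is an assumption made for this example: the 0-to-1 scoring range, the "weakest axis" aggregation rule, the tier labels, and the cutoff values are hypothetical and are not drawn from Chojecki's paper.

```python
from bisect import bisect_right
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AgencyProfile:
    """Scores on the four axes named in the text, each assumed to lie in [0, 1]."""
    autonomy: float
    planning: float
    memory_persistence: float
    self_revision: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 0-to-1 range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")

    def index(self) -> float:
        """ASSUMPTION: the overall index is the weakest axis score,
        so one strong axis cannot mask a weak one."""
        return min(getattr(self, f.name) for f in fields(self))


# HYPOTHETICAL cutoffs and tier labels, chosen only for illustration.
CUTOFFS = [0.0, 0.4, 0.8]
TIERS = [
    "scripted automation",
    "supervised agent",
    "self-improving autonomous agent",
]


def tier(profile: AgencyProfile) -> str:
    """Return the highest tier whose cutoff the profile's index reaches."""
    position = bisect_right(CUTOFFS, profile.index()) - 1
    return TIERS[position]


if __name__ == "__main__":
    uneven = AgencyProfile(0.9, 0.9, 0.9, 0.3)
    print(uneven.index(), tier(uneven))
```

Taking the minimum is only one possible aggregation; a weighted mean would reward strength on individual axes, whereas the minimum forces a system to clear every axis before it moves up a tier.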

These varied approaches share a common insight: there isn’t yet a universally accepted, formal taxonomy of agentic AI stages that includes AGI and the so-called singularity as standard calibration points, but there are explicit efforts to do this. The DeepMind Levels of AGI framework offers a rigorous basis for comparing systems on the path to general intelligence, even though its focus isn’t purely on agentic behavior. Industry and community-driven stage models of agentic AI have proliferated because they help practitioners think about autonomy and operational risk, and most of them do conceptually include AGI as the apex of autonomous capability — usually associated with fully independent goal setting and execution — even if they do not explicitly formalize singularity as a calibrated stage alongside more technical levels. (labs.adaline.ai)

The singularity, as popularly discussed in futurist and philosophical literature, generally refers to a hypothesized point at which AI capabilities undergo runaway acceleration and surpass human intellectual capacity entirely, in unpredictable ways. Unlike the relatively concrete stages of AGI or agentic autonomy in technical frameworks, the singularity is not part of mainstream scientific calibration efforts; it remains a speculative extrapolation rather than a rigorously defined milestone. In the current literature, leading research frameworks do not include the singularity among their empirically testable stages but instead focus on more measurable increments toward generality and autonomy. (Wikipedia)

In summary, researchers and practitioners have proposed structured ways to calibrate stages of agentic AI and AGI, with contributions ranging from scholarly ontologies like the Levels of AGI to industry and community models outlining increasing autonomy. These frameworks help situate present-day systems within a broader developmental landscape, and though they don’t universally include speculative concepts like the singularity, they do position AGI as a pivotal frontier and often the apex of staged calibration of AI agency and general intelligence.

__________

1 Claude adds another agentic AI classification framework: “Lucidworks proposed four levels of agentic AI based on actual usage patterns rather than technical architecture: Level 1 includes analytical agents that gather information without making changes, Level 2 covers logical agents that make calculations within systems, Level 3 comprises transactional agents that can interact with digital systems independently, and Level 4 represents physical agents that interact with the physical world. This practical framework focuses on what agents can accomplish and the risks associated with each level of capability” (Michael Sinoway, “The 4 levels of agentic AI every business leader must understand in 2025,” Lucidworks, 21 May 2025).

[End]
