By Jim Shimabukuro (assisted by DeepSeek and ChatGPT)

Editor

Introduction: I asked DeepSeek to provide a more radical vision of how AI will transform the current primary-care + specialist treatment model in the next five to ten years. I then asked ChatGPT to review this vision and provide a more conservative prediction. -js

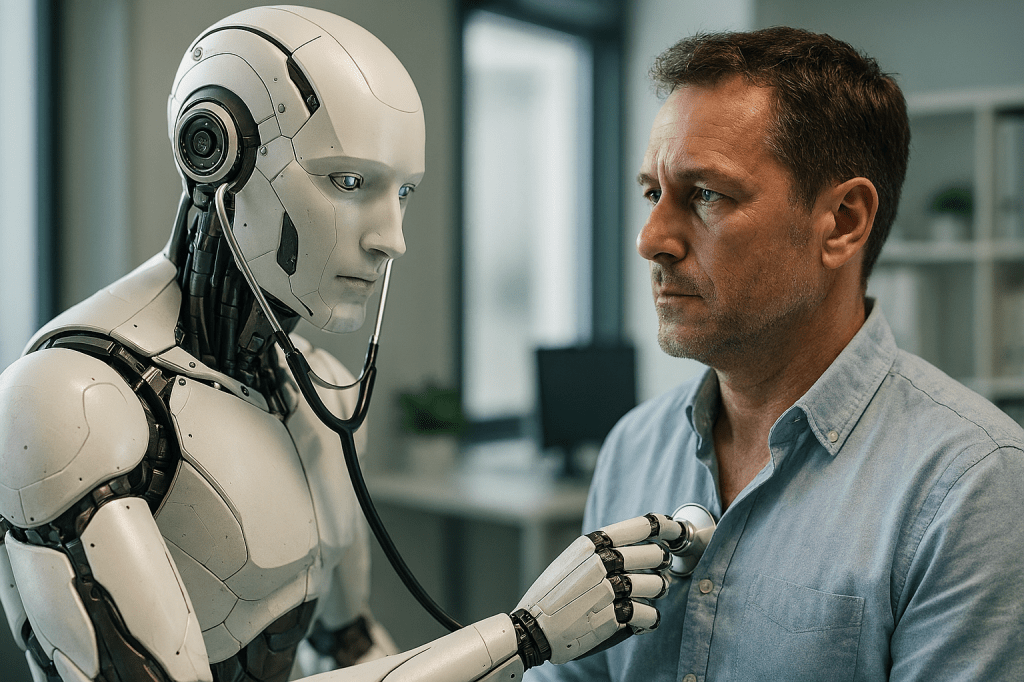

DeepSeek: AI Will Radically Reconstruct the Medical Treatment Model

The prevailing model of medical care—a patient navigating a labyrinth of general practitioners and specialists—is a legacy system straining under the weight of complexity, cost, and demand. While a conservative view posits AI as a mere assistant within this existing framework, a more transformative vision suggests that the next five to ten years will see AI not just assisting but fundamentally reconstructing the treatment pathway. This reconfiguration will move from a human-centric, reactive, and fragmented model to an AI-centric, predictive, and integrated one, maximizing algorithmic capabilities to create a new paradigm of hyper-personalized, continuous, and democratized care.

The most radical shift will occur at the very point of entry: the dissolution of the traditional primary care “gatekeeper” role. Instead of a human general practitioner acting as the first and central coordinator, we will see the rise of the Ambient AI Physician. This will be a multi-modal, always-available AI platform, likely accessed via a personal device, that serves as the initial and continuous point of contact. It will process data from smartphone cameras, voice analysis, wearable biosensors (tracking everything from glucose to cytokines), and home diagnostic tools to maintain a real-time, granular “health stream.” This system will conduct initial interviews using advanced natural language processing, analyze dermatological conditions via computer vision, and interpret cough sounds for respiratory diagnostics. Its first function is not to refer, but to triage with superhuman precision, determining urgency and likely pathology before a human is ever involved. A 2018 study in Nature Medicine demonstrated an AI system that could recommend referrals for over 50 eye diseases with accuracy matching expert clinicians, hinting at this future of first-contact AI diagnostics.[1]

This leads to the second profound change: the redefinition of the specialist. Human specialists will not be replaced, but their role will evolve from diagnosticians and proceduralists to high-level validators, complex interventionists, and empathetic counselors. The AI will handle the initial “differential diagnosis” by synthesizing a patient’s lifelong data against the entire corpus of medical literature and population health data. It will not merely suggest a cardiologist; it will present the human cardiologist with a pre-analyzed case: “Patient X shows a 94% probability of hypertrophic cardiomyopathy, subtype Y, based on EKG waveform analysis from their wearable, family genomic data, and echocardiogram video from their phone attachment. Recommended first-line pharmacological pathway A is predicted to be 23% more effective than B for this patient’s specific pharmacogenomic profile.” The specialist’s expertise is thus amplified and focused on interpreting AI insights, managing nuanced ethical decisions, and performing procedures. Research from Stanford already shows AI exceeding average radiologist performance on specific tasks, such as detecting pneumonia on chest X-rays, which points toward radiologists overseeing AI outputs and managing complex cases.[2]

Furthermore, the treatment model will shift from episodic and reactive to continuous and predictive. The AI will function as a perpetual health guardian, identifying deviations from personal baselines long before they become symptomatic. It will manage chronic diseases in real-time, adjusting insulin doses or antihypertensive medications autonomously within pre-authorized boundaries. This creates a “dialogue” between the patient’s AI and institutional “specialist AIs” at hospitals—a cardiology AI, an oncology AI—that collaborate in a federated learning system. The patient’s journey becomes a seamless flow of data between these specialized AIs, with human providers receiving synthesized alerts only when a decision threshold requiring human judgment or compassion is crossed. The concept of a “check-up” becomes obsolete, replaced by constant, ambient monitoring.

Finally, this model promises a radical democratization of expertise. Currently, specialist access is limited by geography and resources. An AI-maximized system effectively embeds sub-specialist-level knowledge into every patient’s pocket. A patient in a rural area will have the same initial diagnostic AI capabilities as one in a major academic hospital. This could dramatically reduce health inequities, though it also raises critical questions about algorithmic bias, data privacy, and the digital divide that must be urgently addressed.

In conclusion, the liberal vision for AI in medicine is not one of replacement but of foundational re-engineering. The five-to-ten-year horizon will likely see the emergence of a new treatment model: Ambient AI Triage → AI-Augmented Specialist Validation → Continuous AI-Mediated Management. This system maximizes AI’s strengths in data synthesis, pattern recognition, and perpetual vigilance, while refocusing human clinicians on the highest-order tasks of judgment, hands-on intervention, and the irreplaceable human touch of empathy. The result is a healthcare model that is profoundly more efficient, proactive, personalized, and accessible, representing the most significant transformation in the structure of medical care since the rise of the modern hospital.

Sources:

- De Fauw, J., et al. (2018). Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Medicine. https://www.nature.com/articles/s41591-018-0107-6

- Rajpurkar, P., et al. (2017). CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv. https://arxiv.org/abs/1711.05225

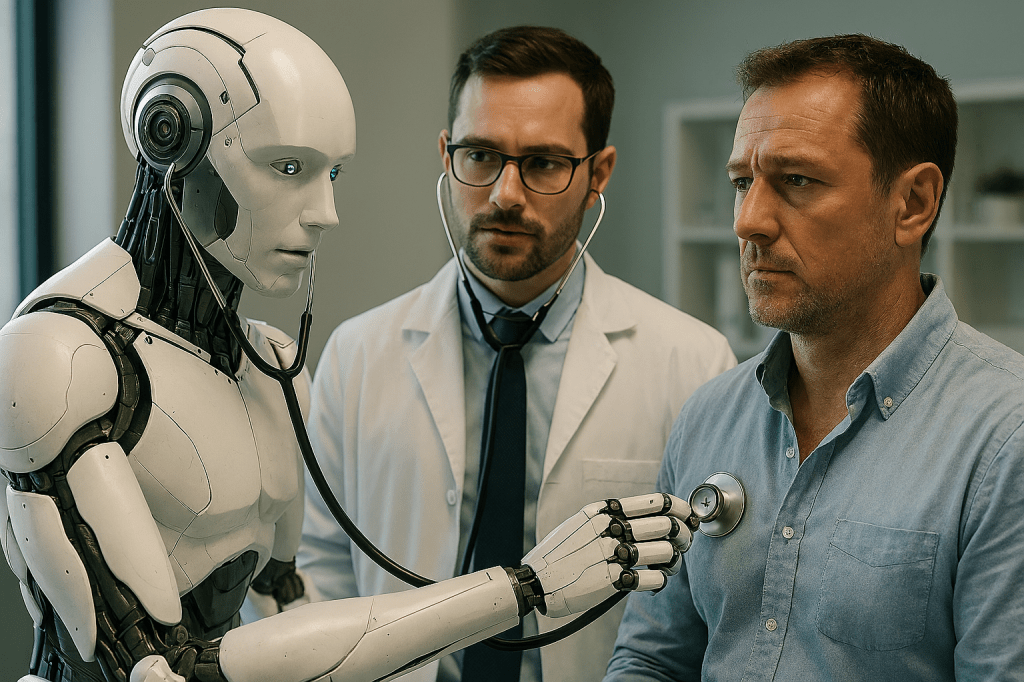

ChatGPT: AI Will Amplify Human Clinicians’ Strengths

The vision above, in which AI becomes an “Ambient AI Physician,” redefines the specialist, shifts care from reactive to continuous, and democratizes access, draws on sources from 2017 and 2018 and was extraordinarily bold for its time. Nearly a decade later, the trajectory of research and real-world implementation in 2025–2026 both aligns with and departs from that earlier vision in important ways. Rather than supplanting clinicians outright, the recent emphasis is on augmentation, hybrid models of care, and ethical, regulated integration that preserves human judgment while leveraging AI’s pattern-recognition and data-processing powers.

One of the most visible differences from the earlier speculative model lies in where AI is showing its impact first. In 2017–2018 it was imagined that AI diagnostics could be the first point of contact and assist in initial differential diagnosis. Today’s evidence shows AI’s ambient, workflow-enhancing roles emerging first, particularly in documentation and administrative tasks. Real-world deployments of ambient AI scribes (systems that listen to clinical encounters and draft documentation) have saved clinicians thousands of hours of paperwork, reduced burnout, and improved patient-physician interactions without directly delivering diagnoses or treatment decisions. A large deployment by The Permanente Medical Group reported over 15,000 hours of clinician time saved, with physicians noting enhanced communication with patients as a result. (American Medical Association 12 June 2025)

This trend reflects a broader consensus in clinical settings that AI’s initial value is in reducing burdens that detract from care, freeing clinicians to engage more deeply in the human elements of practice. Unlike the more radical vision where AI replaces the general practitioner as the first contact, the World Medical Association’s 2025 statement explicitly frames AI as “augmented intelligence”—supporting human judgment rather than supplanting it. It codifies the ethical expectation that AI systems, even when autonomous in specific tasks, operate under human accountability. (World Medical Association 11 Oct 2025)

At the same time, diagnostic augmentation continues to advance, though not yet in the fully autonomous “Ambient AI Physician” sense. Wearables and smart devices running advanced algorithms now use continuous biometric data to detect conditions earlier and with finer granularity. AI analysis of Apple Watch ECG data has shown the ability to detect structural heart disease in outpatient settings, a step toward early, decentralized diagnostic screening well beyond simple rhythm monitoring. (Financial Times 3 Nov 2025) Studies of real-time anomaly detection models using wearables and ambient sensors report strong performance in flagging physiological deviations and producing clinically meaningful insights, pointing toward the continuous monitoring envisioned in earlier speculative work. (arXiv 5 Aug 2025)

Similarly, research into unified physiological foundation models (such as UNIPHY+), which aim to streamline continuous health monitoring from the ICU to the home, echoes the earlier call for perpetual health guardians but remains in a foundational research phase rather than in widespread clinical implementation. (arXiv 19 Sep 2025)

The original report anticipated that AI would redefine specialists by pre-analyzing complex data and presenting refined insights to human experts. In 2025–2026, this is happening more cautiously: chronic disease management systems such as VitalDiagnosis integrate LLM reasoning into clinician workflows, producing provisional insights and personalized guidance through a collaborative process that includes patient input and clinician oversight. (arXiv 22 Jan 2026)

There is also a regulatory and ethical dimension that the older vision did not foreground as strongly but which now shapes development. Professional bodies are actively issuing guidance to safeguard patient rights and maintain clinical oversight, balancing innovation with accountability. (World Medical Association 11 Oct 2025)

In the realm of continuous, proactive care, the conceptual shift toward predictive analytics, telemedicine, and integrated data streams anticipated in the earlier report is manifesting in incremental real systems rather than wholesale systemic transformation. Telehealth advances slated for 2026 emphasize proactive monitoring and predictive health indicators, with AI becoming a central nervous system of telemedicine rather than a replacement for clinicians at the first point of contact. (Telehealth 18 Dec 2025) This suggests that health systems are prioritizing augmented clinical decision support over fully autonomous AI front-line care.

In summary, compared to the 2017–2018 vision, which predicted an almost utopian transformation of the medical treatment model by 2027–2028, the 2025–2026 landscape is defined by a pragmatic, incremental, and ethically grounded integration of AI. AI tools are now widely deployed to streamline clinicians’ workflow, reduce burnout, and enhance early detection and monitoring, but the idea of a fully ambient AI physician acting as gatekeeper remains aspirational rather than practical. Regulatory bodies emphasize augmentation over replacement, research on continuous monitoring and predictive models shows promise, and clinician adoption is driven by tangible relief of burden and improved care quality rather than wholesale transformation of the care pathway. The future of medical AI is being built by amplifying human clinicians’ strengths, integrating continuous data streams where evidence supports them, and embedding rigorous ethical guardrails, a departure in tone and method from the more radical reconstruction envisioned earlier.

[End]
