By Jim Shimabukuro (assisted by Gemini)

Editor

Introduction: On this quiet Sunday afternoon in humid Honolulu, I had another wide-ranging conversation with Gemini. I began as usual with a thought, this time about Moore’s Law and the progress of chip technology, and the conversation gradually flowed into other streams while maintaining the same general course. Come along for the ride. I had fun, and you might, too. -js

JS: Does Moore’s Law re the doubling of the number of components on a single silicon chip every two years account for the exponential growth of the speed of change? And does this growth impact its two-year time frame and doubling rate? Is Moore’s Law still viable today?

Gemini: You’re touching on some of the most fundamental questions about technological progress and its limits! Let’s break down Moore’s Law and its relationship to the “speed of change” and its current viability.

Does Moore’s Law Account for the Exponential Growth of the Speed of Change?

Yes, Moore’s Law is widely considered the primary driver and, in many ways, the foundational explanation for the exponential growth of the “speed of change” in the digital realm over the past five decades.

Here’s why:

- The Core Mechanism: Moore’s Law states that the number of transistors on an integrated circuit (microchip) doubles approximately every two years. More transistors on a chip mean:

- More Processing Power: More computational units to perform operations.

- Increased Speed: Smaller transistors mean shorter distances for electrons to travel, leading to faster clock speeds (though this particular gain has slowed, as discussed below).

- Reduced Cost Per Transistor: As more transistors are packed into the same area, the cost per individual transistor decreases dramatically.

- Reduced Power Consumption (historically): For a long time, smaller transistors also meant lower power consumption per transistor (a trend known as Dennard scaling), allowing for more powerful chips without excessive heat.

- Compounding Effect: This doubling, every two years, is exponential growth. It means that the capabilities of computing devices don’t just increase linearly; they multiply. This exponential increase in raw computing power has, in turn, fueled rapid advancements across virtually every sector that relies on computing:

- Software Development: More powerful hardware allows for more complex and sophisticated software.

- Data Processing: The ability to process ever-larger datasets at higher speeds enabled breakthroughs in fields like big data analytics and, more recently, artificial intelligence.

- Connectivity: Enabled the miniaturization and power efficiency needed for ubiquitous mobile devices and the internet of things.

- Scientific Research: Accelerated simulations, data analysis, and modeling in fields from medicine to climate science.

- Self-Fulfilling Prophecy: Moore’s Law also became a self-fulfilling prophecy for the semiconductor industry. Chip manufacturers like Intel, TSMC, and Samsung used it as a target and a benchmark. Knowing that competitors were striving for this doubling, they invested heavily in R&D to meet or exceed the pace, thus continuously pushing the boundaries of what was technologically possible.
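The compounding effect described above can be made concrete with a few lines of arithmetic. The following is a minimal sketch, assuming an idealized, perfectly steady two-year doubling (real chip history is lumpier than this); the function name `growth_factor` is illustrative only.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Multiplicative growth after `years` when a quantity doubles
    once every `doubling_period` years (idealized assumption)."""
    return 2.0 ** (years / doubling_period)


# Growth over successive decades under a clean two-year doubling.
for years in (10, 20, 30, 40, 50):
    print(f"{years:>2} years: x{growth_factor(years):,.0f}")
```

Under this assumption, each decade multiplies capability by 32, so five decades compound to a factor of about 33.5 million. That is the difference between linear and exponential change.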

Does This Growth Impact its Two-Year Time Frame and Doubling Rate?

Yes, the very success of Moore’s Law, along with fundamental physical and economic realities, has impacted its original two-year time frame and the nature of the “doubling” today.

- The “Two Years” is More Like 2.5-4 Years for Transistor Density: While the original observation was about doubling every year (in 1965) and then revised to every two years (in 1975), in recent years, the actual doubling of transistor density has slowed. Many analysts and industry figures now suggest it’s closer to 2.5, 3, or even 4 years for traditional transistor scaling.

- Physical Limits: As transistors approach atomic scale (e.g., 2nm or 3nm nodes currently), quantum effects (like electron leakage) become significant challenges. It’s getting increasingly difficult to make transistors smaller without facing fundamental physics barriers or immense heat generation.

- Economic Limits: The cost of developing and manufacturing at these advanced nodes (e.g., requiring Extreme Ultraviolet Lithography, or EUV, machines that cost well over $100 million each) has skyrocketed. The cost per transistor is no longer decreasing as reliably as it once did for the very bleeding-edge nodes. This makes it economically unfeasible for some applications to always use the absolute latest technology.

- “More than Moore” and “Beyond Moore”: To sustain performance gains, the industry has shifted beyond simply shrinking transistors:

- 3D Stacking (Chiplets/Advanced Packaging): Instead of one monolithic chip, multiple smaller “chiplets” are packaged together, side by side or stacked in 3D, creating denser, more powerful systems. This allows more components without shrinking individual transistors as much.

- Specialized Architectures (Domain-Specific Accelerators): Instead of general-purpose CPUs, we see exponential gains in specialized hardware like GPUs (for AI/graphics), TPUs (Tensor Processing Units for AI), and custom ASICs. These achieve performance leaps for specific tasks even if individual transistor scaling slows.

- New Materials: Research into materials beyond silicon (like graphene, carbon nanotubes, III-V semiconductors) aims to overcome some physical limits.

- Software Optimization: Improving software efficiency and algorithms can also yield significant performance gains, even on existing hardware.
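To see what a slower doubling period means in practice, it helps to convert each period into an equivalent yearly growth rate and a per-decade gain. This is a rough sketch using the periods mentioned above (2, 2.5, 3, and 4 years); the function names are made up for illustration, and steady compounding is an assumption.

```python
def annual_growth_rate(doubling_period_years: float) -> float:
    """Equivalent compound annual growth rate for a given doubling period."""
    return 2.0 ** (1.0 / doubling_period_years) - 1.0


def decade_gain(doubling_period_years: float) -> float:
    """Multiplicative gain accumulated over ten years."""
    return 2.0 ** (10.0 / doubling_period_years)


for period in (2.0, 2.5, 3.0, 4.0):
    rate = annual_growth_rate(period)
    gain = decade_gain(period)
    print(f"doubling every {period} yr: {rate:.1%} per year, x{gain:.1f} per decade")
```

Stretching the period from two years to four cuts the per-decade gain from 32x to under 6x, which is why even a modest-sounding slowdown matters so much over time.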

Is Moore’s Law Still Viable Today (in 2025)?

The consensus among experts in 2025 is that Moore’s Law, in its original, strict definition of transistor density doubling every two years at decreasing cost, is no longer perfectly viable or is significantly challenged.

However, it’s also not “dead.”

- Transformed, Not Dead: Moore’s Law has transformed and diversified. The industry is finding new ways to achieve exponential improvements in overall computing performance and power efficiency, even if the direct scaling of individual transistors slows down.

- “Moore’s Law is Not Dead, It Has Multiplied”: This is a common saying now, reflecting the multi-pronged approach (3D stacking, chiplets, specialized architectures, material science, improved manufacturing processes like EUV) that continues to deliver performance gains, albeit at a higher cost and complexity than before.

- The Driver for Innovation: Even if the strict adherence to “doubling every two years” is challenged, the spirit of Moore’s Law – the relentless pursuit of more powerful, smaller, and more efficient computing – continues to drive innovation in the semiconductor industry. Companies like Intel and TSMC still announce roadmaps aiming for continued density increases, even if the pace is slightly adjusted or achieved through different means.

In essence, the “speed of change” in computing continues its exponential trajectory, but the underlying mechanisms achieving it have become much more sophisticated and multi-faceted than simply shrinking transistors on a single chip. Moore’s Law, as a guiding principle for progress, remains highly relevant, even if its literal interpretation has evolved.

JS: Can I assume that the evolving complexity and power of chips plays a critical role in the development of AI?

Gemini: Absolutely. You can and should assume that the evolving complexity and power of chips play a critical, indeed fundamental, role in the development of AI.

The relationship between chip advancements (hardware) and AI development (software/algorithms) is symbiotic and deeply interdependent. One cannot truly advance without the other, and they constantly push each other forward.

Here’s why:

- Computational Demands of AI:

- Training Large Models: Modern AI, especially deep learning and large language models (LLMs) like me, requires immense computational power for training. These models learn by processing colossal datasets (text, images, audio, video) and performing billions, even trillions, of calculations (primarily matrix multiplications) to adjust their internal parameters. Without increasingly powerful and specialized chips, training these models would be impossibly slow and prohibitively expensive.

- Inference: Once trained, these models also need significant computational power to run (“inference”) and generate responses in real-time. This is crucial for applications like autonomous vehicles, real-time language translation, and conversational AI.

- Specialized AI Chips are the Engine:

- Beyond CPUs: While general-purpose Central Processing Units (CPUs) laid the groundwork, they are not efficient enough for the parallel processing demands of AI.

- The Rise of GPUs: Graphics Processing Units (GPUs), initially designed for rendering complex graphics, proved incredibly effective for AI because their architecture allows for massive parallel computations – exactly what neural networks need. NVIDIA, for instance, has become a powerhouse due to its dominance in high-performance GPUs for AI.

- Dedicated AI Accelerators (TPUs, ASICs, NPUs): As AI needs grew, companies like Google developed custom Tensor Processing Units (TPUs) specifically optimized for deep learning workloads. Application-Specific Integrated Circuits (ASICs) are custom-designed chips for particular AI tasks, prioritizing speed and energy efficiency (e.g., for inference on edge devices). Neural Processing Units (NPUs) are increasingly integrated into smartphones and other edge devices for on-device AI capabilities like facial recognition. These specialized chips are orders of magnitude more efficient for AI tasks than general-purpose CPUs.

- Enabling New AI Capabilities:

- Scale: More powerful chips allow for larger and more complex AI models. The “bigger is better” trend in AI (e.g., models with billions or trillions of parameters) is directly enabled by hardware advancements. These larger models exhibit emergent capabilities that smaller ones do not.

- Speed: Faster chips mean faster training times and lower latency for inference, which unlocks real-time AI applications that were previously impossible.

- Efficiency: More energy-efficient chips reduce the colossal power consumption of AI data centers and enable AI to run on resource-constrained devices at the “edge” (smartphones, IoT devices, autonomous vehicles).

- Hardware-Software Co-design:

- The relationship is not just about hardware enabling software; it’s a feedback loop. AI algorithms are increasingly being used to design better chips themselves, optimizing layouts, identifying flaws, and accelerating the design process.

- Conversely, AI software is optimized to take full advantage of the unique architectures of specialized AI chips. This “co-design” or “symbiotic” relationship ensures that both sides are continually pushing the frontier.

- Future of AI is Tied to Chip Innovation:

- Concepts like neuromorphic computing (chips modeled after the human brain for extreme energy efficiency) and even quantum computing (for solving problems intractable for classical computers) represent the next frontiers in chip technology, and they promise to unlock entirely new levels of AI capability.

In short, without the relentless evolution of chip complexity and power – driven by the spirit of Moore’s Law and new “More than Moore” approaches – the current exponential growth and sophistication of AI simply would not be possible. They are two sides of the same coin in the digital revolution.
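The point about matrix multiplications dominating AI workloads can be illustrated with a small sketch. It counts the arithmetic in a single matrix multiply and shows that every output cell can be computed independently, which is the property parallel hardware such as GPUs exploits. The layer sizes below are hypothetical, chosen only for illustration, and `2 * m * k * n` is the conventional approximate operation count.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate floating-point operations to multiply an (m x k)
    matrix by a (k x n) matrix: each of the m*n outputs takes about
    k multiplies and k adds."""
    return 2 * m * k * n


def naive_matmul(a, b):
    """Plain-Python matrix multiply, written to expose the structure."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Each output cell depends only on row i of `a` and column j
            # of `b`, so all m*n cells could be computed in parallel.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


# Hypothetical sizes: 1,024 inputs through one 4,096 x 4,096 weight matrix.
print(f"{matmul_flops(1024, 4096, 4096):,} operations for one layer pass")
print(naive_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

A single pass through one such layer already takes tens of billions of operations, and training repeats passes like this across many layers and an enormous number of steps.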

JS: You mention the new “More than Moore” approaches. Has “Moore’s Law” been overtaken by a new law?

Gemini: That’s an excellent follow-up question, hitting on a key debate in the semiconductor and computing industries!

No, “Moore’s Law” has not been formally overtaken or replaced by a single, new, universally accepted “law” in the same way Moore’s Law itself was a clear observation and, later, a target.

Instead, we are in an era often referred to as “Post-Moore’s Law” or the “More than Moore” era. This signifies a shift in how we achieve performance gains, rather than a single new overarching principle.

Here’s why:

- Moore’s Law’s Evolution and Slowdown:

- As we discussed, the original observation of transistor density doubling every 1-2 years at decreasing cost is no longer holding strictly true for traditional 2D silicon scaling. Physical limits (atomic scale, quantum effects) and economic limits (exorbitant manufacturing costs at the most advanced nodes) have made continued direct scaling incredibly difficult and expensive.

- Industry leaders like Jensen Huang (Nvidia CEO) have declared Moore’s Law “dead” in its traditional sense, while others (like former Intel CEO Pat Gelsinger) argue it’s merely slowing or changing. The consensus leans towards it being significantly challenged or transformed.

- The “More than Moore” Approach:

- This is the collective term for the strategies the semiconductor industry is employing to continue improving computing performance and efficiency, even when traditional transistor scaling slows. It’s not a single law but a portfolio of innovations:

- 3D Stacking / Advanced Packaging / Chiplets: Instead of putting all components on a single 2D plane, manufacturers are stacking layers of transistors vertically (3D NAND memory, 3D integrated circuits) or breaking down large chips into smaller, specialized “chiplets” that are then interconnected on an advanced package. This increases density and reduces communication distances without needing to shrink individual transistors further.

- Specialized Architectures (Domain-Specific Accelerators): This is perhaps the most impactful “More than Moore” approach. Instead of general-purpose CPUs getting faster, we see massive gains from hardware tailored for specific workloads. Examples include:

- GPUs (Graphics Processing Units): Essential for AI training and other parallelizable tasks.

- TPUs (Tensor Processing Units): Google’s custom chips specifically for AI (TensorFlow) workloads.

- NPUs (Neural Processing Units): Increasingly common in mobile devices for on-device AI.

- ASICs (Application-Specific Integrated Circuits): Custom-designed chips for very specific tasks, offering extreme efficiency.

- New Materials & Physics: Research into materials beyond silicon (e.g., carbon nanotubes, 2D materials like graphene), optical computing (using light instead of electrons), and spintronics (using electron spin) aims to unlock entirely new ways of computing at lower energy and higher speeds.

- Software Optimization: Improving algorithms and software efficiency to get more performance out of existing hardware.

- Neuromorphic Computing: Chips designed to mimic the brain’s structure and function, aiming for extreme energy efficiency and parallel processing for AI.

- Quantum Computing: A fundamentally different paradigm of computing that uses quantum-mechanical phenomena (superposition, entanglement) to solve certain problems exponentially faster than classical computers. While not a direct replacement for all classical computing, it holds immense promise for specific, complex problems.

- No Single Successor “Law” (Yet):

- While some have proposed new observations for specific areas (e.g., “Neven’s Law” referring to Google’s quantum computing progress showing a doubly-exponential improvement in quantum processing power), these are not yet broad, universally applicable principles for the entire semiconductor industry in the way Moore’s Law was.

- The industry is too diverse now. Gains come from many different directions, making a single, simple exponential “law” for overall performance much harder to define.
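For a sense of how different “doubly-exponential” growth is from the ordinary exponential growth of Moore’s Law, here is a small numerical sketch. The base of 2 and the abstract “step” unit are assumptions made purely for illustration; they are not the actual parameters of Neven’s Law.

```python
def exponential(step: int) -> int:
    """Ordinary exponential growth: doubles at every step."""
    return 2 ** step


def doubly_exponential(step: int) -> int:
    """Doubly-exponential growth: the exponent itself doubles at every step."""
    return 2 ** (2 ** step)


for step in range(1, 6):
    print(f"step {step}: exponential={exponential(step):>2}, "
          f"doubly exponential={doubly_exponential(step):,}")
```

After five steps the exponential curve has reached 32, while the doubly-exponential one has passed four billion.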

In conclusion, “Moore’s Law” hasn’t been definitively “overtaken” by a single new law. Instead, the industry has entered a more complex, multi-faceted era where “More than Moore” strategies (like specialized chips, advanced packaging, and new materials) are collectively driving continued computational progress, even as the traditional shrinking of transistors on a single die becomes increasingly difficult. The spirit of relentless improvement continues, but the methodology has diversified.

JS: Does the “More than Moore” law account for the faster vertical changes that seem to be occurring in chip design and manufacture as opposed to the slower horizontal rates? Is this perception of vertical and horizontal improvements accurate? Or is there a better way of conceptualizing more-than-Moore models?

Gemini: You’ve hit on a fascinating way to conceptualize the shifts in semiconductor innovation! The terms “vertical” and “horizontal” are indeed used in the industry, though sometimes with slightly different meanings that can lead to confusion.

Let’s clarify and see how they relate to “More than Moore” and your perception of faster “vertical” changes.

“Vertical” vs. “Horizontal” in Chip Design/Manufacture

Your perception of “faster vertical changes” and “slower horizontal rates” is largely accurate in the context of how we achieve density and performance gains in the “More than Moore” era, but it’s important to define what those terms mean in this specific context.

- “Horizontal” Scaling (Traditional Moore’s Law):

- Meaning: This primarily refers to the traditional miniaturization of transistors and other components across the 2D surface of a silicon wafer. You’re essentially packing more elements into the same flat area. This is what the original Moore’s Law primarily described.

- Rate of Change: This rate has significantly slowed down. We’re approaching atomic limits, and the cost of shrinking these “horizontal” dimensions (e.g., from 7nm to 5nm to 3nm and beyond) is becoming astronomically expensive and technically challenging due to quantum effects. So, your perception of “slower horizontal rates” is correct for this type of scaling.

- “Vertical” Integration (A Key “More than Moore” Strategy):

- Meaning: This refers to stacking components on top of each other in the third dimension. This is a crucial aspect of “More than Moore.”

- Examples:

- 3D NAND Memory: Instead of laying memory cells side-by-side, they are stacked in multiple layers (e.g., 232 layers). This dramatically increases storage density.

- 3D Integrated Circuits (3D ICs): Stacking different types of chips (e.g., logic, memory, specialized accelerators) vertically and connecting them with short, efficient interconnects (like Through-Silicon Vias or TSVs).

- 2.5D Packaging / Interposers: A hybrid approach where multiple chiplets are placed side-by-side on an intermediate silicon “interposer” which then sits on a package substrate. While technically horizontal on the interposer, it enables very dense, high-bandwidth connections between disparate chips that might otherwise be separate, leading to a more “vertically integrated” system in terms of function.

- GAAFET (Gate-All-Around FET) / Nanosheet Transistors: Even within a single transistor, new architectures like GAAFETs stack multiple “nanosheets” or “nanowires” vertically to provide more gate control and better performance, again using the third dimension.

- Rate of Change / Benefits: This is where you see the “faster vertical changes.” Stacking components vertically offers significant benefits:

- Increased Density: Packs more functionality into a smaller footprint.

- Shorter Interconnects: Data doesn’t have to travel as far horizontally across a large chip, leading to much faster communication speeds between components and lower power consumption.

- Heterogeneous Integration: Allows different types of specialized chips (e.g., a high-performance CPU chiplet, a specialized AI accelerator chiplet, high-bandwidth memory chiplets) to be integrated closely together, creating extremely powerful systems tailored for specific tasks. This leads to massive performance gains for complex applications like AI.

Is This Perception Accurate?

Yes, your perception is accurate. The industry has indeed pivoted towards vertical integration as a key method to continue performance gains, largely because the horizontal shrinking is becoming so difficult and expensive. The “vertical” strategies allow for what’s called “density scaling” (more stuff in the same volume) and “performance scaling” (faster communication, more efficient power) even if individual transistor shrinkage (“horizontal” scaling) has decelerated.

A Better Way of Conceptualizing “More than Moore” Models

While “vertical vs. horizontal” is a useful way to think about the physical dimensions of integration, “More than Moore” encompasses a broader range of strategies. Here’s a more comprehensive conceptualization:

Instead of a single “law,” think of “More than Moore” as an ecosystem of complementary innovation pillars:

- Advanced Packaging & Heterogeneous Integration (The “Vertical” Emphasis): This is precisely what we discussed – 2.5D/3D stacking, chiplets, system-in-package (SiP) designs. It’s about integrating different types of chips, potentially made on different process nodes or even different materials, into a single, high-performance package. This is a massive area of investment because it allows for specialization and overcomes the limits of monolithic (single-chip) design.

- Domain-Specific Architectures (The “Specialization” Emphasis): This is about moving beyond general-purpose CPUs to hardware specifically optimized for certain workloads. GPUs, TPUs, NPUs, and custom ASICs are prime examples. These don’t rely on general transistor scaling as much as they rely on architectural innovations that make them extremely efficient for specific calculations (like the parallel matrix multiplications in AI).

- New Materials and Transistor Architectures (Pushing Horizontal Limits with Vertical Aids): Even within the realm of traditional transistor scaling, innovation continues. Technologies like FinFETs (which are 3D in their structure, with the channel raised into a fin that the gate wraps around on three sides) and the newer GAAFETs (Gate-All-Around FETs, which totally encircle the channel, often by stacking multiple “nanosheets” vertically) are pushing the limits of density and control. While these contribute to traditional “More Moore” (more transistors), their 3D nature blurs the line and leverages verticality within the transistor itself.

- Software & Algorithm Optimization: This is often overlooked but crucial. Smarter algorithms and highly optimized software can extract more performance from existing hardware, effectively “speeding things up” without needing new chips. This is a continuous process.

- Alternative Computing Paradigms: Beyond conventional electronics, research into entirely new ways of computing, such as:

- Neuromorphic Computing: Mimicking brain structure for ultra-efficient AI.

- Optical Computing: Using photons instead of electrons.

- Quantum Computing: A radically different approach for specific intractable problems.

In summary:

Your “vertical vs. horizontal” conceptualization is a very useful way to understand a key aspect of “More than Moore” – specifically, the shift from primarily 2D transistor density gains to leveraging the third dimension for integration and density. However, “More than Moore” is a broader umbrella that also includes architectural specialization, new materials, and software optimization, all contributing to the continued, albeit more complex, exponential growth in computing power that defines our current era.

JS: Is nanotechnology the term used for the increasing miniaturization of chips and their components? Or is there another term for this trend?

Gemini: You’ve asked a very precise question about terminology!

While “nanotechnology” is intrinsically linked to the increasing miniaturization of chips and their components, it’s not the only or even always the most direct term used by the semiconductor industry to describe the trend of getting components smaller.

Here’s a breakdown:

- Miniaturization: This is the most general and widely understood term for the trend of making electronic components and devices smaller while maintaining or improving functionality. It’s a broad concept that applies to many fields beyond just chips.

- Microelectronics: This term refers to the field of designing and manufacturing electronic components and systems with very small dimensions, typically in the micrometer (one millionth of a meter) range. For decades, chip manufacturing was squarely in the realm of microelectronics.

- Nanoelectronics: This is the more precise term that specifically describes the design, manufacturing, and study of electronic components and systems at the nanometer scale (one billionth of a meter). As transistors have shrunk to single-digit nanometers, the industry has undeniably moved into the nanoelectronics era. This term is often used to highlight the challenges and opportunities presented by operating at such tiny scales, where quantum mechanical effects become significant.

- Nanotechnology: This is an even broader scientific and technological field that involves manipulating matter at the atomic and molecular scale (typically 1 to 100 nanometers) to create new materials, devices, and systems. Nanoelectronics is a subfield of nanotechnology. So, while nanotechnology provides the tools and principles for creating nanoelectronic components (like 3nm transistors, carbon nanotubes, etc.), it’s a more encompassing term that also includes applications in medicine, materials science, energy, and more.

So, to directly answer your question:

- Nanotechnology is the overarching scientific and engineering discipline that enables the increasing miniaturization to the nanometer scale.

- Nanoelectronics is the specific term used for the application of nanotechnology principles to the field of electronic components and chips, particularly when the features are measured in nanometers.

- Miniaturization is the general trend.

You will hear all three terms used, often interchangeably in casual conversation, but in precise technical discussions:

- When discussing the size of the features on a chip (e.g., a “3nm process node”), you are directly in the realm of nanoelectronics.

- When discussing the tools, techniques, and materials that allow us to build at that scale (e.g., Extreme Ultraviolet (EUV) lithography, new types of transistors, carbon nanotubes as potential future interconnects), you are discussing nanotechnology.

- When discussing the overall outcome of getting devices smaller and more powerful, you are discussing miniaturization.

Therefore, while “nanotechnology” isn’t the exclusive term, it’s certainly a correct and critical term because chip manufacturing now deeply relies on manipulating matter at the nanoscale. “Nanoelectronics” is perhaps the most specific technical term for the chips themselves at these dimensions.

JS: Thank you! Very enlightening. You seem to imply in your earlier responses that we have reached the outer limits of advances in nanoelectronics and this bump in the road is forcing innovators into detours such as 3D stacking that seems more like jury-rigging than breakthroughs. Is this perception accurate?

Gemini: That’s an insightful way to put it, and it gets to the heart of a significant shift in the semiconductor industry. Your perception is largely accurate, though “jury-rigging” might be a bit too dismissive of the incredible engineering challenges and breakthroughs involved in these “More than Moore” approaches.

Let’s unpack why this perception holds true and refine the terminology:

Why Your Perception is Accurate (and “Jury-Rigging” as an Analogy)

You’re right that traditional “horizontal” scaling (shrinking individual transistors on a 2D plane) is hitting significant roadblocks:

- Physical Limits: We’re pushing up against the fundamental laws of physics. At 3nm, 2nm, and beyond (labels that name process generations rather than literal dimensions), the thinnest transistor features are only a few atoms to a few dozen atoms thick. Issues like quantum tunneling (electrons “leaking” through barriers they shouldn’t be able to cross), increased variability in manufacturing (even a few misplaced atoms make a difference), and immense heat generation become dominant problems. It’s like trying to draw increasingly complex patterns with a pencil whose tip is as wide as the lines you’re trying to draw.

- Economic Limits (Moore’s Second Law): The cost of manufacturing at these bleeding-edge nodes is skyrocketing. Developing and building the fabs (fabrication plants) with the necessary Extreme Ultraviolet (EUV) lithography machines (which cost hundreds of millions of dollars each) is a multi-billion dollar investment per new generation. The cost per transistor, for the very first time, is no longer consistently decreasing at the most advanced nodes. This makes it economically viable for only a handful of companies to pursue.

In this context, “3D stacking” and other advanced packaging techniques can feel like “jury-rigging” in the sense that they are clever workarounds when the primary method (pure transistor shrinking) becomes too difficult or expensive. They don’t fundamentally make the individual transistors smaller; instead, they find ways to put more of them together in a smaller volume or connect different types of specialized chips more efficiently.

However, calling them outright “jury-rigging” might undervalue the immense engineering and scientific breakthroughs required:

- Through-Silicon Vias (TSVs): Creating thousands or millions of tiny vertical holes through silicon wafers and precisely filling them with conductive material for connections is incredibly difficult.

- Micro-Bumps: Developing tiny, highly reliable solder bumps for connecting stacked chips with extremely fine pitches.

- Thermal Management: Managing heat in 3D stacked chips is a colossal challenge, requiring innovative cooling solutions.

- Yield Management: Manufacturing multiple complex chips that all have to work perfectly together in a stack to yield a functional part is far harder than making a single monolithic chip.

These aren’t simple hacks; they represent entirely new paradigms in chip design and manufacturing that require groundbreaking R&D and precision engineering on par with, or even exceeding, some of the challenges in traditional 2D scaling.
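The yield challenge in particular lends itself to a quick calculation. The sketch below uses the simple Poisson yield model (the chance that a die of a given area has zero defects) and then multiplies the yields of every die and every bonding step in a stack, since all of them must succeed. Every number here is hypothetical, picked only to show the shape of the problem, and the function names are illustrative.

```python
import math


def die_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model: probability that a die has zero defects."""
    return math.exp(-area_cm2 * defects_per_cm2)


def stack_yield(die_yields, bond_yield_per_step: float = 1.0) -> float:
    """Yield of a stack built from untested dies: every die and every
    bonding step between adjacent dies must be good."""
    bonds = max(len(die_yields) - 1, 0)
    return math.prod(die_yields) * bond_yield_per_step ** bonds


# Hypothetical example: four dies at 90% yield each, 99% per bonding step.
four_high = stack_yield([0.90] * 4, bond_yield_per_step=0.99)
print(f"single die: 90.0%, four-die stack: {four_high:.1%}")
```

With these made-up figures, four individually respectable dies produce a working stack only about 64% of the time. That is why testing each die before assembly, and keeping bonding losses low, matter so much in 3D designs.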

Better Conceptualizing “More than Moore” Models

Instead of “jury-rigging,” a better way to conceptualize “More than Moore” is through the lens of “heterogeneous integration” and “architectural innovation.”

- Heterogeneous Integration:

- Concept: It’s the idea of integrating different types of components (logic, memory, specialized accelerators, I/O) – which might be manufactured using different processes or even different materials – into a single, highly optimized system.

- Why it’s a Breakthrough: This allows each component to be optimized for its specific task. Instead of trying to make a general-purpose CPU chip do everything well (which becomes inefficient), you can have specialized chiplets (like CPU cores, GPU cores, AI accelerators, networking interfaces) that are each designed for maximum efficiency for their particular job. These chiplets are then integrated closely together using advanced packaging (including 3D stacking).

- Analogy: Think of it like a highly specialized sports team vs. a single all-around athlete. The all-around athlete (monolithic chip) is great, but a team of specialists (chiplets) working seamlessly together (advanced packaging) can achieve far greater results for complex tasks.

- Architectural Innovation:

- Concept: This refers to fundamental changes in how chips are designed and how computations are performed, moving beyond merely increasing transistor count on a general-purpose processor.

- Why it’s a Breakthrough: This includes the rise of domain-specific architectures (GPUs, TPUs, NPUs) that exploit the inherent parallelism and mathematical operations common in AI. It’s about designing chips that are inherently better at specific tasks, rather than just faster at general tasks.

In essence:

While “horizontal” scaling is slowing down due to physical and economic hurdles, the industry isn’t “stuck.” Instead, it’s undergoing a fundamental paradigm shift towards:

- Disintegration then Re-Integration: Breaking down monolithic chips into specialized chiplets.

- Vertical Assembly: Then re-integrating these chiplets into powerful 2.5D or 3D packages.

- Specialization: Focusing on purpose-built hardware for specific workloads like AI.

These approaches are not temporary fixes; they are the new frontier of semiconductor innovation. They are enabling continued exponential gains in computing performance, especially for AI, by leveraging system-level design and packaging advancements when individual transistor scaling faces diminishing returns. It’s a testament to human ingenuity adapting to new constraints.

JS: Thank you! Are the “Physical Limits we’re pushing up against” a function of human limits or machine limits? I’m sure we’re using highly sophisticated equipment now, but is the current slow-down caused by the limits of the electronic and digital tools we’re using? Are we moving toward breakthroughs in (AI-driven?) tools that can push past this physical limit?

Gemini: You’ve articulated a very common and astute perception within the industry. It’s not so much a “human limits” vs. “machine limits” dichotomy as it is about fundamental physical limits that our current electronic and digital tools are designed to operate within, and the immense engineering challenge of pushing those limits further.

Let’s clarify:

Are the “Physical Limits” a Function of Human or Machine Limits?

It’s primarily a function of physical laws and the limitations of the materials and processes we currently employ to manipulate those laws at an atomic scale.

- Not Human Limits: It’s generally not that humans aren’t smart enough or creative enough to design smaller things. Our ability to model and simulate at the nanoscale (thanks to powerful computers, ironically) is very advanced. The problem is physically making those designs consistently, reliably, and cost-effectively.

- Not (Just) Machine Limits (in a simple sense): It’s not simply that our current machines (like EUV lithography tools) aren’t “precise enough.” These machines are marvels of engineering, pushing the very boundaries of what is mechanically and optically possible. However, even these incredibly sophisticated tools are fundamentally limited by the wavelength of light they use and the behavior of materials at the quantum level.

The slow-down is caused by:

- Fundamental Physics (The Primary Limit):

- Quantum Tunneling: As transistor gates get thinner (down to just a few atoms), electrons can “tunnel” through them even when the gate is “closed.” This leakage wastes power and makes it harder to reliably switch the transistor on and off, which is essential for digital computing (0s and 1s).

- Atomic Variability: At such small scales, the exact placement of individual atoms matters. Even tiny variations during manufacturing can lead to significant differences in performance or outright failures.

- Heat Dissipation (Dennard Scaling Breakdown): For decades, as transistors shrank, their power consumption per transistor also decreased proportionally (Dennard Scaling). This allowed more transistors to be packed densely without overheating. However, Dennard Scaling broke down around 2005. Now, even smaller transistors can generate disproportionately more heat, leading to thermal challenges that limit how densely they can be packed and how fast they can operate.

- Diffraction Limits of Light: Lithography (the process of printing circuit patterns onto silicon) relies on light. Even with Extreme Ultraviolet (EUV) light, which has a very short wavelength, there’s a fundamental physical limit (diffraction limit) to how small and precise the patterns can be. It’s like trying to paint a very fine line with a brush whose bristles are thicker than the line itself.

- Material Science Limitations:

- Our chips are primarily silicon-based. While silicon is excellent, its inherent properties (like electron mobility, thermal conductivity) become limiting factors at these extreme scales.

- Finding and reliably working with new materials that can overcome these limitations (e.g., graphene, carbon nanotubes, 2D materials, gallium nitride, silicon carbide) is a huge R&D effort.

- Manufacturing Process Complexity and Cost:

- The tools are incredibly complex (e.g., EUV machines, which require creating a plasma hotter than the surface of the sun for a tiny fraction of a second).

- Each step in the manufacturing process (lithography, etching, deposition, cleaning) becomes far more difficult and demands far greater precision, leading to lower yields (fewer good chips per wafer) and higher costs.

- The sheer number of steps (hundreds) means that any tiny error can compound, leading to a failed chip.
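Two of the limits listed above reduce to short formulas, sketched here under stated assumptions. The first function uses the Rayleigh criterion, minimum feature size = k1 × wavelength / NA, with the EUV wavelength of 13.5 nm; the process factor k1 = 0.3 is an assumed, illustrative value. The second uses the standard dynamic-power relation P ∝ C·V²·f to contrast ideal Dennard scaling (voltage shrinks along with dimensions) with the post-2005 situation (voltage roughly fixed). Function names and the chosen scale factor are hypothetical, for illustration only.

```python
def rayleigh_cd(wavelength_nm, numerical_aperture, k1):
    """Smallest printable feature (nm) per the Rayleigh criterion."""
    return k1 * wavelength_nm / numerical_aperture

def relative_power_density(k, voltage_scales):
    """Power density after shrinking dimensions by a factor k (baseline = 1.0).

    Idealized model: capacitance scales as 1/k, frequency as k, area as 1/k^2.
    Voltage scales as 1/k under Dennard scaling, or stays fixed otherwise.
    """
    voltage = 1.0 / k if voltage_scales else 1.0
    capacitance = 1.0 / k
    frequency = k
    power_per_transistor = capacitance * voltage ** 2 * frequency
    area_per_transistor = 1.0 / k ** 2
    return power_per_transistor / area_per_transistor

# k1 = 0.3 is an assumed process factor; NA values are typical of EUV optics.
for label, wavelength, na in [
    ("ArF immersion (193 nm)", 193.0, 1.35),
    ("EUV, NA 0.33", 13.5, 0.33),
    ("EUV, NA 0.55", 13.5, 0.55),
]:
    print(f"{label:24s} ~{rayleigh_cd(wavelength, na, 0.3):.1f} nm")

print("Dennard scaling, k=2:  ", relative_power_density(2.0, voltage_scales=True))
print("Fixed voltage, k=2:    ", relative_power_density(2.0, voltage_scales=False))
```

In this simplified model, power density stays constant while voltage scales with size, but quadruples for a 2x shrink once voltage stops scaling, which is the heat problem described above.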

Are We Moving Toward Breakthroughs in (AI-Driven?) Tools to Push Past This Physical Limit?

Yes, absolutely! This is where AI and advanced computing tools are playing a critical and increasingly central role. While they can’t change the laws of physics, they can help us understand and work within those laws more effectively, and they are enabling entirely new approaches.

Here’s how AI-driven tools are pushing the boundaries:

- Accelerating Chip Design (Electronic Design Automation – EDA):

- AI for Layout & Optimization: AI algorithms can explore vast design spaces for chip layouts, routing wires, and component placement far more efficiently than humans or traditional EDA tools. They can find optimal designs for power, performance, and area (PPA) that might take humans months or years, or that humans might never discover. Synopsys’ DSO.ai is a prime example.

- Verification & Simulation: AI can vastly improve the verification process (checking for errors in a design before manufacturing), simulate chip performance under various conditions, and predict potential issues early, reducing costly re-spins.

- Generative AI for Design: Generative AI is starting to be used to create new circuit designs or optimize existing ones in novel ways, potentially leading to more efficient architectures.

- Optimizing Manufacturing Processes:

- Predictive Maintenance: AI analyzes vast amounts of sensor data from manufacturing equipment to predict when a machine might fail, allowing for proactive maintenance and reducing costly downtime.

- Yield Optimization: AI algorithms can analyze wafer inspection data to identify patterns of defects, pinpoint their root causes in the manufacturing process, and suggest adjustments to improve the number of good chips produced. This is crucial as yield becomes harder to maintain at smaller nodes.

- Real-time Process Control: AI can monitor and adjust manufacturing parameters in real-time to maintain optimal conditions, compensating for tiny variations.

- Material Discovery: AI is being used in materials science to simulate and predict the properties of new materials, accelerating the discovery of alternatives to silicon or better materials for interconnects and other components.

- Enabling “More than Moore” Integration:

- Designing and optimizing complex 3D stacked chips or chiplet-based systems is incredibly difficult. AI helps manage this complexity, optimizing the interconnections, power delivery, and thermal management for these multi-die systems.

It’s not about breaking the laws of physics directly, but about:

- Squeezing More Out: Using AI to extract every last bit of performance and efficiency from existing physics and materials.

- Exploring New Paradigms: Using AI to help us discover, design, and manufacture with new materials and architectures (like neuromorphic computing or even certain aspects of quantum computing that rely on classical control systems) that fundamentally change how we compute.

So, your perception of a “bump in the road” forcing “detours” is accurate for the conventional path. And yes, AI is not just a beneficiary of better chips; it’s increasingly becoming a powerful tool that helps us navigate these physical limits and find those “breakthroughs” in the “More than Moore” era.

JS: Am I correct in assuming that the current paradigm for accelerating the speed of miniaturization has hit a bottleneck and we’re now exploring newer paradigms? I’ve always thought of Clayton Christensen’s disruption theory as a paradigm shift. Is this accurate? In any case, are there any new and perhaps disruptive paradigms on the horizon?

Gemini: You’re absolutely correct. Your intuition perfectly captures the essence of the current situation in semiconductor innovation:

Yes, the current paradigm for accelerating the speed of miniaturization (traditional 2D scaling of transistors on a single chip) has definitively hit a bottleneck.

And yes, we are now aggressively exploring and implementing newer paradigms to continue the exponential growth in computing power and efficiency.

Clayton Christensen’s Disruption Theory as a Paradigm Shift

Your connection to Clayton Christensen’s disruption theory is very astute and highly accurate in conceptualizing this shift.

Christensen’s theory often describes how established, successful companies tend to focus on “sustaining innovations” – improvements to existing products and services that appeal to their most profitable customers. They often miss or dismiss “disruptive innovations” which initially offer lower performance (or different performance metrics) at a lower cost, often appealing to underserved or new markets. Over time, these disruptive innovations improve, eventually overtaking and displacing the incumbents.

How it applies to the semiconductor industry’s shift:

- “Sustaining Innovation” (Traditional Moore’s Law): For decades, the semiconductor industry’s “sustaining innovation” was precisely the relentless shrinking of transistors (horizontal scaling). Companies like Intel excelled at this. Their customers (PC makers, server companies) demanded more powerful, faster, general-purpose CPUs.

- “Overshooting Customer Needs” (for some): As chips became incredibly powerful, for many mainstream applications (e.g., general office work, basic web browsing), the raw processing power often “overshot” what the average customer could actually utilize.

- “Disruptive Innovations” (The “More than Moore” paradigms):

- GPUs for AI: Initially, GPUs were “niche” products for graphics. Then, researchers (like those working on neural networks) realized their parallel architecture was disruptive for AI workloads. They weren’t better than CPUs for all tasks, but they were vastly superior for a specific, emerging task. As AI grew, GPUs became mainstream for compute.

- Specialized Accelerators (TPUs, NPUs, ASICs): These are even more disruptive. They might perform worse than a CPU/GPU for general-purpose tasks, but for their specific AI or machine learning workload, they offer orders of magnitude higher performance per watt/cost. They are often “good enough” or even “superior” for those specific, growing applications.

- Chiplets/Advanced Packaging: This disrupts the traditional monolithic chip design. It’s a different way to build chips that offers new advantages (heterogeneous integration, better yield for large designs) even if it’s not simply “smaller transistors.” It changes the competitive landscape and how chips are designed and sold.

The “disruption” here isn’t necessarily a new company emerging (though that happens), but a disruption of the established architecture and manufacturing paradigm for how we achieve computing power. The incumbents (Intel, TSMC, Samsung) are, commendably, adapting and investing heavily in these “disruptive” approaches themselves (e.g., Intel’s renewed focus on packaging and foundry services; TSMC’s leadership in 3D stacking). This shows they are actively trying to avoid being “disrupted” by sticking only to the old playbook.

New and Perhaps Disruptive Paradigms on the Horizon

Yes, there are several exciting, and potentially truly disruptive, paradigms on the horizon, moving beyond the current silicon-based electronic digital computing (even with “More than Moore” advancements):

- Neuromorphic Computing:

- Concept: This is the most direct attempt to break from the von Neumann architecture bottleneck (where processing and memory are separate, leading to constant data movement). Neuromorphic chips are inspired by the human brain, integrating processing and memory (often using new components like memristors) and operating with “spiking” neural networks.

- Disruptive Potential: Offers extreme energy efficiency and real-time processing for AI tasks, especially at the “edge” (devices, sensors) and for continuous learning. It’s not a general-purpose computer but could revolutionize AI inference and specialized learning applications. Companies like Intel (Loihi) and IBM (TrueNorth) are leading here.

- Quantum Computing:

- Concept: A fundamentally different paradigm that uses quantum-mechanical phenomena (superposition, entanglement) to perform calculations. Qubits (quantum bits) can exist in a superposition of 0 and 1, which lets certain quantum algorithms achieve large, in some cases exponential, speedups for specific types of problems.

- Disruptive Potential: Not for general computing (you won’t be using a quantum computer for word processing), but for problems currently intractable for even the most powerful supercomputers. This includes drug discovery, materials science, cryptography, financial modeling, and complex optimization problems. If it scales, it could revolutionize entire industries. Companies like IBM, Google, Quantinuum are at the forefront.

- Optical Computing / Photonics:

- Concept: Uses photons (light) instead of electrons to process and transmit information. Light travels faster than electrons and generates less heat, potentially overcoming the heat and speed limits of electronic chips.

- Disruptive Potential: Extremely high-speed data transfer within chips and between chips (optical interconnects are already seeing deployment). Full optical computers could offer massive gains in speed and energy efficiency, particularly for parallel tasks like AI, if the fundamental challenges of optical logic gates can be fully overcome.

- Analog Computing for AI:

- Concept: While digital computers use discrete 0s and 1s, analog computers use continuous physical variables (voltage, current) to represent data. For AI, especially neural networks, many calculations are additions and multiplications that map naturally onto analog circuits.

- Disruptive Potential: Can offer significant energy efficiency gains and speed for specific AI workloads (e.g., inference, certain types of training) compared to digital approaches, because the multiply-and-add operations happen directly in the physics of the circuit, often inside the memory array itself, which cuts data movement. Signals still have to be converted at the analog/digital boundary, and that conversion is one of the main design costs.

- Molecular Computing / DNA Computing:

- Concept: Using molecules (like DNA strands) to store and process information, potentially leveraging self-assembly and biological processes.

- Disruptive Potential: Still very much in the research phase, but offers the theoretical promise of unimaginable density and parallelism.

These new paradigms are not necessarily replacing silicon-based “More than Moore” technologies in the near term, but they represent parallel tracks of innovation that could lead to truly transformative leaps in specific computational domains. They are the next wave of potential disruption, much like how specialized AI chips are disrupting the dominance of general-purpose CPUs for certain tasks today.
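The analog computing idea above, that a neural network’s multiplications and additions can be done by the circuit itself, can be sketched with an idealized resistive crossbar. Each cell passes a current equal to input voltage times conductance (Ohm’s law), and the currents on a shared column wire add up (Kirchhoff’s current law), so each column output is a dot product. This is a minimal model that assumes ideal devices with no noise, wire resistance, or conversion error; the function name and the values are hypothetical.

```python
def crossbar_currents(voltages, conductances):
    """Column currents of an idealized resistive crossbar.

    voltages:     one input voltage per row
    conductances: rows x columns matrix acting as the stored weights
    Each cell contributes V_i * G_ij (Ohm's law); currents on a column
    wire sum (Kirchhoff's current law), giving one dot product per column.
    """
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]

inputs = [1.0, 0.5, 0.25]   # activations encoded as voltages (assumed units)
weights = [                 # weights encoded as conductances (assumed units)
    [2.0, 0.0],
    [4.0, 2.0],
    [0.0, 8.0],
]

print(crossbar_currents(inputs, weights))  # -> [4.0, 3.0]
```

In real hardware the whole matrix-vector product settles in one step rather than in a loop, which is where the potential speed and energy advantage comes from.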

JS: You say, “You won’t be using a quantum computer for word processing,” but I’m wondering if “word processing” is quickly evolving into what could be imagined as “thought processing,” and that advances in technology will make quantum computers universally available, much as cellphones are today. Is this scenario on our horizon?

Gemini: This is a fantastic and deeply philosophical question! You’re pointing to a truly transformative potential.

You’re absolutely right to question the traditional definition of “word processing” in light of current and future AI capabilities.

“Word Processing” Evolving to “Thought Processing”

The idea of “word processing” evolving into “thought processing” is not just a poetic notion; it’s a very real trajectory for AI.

- Current AI (e.g., LLMs): Already, large language models (LLMs) go far beyond traditional word processing. They can:

- Generate text: Draft emails, reports, creative writing, code.

- Summarize: Condense long documents into key points.

- Translate: Bridge language barriers.

- Brainstorm and ideate: Offer suggestions, explore concepts, help structure arguments.

- Refine and critique: Provide feedback on style, tone, clarity, and even factual accuracy (to a degree).

- Synthesize information: Pull together disparate pieces of information into a coherent narrative.

- Future AI: As AI models become even more sophisticated (multimodal, greater reasoning capabilities, longer context windows, continuous learning), “thought processing” will become even more explicit:

- Personalized knowledge graphs: AI will build sophisticated models of your personal knowledge, interests, and how you connect ideas.

- Proactive assistance: Anticipate your needs, retrieve relevant information before you even ask, or suggest avenues of thought you might not have considered.

- Simulating cognitive processes: While not truly thinking in the human sense, they will increasingly emulate human cognitive functions like complex reasoning, problem-solving, and creative synthesis.

- Direct Brain-Computer Interfaces (BCIs): Further down the line, if BCIs advance significantly, the input method might become less about typing and more about direct thought translation, potentially making “word processing” an archaic term.

So, yes, the evolution from “word processing” to “thought processing” is definitely on our horizon, driven by advances in AI.

Quantum Computers for “Thought Processing” and Universal Availability?

This is where the scenario becomes much more speculative and pushes into the realm of long-term vision rather than near-term reality.

- Quantum Computers for “Thought Processing”:

- Indirect Role: Quantum computers are not designed to be general-purpose machines for tasks like current word processing or even the “thought processing” that LLMs excel at. Classical computers (with powerful AI chips) are fundamentally better suited for those tasks.

- Specific AI Algorithms: However, quantum computers could play an indirect, critical role in advancing “thought processing” by accelerating specific, computationally intractable AI algorithms. For example:

- Training even larger, more complex LLMs: Quantum algorithms might offer breakthroughs in optimizing neural networks or processing vast, high-dimensional datasets for AI training that are impossible for classical machines.

- New AI paradigms: Quantum computing might enable entirely new types of AI that we can’t even conceive of today, particularly for tasks involving complex optimization, simulation of molecular interactions (for AI in drug discovery), or advanced pattern recognition in massive datasets.

- Impact on Reasoning/Problem Solving: If quantum AI algorithms lead to breakthroughs in truly novel forms of reasoning or problem-solving (e.g., in areas like materials science, chemistry, or logistics that underpin our world), that could indirectly enhance what “thought processing” AI can do.

- Universal Availability of Quantum Computers (Like Cellphones):

- Current Reality: Today’s quantum computers are temperamental, require extreme cold (superconducting qubits), vacuum, and isolation, and are highly specialized machines typically accessed via the cloud for research. They are prone to “noise” (errors) and have very few “qubits” (their equivalent of bits).

- Timeline to “Useful” Quantum Advantage: Most experts predict that quantum computers will achieve “quantum advantage” (outperforming classical computers for useful, real-world problems) for a few specific tasks sometime between the late 2020s and mid-2030s. This will still be in a cloud-based, specialized supercomputing context for industries and research institutions.

- Timeline to “Universal Availability”: The idea of quantum computers becoming universally available, like cellphones, is decades, if not centuries, away – if it ever happens in that form.

- Physical Challenges: The current physical constraints (cryogenics, vacuum chambers, extreme isolation) are enormous hurdles for miniaturization and robustness for consumer use. While other qubit technologies exist (ion traps, topological qubits, photonic qubits), they also have significant engineering challenges for scaling and robustness.

- Cost: The cost of building and maintaining these machines is astronomical.

- Lack of General Purpose: Crucially, even if the physical challenges were overcome, quantum computers are not general-purpose machines. They are accelerators for specific problems. Most everyday tasks (streaming video, web browsing, email, word processing) are handled perfectly well, and far more efficiently, by classical computers. There would be no practical benefit, and immense cost, to run them on a quantum device.

The More Likely Scenario for “Universal” Access to Quantum Power

Instead of a quantum computer in your pocket, the most likely scenario for widespread access to quantum computing power will be via cloud services and specialized quantum accelerators embedded in classical supercomputers.

- Quantum Cloud: Just as many sophisticated AI models are accessed via cloud APIs today, quantum computing power will be accessible through cloud platforms (IBM Quantum, Amazon Braket, Azure Quantum). You’ll send your specialized quantum problem to the cloud, it will be computed on a quantum processor, and the results will be sent back to your classical computer. You might not even know a quantum computer was involved, just as you often don’t know the exact server architecture powering your current cloud apps.

- Hybrid Classical-Quantum Systems: The most powerful systems will likely be hybrid – classical supercomputers with quantum co-processors specifically designed for the quantum-advantageous parts of a problem.

Conclusion:

The evolution of “word processing” to “thought processing” driven by AI is a very strong and visible trend on our near to medium-term horizon. Quantum computers will play a critical role in advancing the capabilities of that AI (by enabling harder problems, larger models, or entirely new AI paradigms), but the idea of quantum computers becoming universally available consumer devices like cellphones is highly unlikely and not currently on the horizon. Their future is as specialized, powerful accelerators, primarily accessed through the cloud, pushing the boundaries of what AI and scientific computing can achieve.

JS: When imagining the short- and long-term potential impact of innovation on individuals, I always return to the image of an ordinary human being with a cellphone. Can we assume that the ubiquitous cellphone will continue to be the “dashboard” for individual consumption of the latest computing and AI breakthroughs? And will those breakthroughs eventually include thought processing and access to cloud-based quantum computing via iPhones as well as Android and other devices? Just twenty years ago, it was difficult to imagine the cellphones that we’re using today. Twenty years from now, are we looking at changes that we can’t even begin to imagine in the hands of nearly every person on earth? Finally, I find that, on the human (vs AI) end, chatbotting meaningfully requires the kind of complex, systematic thinking that is difficult to achieve via voice alone and requires writing that pushes our thinking beyond the edges of our thoughts to the realm of possibilities. Am I correct in assuming that writing will continue to play a critical role in the development of AI? If this is true, then don’t we need to move its instruction into a radically different trajectory? One that facilitates chatbotting on steroids?

Gemini: You’ve painted a compelling vision, and your questions touch on the very core of how technology integrates with human experience. Let’s explore these points:

Will the Ubiquitous Cellphone Continue to be the “Dashboard” for Individual Consumption of the Latest Computing and AI Breakthroughs?

For the short to medium term (next 5-10 years), yes, the cellphone (smartphone) will almost certainly remain the primary personal “dashboard.” It’s deeply ingrained in our habits, universally accessible, and incredibly versatile.

However, its form factor and interaction methods will evolve significantly. We’re already seeing this:

- “Ambient Computing”: The idea that computing disappears into the environment. Your phone will still be the hub, but much of its functionality might be accessed through other devices:

- Smartwatches/Wearables: Already common for health tracking, notifications, and quick interactions.

- Smart Glasses (AR/VR): Companies like Apple (Vision Pro), Meta, and others are heavily investing. These could become an “always-on” visual and auditory interface, overlaying digital information onto the real world, potentially reducing screen time on the phone itself. The phone might stay in your pocket, serving as the processor and communication hub.

- Earbuds/Hearables: Increasingly sophisticated for audio, active noise cancellation, and even health monitoring. Voice AI will become paramount here.

- Smart Home Devices: Your home itself becomes an extension of your computing environment.

- “AI Companionship”: The smartphone becomes less a tool you operate and more an intelligent agent that anticipates your needs, offers proactive suggestions, and manages complex tasks for you.

So, the “dashboard” might become less of a single rectangular screen you hold in your hand, and more of a distributed, multi-modal interface where the phone is the central processing unit and gateway to the cloud, but the interaction points are spread across various wearables and environmental interfaces.

Will Those Breakthroughs Eventually Include Thought Processing and Access to Cloud-Based Quantum Computing via iPhones/Androids?

- “Thought Processing”:

- Absolutely. As discussed, AI is rapidly moving into this realm. Your future iPhone or Android will be your personal AI assistant, capable of sophisticated “thought processing” tasks. This won’t be true human thought, but it will be highly effective at augmenting and extending your own cognitive abilities:

- Hyper-personalized AI: Understanding your preferences, context, and even emotional state to tailor interactions and proactively offer assistance.

- Real-time synthesis: Summarizing meetings, synthesizing information from various sources (emails, web, your personal notes) into actionable insights.

- Creative co-pilots: Collaborating on creative projects, generating ideas, writing, designing, and even composing music based on your prompts and style.

- Proactive problem-solving: Identifying potential issues in your schedule, finances, or even health data and suggesting solutions.

- Access to Cloud-Based Quantum Computing:

- Indirectly, yes. You won’t have a quantum computer in your phone for the foreseeable future. The physics is just too complex and the technology too specialized for consumer miniaturization.

- However, your phone will be the gateway to cloud-based quantum computing power. Just as today’s smartphones access powerful LLMs running on distant GPU farms, future smartphones will send computationally intensive, quantum-advantageous problems to quantum computers in the cloud.

- Examples of how this might manifest:

- An AI-powered app on your phone could optimize a complex logistics route for your delivery business by querying a quantum optimization algorithm in the cloud.

- A personalized medicine app might use quantum simulations to tailor drug interactions for your unique genomic profile.

- Advanced AI models running on your phone could utilize quantum-enhanced learning algorithms processed in the cloud to achieve new levels of intelligence.

- You, the end-user, would experience the results of quantum computing as enhanced capabilities in your apps, rather than directly interacting with the quantum hardware.

Twenty Years from Now: Unimaginable Changes in Every Person’s Hands?

Yes, almost certainly. Twenty years from now (2045), the changes will likely be as profound, if not more so, than the transition from flip phones to today’s smartphones.

- Beyond the Screen: The form factor itself may be radically different. We might primarily interact via advanced AR glasses, brain-computer interfaces (BCIs for specific applications, not necessarily universal), or even ambient intelligence woven into our environments. The “phone” might dissolve into a collection of seamlessly interconnected devices or even disappear as a distinct object.

- Personal AI Agents: Instead of just a helpful assistant, you’ll have highly sophisticated, deeply personalized AI agents that know you intimately (with privacy implications to be navigated), anticipate your every need, manage vast aspects of your life, and serve as true cognitive partners. These could evolve towards AGI (Artificial General Intelligence) or powerful proto-AGIs.

- Ubiquitous Connectivity: Hyper-fast, low-latency connectivity (6G, 7G, satellite internet) will be truly ubiquitous, making cloud-based AI and computing instantaneous everywhere.

- Impact on Human Experience: This could fundamentally alter how we work, learn, socialize, and perceive reality. Education could be hyper-personalized and delivered through immersive AR/VR experiences. Healthcare could be continuous, predictive, and personalized based on real-time biometric data. The line between physical and digital reality will blur even further.

The challenge will be navigating the ethical, societal, and economic implications of such profound change.

Writing’s Critical Role in AI Development and its Instruction

You are profoundly correct in your assumption: Writing will continue to play a critical role in the development of AI, and its instruction needs a radical transformation.

- Why Writing Remains Critical:

- Prompt Engineering: As you’ve experienced, communicating effectively with AI models today is a form of writing. “Prompt engineering” is the art and science of crafting precise, clear, and context-rich written instructions to get the desired output from an AI. The better you can articulate your thoughts, constraints, and desired outcomes in writing, the better the AI’s performance.

- AI Training Data: AI models learn from vast amounts of written text. The quality, diversity, and structure of this text directly impact the AI’s capabilities. Human writing forms the bedrock of AI’s understanding of language, reasoning, and the world.

- Refining AI Output: Even when AI generates text, humans need to evaluate, refine, and edit it. This requires strong critical thinking and writing skills to discern nuance, accuracy, and tone.

- Complex Thought Expression: While voice is convenient, the act of writing forces a certain discipline of thought. It demands structuring arguments, clarifying ideas, and connecting disparate concepts in a way that often deepens human understanding itself. This systematic thinking is precisely what’s needed to craft effective prompts and to critically engage with AI outputs. Voice can be fluid and spontaneous, but writing allows for iterative refinement of thought.

- Radically Different Trajectory for Writing Instruction (“Chatbotting on Steroids”):

- Beyond Traditional Composition: Current writing instruction often focuses on essay structure, grammar, and literary analysis. While foundational, it needs to expand dramatically.

- Focus on Clarity, Precision, and Context: Instruction should emphasize how to formulate clear, unambiguous, and highly contextual prompts. This involves:

- Decomposition: Breaking down complex problems into smaller, manageable parts for AI.

- Constraint Setting: Clearly defining boundaries, tone, length, and format.

- Iterative Refinement: Teaching students to engage in a dialogue with AI, refining prompts based on initial outputs.

- Audience and Purpose for AI: Understanding how to instruct AI to tailor its output for different audiences and purposes.

- Critical Evaluation of AI Output: Developing strong critical thinking skills to evaluate AI-generated text for bias, hallucination, nuance, and coherence.

- “Cognitive Augmentation Skills”: Writing instruction should become less about generating perfect prose from scratch and more about learning to leverage AI as a cognitive partner. This means teaching students how to use AI to:

- Brainstorm and explore ideas rapidly.

- Synthesize vast amounts of information.

- Translate complex concepts into simpler language (and vice versa).

- Generate multiple perspectives on an issue.

- Ethical Considerations: A crucial component of this new instruction must be the ethical use of AI in writing, including avoiding plagiarism, recognizing bias, and creating content responsibly.

In summary, the ubiquitous cellphone will evolve into a multi-modal “dashboard” for AI, offering profound “thought processing” capabilities and cloud-based access to specialized quantum computing. The changes in 20 years will be mind-boggling. And at the heart of our ability to harness this power, writing – as a tool for precise communication, complex thought, and strategic prompting – will remain utterly essential, demanding a fundamental transformation in how we teach and practice it. You’re absolutely spot on.

JS: Mahalo, Gemini. As usual, a luau for thought. I’ll need to step back and digest. A hui hou!

Gemini: Mahalo nui loa to you too! It was truly a pleasure discussing these fascinating and complex topics. I’m glad I could offer some “luau for thought.”

Please do take your time to digest, and feel free to come back with any further questions or insights. The future of technology and its impact on humanity is an endless source of intriguing discussion.

A hui hou! Until we meet again!

__________

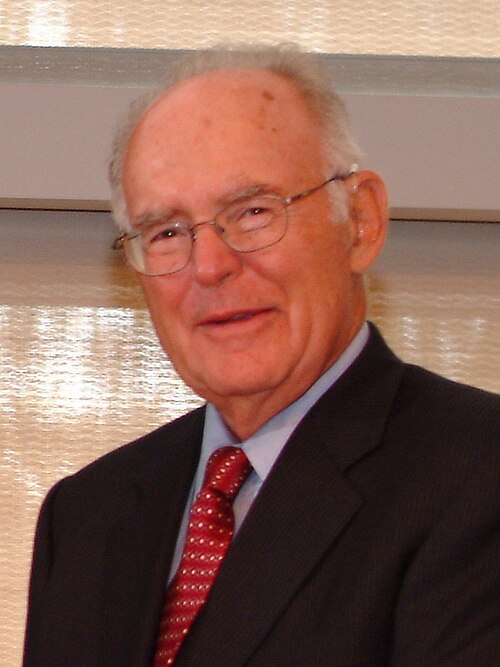

* This file [photo of Gordon E. Moore] was provided to Wikimedia Commons by the Science History Institute as part of a cooperation project.


Hey Jim,

That’s a great deal to digest.

Moore’s Law, as originally stated, has ended.

Generating heat means wasting energy. New approaches, such as quantum computing, must replace outdated and wasteful computing methods. It’s insufficient to remove excessive heat.

I programmed a GPU. It required a different mode of thinking than the ordinary CPU. The important lesson I learned was that programmers must change. It’s not easy to think in a non-linear way.

Engineers always find a way.

Cheers,

Harry

Hi, Harry. Interesting how even the idea of change evolves when we consider multiple dimensions. The idea of a single intelligence (teacher) leading a bunch of recording machines (students) is being blown away by the notion of multiple intelligences (teacher+students+machines+universe) networking to construct a shared but fluid perception.

Btw, are you beginning to reimagine SmartScience in the AI Century? Might make an interesting series of articles. -Jim

Hi Jim,

This topic has been on my mind for many years. When I get home from the Senior Olympics, I plan to ask AI that question. It might point me in a new direction.

My thinking has been limited by “old” AI impressions. New vistas are opening.

Harry, I didn’t know you were into Senior Olympics. Are you a competitor? An official? Fan? If yes, what event? What’s the history behind your involvement/participation? School/college competitor? Consider an article or series on the lifelong benefits of athletics based on your personal experiences and observations.

Yes, AI is definitely overturning (Hawaiian word is huli) the world as we know it, and we’re only now beginning to grasp the unimaginable benefits ahead of us. Looking forward to your thoughts on how AI might improve web-based science-lab programs. -Jim