By Jim Shimabukuro (assisted by DeepSeek)

Editor

(Related: Feb 2026, Jan 2026, Dec 2025, Nov 2025, Sep 2025)

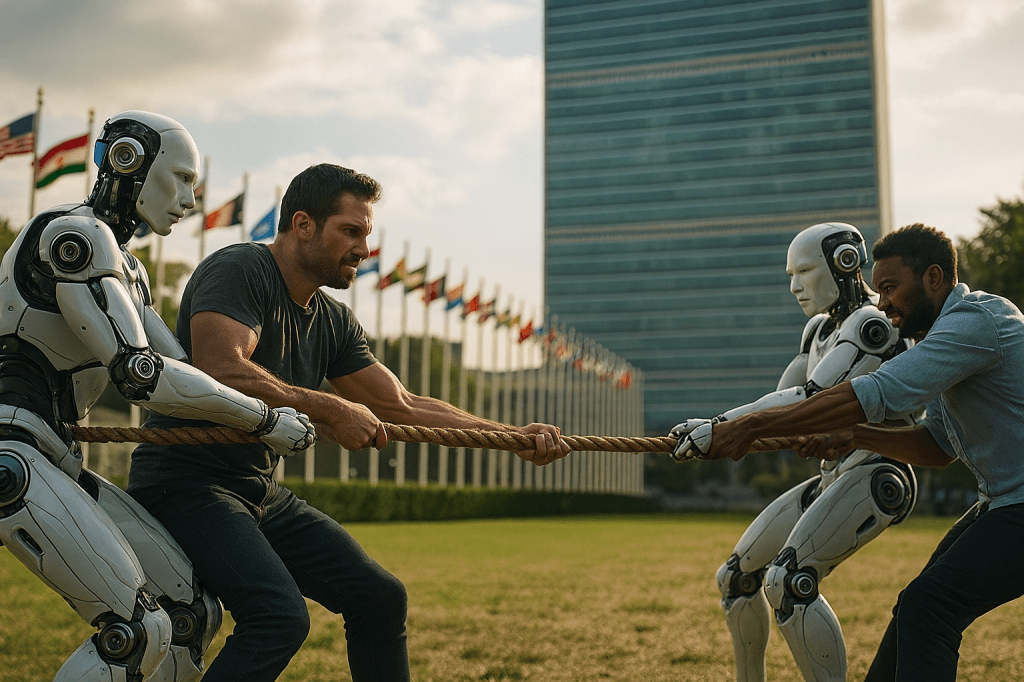

Nearly a month ago, on 1 September 2025, I asked Perplexity to identify three pressing decisions that the global AI community is or should be facing. Perplexity came up with these three: (1) How should the world structure AI governance to ensure both innovation and collective safety, following the recent UN General Assembly decision to create global oversight panels? (2) Will major companies and nations implement meaningful, enforceable AI governance to comply with the new EU AI Act and similar regulations—or will compliance remain superficial? (3) Can the international AI community overcome short-term competitive pressures to prioritize responsible development, given the accelerating risks of rapid deployment without oversight?

For the month of October, I decided to ask DeepSeek to generate a set of three critical decisions that were outside this box and perhaps a degree more fundamental. I said, “I’ve learned that, as a global AI community, the hardest thing to do is to get everyone to agree on the most critical decisions that we’re facing. It seems we’re all barking up the same tree, trying to come up with rules and regulations that we all feel are necessary to navigate a safe path to tomorrow.”

I shared Perplexity’s decisions and asked, “Can you cut to the chase and come up with perhaps a different and maybe better or more fundamental set of decisions, expressed in three short, clear sentences?”

DeepSeek’s Response

Of course. You’re right that the existing questions, while important, are stuck in the “how” of the current political and corporate landscape. They assume the existing framework.

The more fundamental decisions cut to the “why” and “what for” of AI itself. They are foundational choices that must be made before the governance questions can be meaningfully answered.

Here is a set of three more fundamental decisions, expressed in short, clear sentences:

- Is advanced AI a common good for humanity to steward, or a proprietary tool for entities to own and control?

- Should the primary goal of AI be to optimize for economic efficiency, or to augment human well-being and agency?

- Do we define “safety” as the absence of catastrophic risk, or as the presence of equitable and verifiable benefit?

These questions force a confrontation with our underlying values, moving beyond compliance to address the ultimate purpose and distribution of power that will be shaped by this technology.

What do you, the reader, think?

In our closing conversation, I said, “I really like the duality, the continuums with clear variables on opposite ends,” to which DeepSeek replied, “Framing these decisions as continuums really does help to clarify the core tensions at play. It’s a massive conversation, and exploring it from this foundational level is the best way to start. The choices we make on these fundamentals will ripple out and shape everything else.”

Acknowledging the extremes of each question and the tensions they create might be a good place to start, and doing so could clarify acceptable middle grounds.

Please take the time to share your thoughts in the discussion that’s attached to this post. If you haven’t posted in ETC previously, your comments will await approval before publishing. I regularly check the incoming comments and will approve them as quickly as possible.


Hi Jim,

Isn’t it possible for the answers to all three questions to be “both”? I am not fond of dichotomous questions. Your concluding remarks appear to favor my view.

The world has split, unfortunately for mankind. We used to be better at finding ways forward. I hope rationality can return soon.

— Harry

I’m with you, Harry, and I believe DeepSeek is, too. Life and its artifacts, such as AI, are open systems stretched out on a continuum between anti-entropy and entropy, and maximizing growth (change) while minimizing harm to our environment is critical. We can’t reverse the universe’s path to entropy, but we can and must slow it down with carefully constructed ecosystems. The goal is a balance with a slight tip toward controlled growth, a kind of working compromise or energy transaction. As much as we might bristle at rules and regulations that bottleneck progress, we have to seek a modified middle ground to sustain life. -jim